Here's the recording from our DevOps “Office Hours” session on 2020-02-05.

We hold public “Office Hours” every Wednesday at 11:30am PST to answer questions on all things DevOps/Terraform/Kubernetes/CICD related.

These “lunch & learn” style sessions are totally free and really just an opportunity to talk shop, ask questions and get answers.

Register here: cloudposse.com/office-hours

Basically, these sessions are an opportunity to get a free weekly consultation with Cloud Posse where you can literally “ask me anything” (AMA). Since we're all engineers, this also helps us better understand the challenges our users have so we can better focus on solving the real problems you have and address the problems/gaps in our tools.

Machine Generated Transcript

Let's get the show started.

Welcome to Office hours.

It's February 5th 2020.

My name is Eric Osterman and I'll be leading the conversation.

I'm the CEO and founder of Cloud Posse.

We are a DevOps accelerator.

We help startups own their infrastructure by building it for you and then showing you the ropes.

For those of you new to the call the format is very informal.

My goal is to get your questions answered.

So feel free to unmute yourself at any time if you want to jump in and participate.

If you're tuning in from our podcast or on our YouTube channel, you can register for these live and interactive sessions by going to cloudposse.com/office-hours.

We host these calls every week and will automatically post a video recording of this session to the office hours channel, as well as follow up with an email.

So you can share it with your team.

If you want to share something in private, just ask, and we can temporarily suspend the recording.

And with that said, let's kick it off.

So I don't have really any demos prepared for today or more interesting talking points.

One of the things that we didn't get to cover on the last call was what some of the interesting DevOps interview questions are. What are some of your favorites?

Interestingly enough.

This has come up a couple of times lately in the jobs channel either people going in for hiring or people using some of these questions in their recent interviews.

So I'd like to hear that. Then there's Brian Tyler, who's a regular here.

He hasn't joined yet.

So we'll probably cover that as soon as he joins.

He has been working on adopting Prometheus with Grafana on EFS, but he has a very unique use case compared to others.

He does ephemeral blue-green clusters for their environments, and he's had a challenge using the EFS provisioner on ephemeral clusters.

So you need a different strategy.

So we're going to talk about what that strategy could look like.

But I'm going to wait until he joins.

I work with Brian. I'll go grab him now.

OK, awesome.

Thanks, Andrea.

All right.

In the meantime, let's turn the mic over.

Anybody else have some questions, something interesting they're working on, something they want to share?

It's really open ended.

Well, I've been doing this as a skunkworks project at the office for the last couple of months.

I'm putting together a showcase of open source DevOps tools.

They don't have to be open source, but they have to be DevOps tools.

OK.

So if anybody has something they want to contribute, or any experiments they want to run, or anything like that, they're welcome to do that.

Cool, can you just mention that in the office hours channel so that they know how to reach you? So Mike, I'm going to put you on the spot here.

What are you working on.

How'd you end up in office hours?

Yeah, so my company recently started using Terraform, and we found the Cloud Posse templates to be very helpful, especially when learning it all.

And so I just came here to find out some practices beyond what's available online. A bunch of us on our team actually read Terraform: Up & Running, the second edition.

Yes, that's a good one.

Yeah Yeah.

So we're just trying, and me more specifically, to find best practices.

And I guess one of my questions was going to be: how does everybody lay out their Terraform? I understand the concept of modules being reusable, but the next step is defining different environments.

So we're going to be using separate AWS accounts for different environments.

And so I just wanted to get more expert advice from you guys, and also just learn more about DevOps in general.

Yeah, it's a broad definition.

Everybody has their own definition of DevOps.

So Yeah, it's loaded.

Really we don't even have to talk about that.

I go there to block all.

So Yeah, it's a hot topic.

I'm sure a lot of people here can share what their structure is.

There's no canonical source of truth, but I would also like to say that there has been a pretty standardized convention for how to lay out projects for Terraform that I think is being challenged with the release of Terraform Cloud.

And what I want to point out here is that the HashiCorp docs have actually gotten a lot better, especially in becoming a little bit more opinionated or idiomatic on how to arrange things.

And that's a good thing, because they're the maintainers of Terraform.

So one of the things I came across a few months ago, when I was looking to explain this to a customer, was what the different strategies are.

And here they lay out, for example, the common repo architectures. My mouse is hanging and I can't scroll down.

This is the problem with screen sharing.

All right.

I'll wait for that to wake up and continue talking. What it lays out here is basically three strategies.

One is the strategy that HashiCorp is now recommending, which you can briefly see at the top of my screen here, which is using a monorepo, or using kind of poly-monorepos.

What I mean by that is maybe breaking it out by purpose.

So maybe you have one repository for networking, one repository for some team's project, or something like that.

But what you do is you don't have environment... Let me see if I can... It's waking up, my mouse is going crazy. There you go.

So: multiple workspaces per repo.

So what HashiCorp started doing was saying, hey, let's use the workspaces concept for environments.

Even though originally they said in their documentation, don't use workspaces this way.

So I don't know if you saw it anywhere, but now they've done a mea culpa on that, an about-face, and we've been taking a second look at this.

And I think that it's making projects a lot easier actually for developers to understand when you just have the code and you just have the configuration and you don't conflate the two.

So the other approach is more traditional in development: you have maybe a branch for each environment.

That's a controversial thing as well.

I don't like it because you have long-lived branches, and keeping them in sync, handling merge conflicts, and making sure they don't diverge still takes extra effort.

Also, if you're an organization that believes in trunk-based development, then a branching model with long-lived branches like this isn't ideal.

And then there's the directory structure.

This is what I was going to get to.

This has been the canonical way of organizing Terraform projects, maybe in large part because Gruntwork has a lot of influence in this area, with the usage of Terragrunt and tools like that.

This has been a good way of doing it, but there are some problems with this.

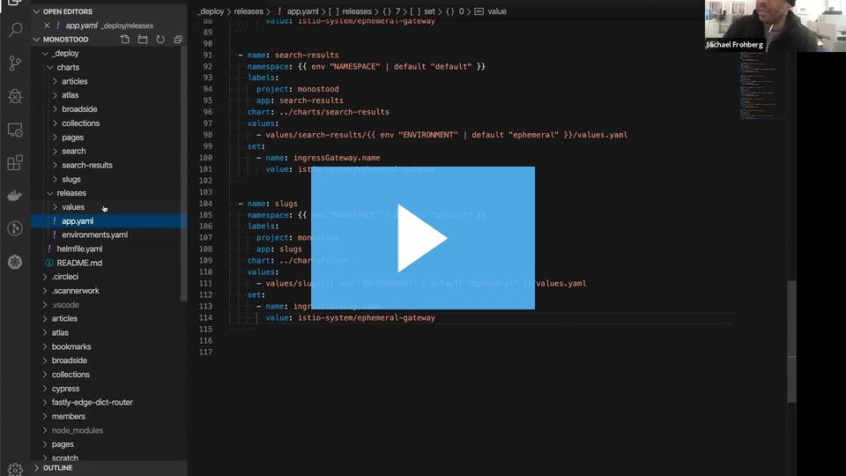

So what I like about it: first of all, you have the separate modules folder, and the modules folder has what we call root modules, the top-level implementations.

And those are reusable.

That's like your service catalog for the developers.

And then you have your environments here, and those describe everything that you have for production, everything that you have for staging. These might be broken out more; you should have project folders under prod.

You wouldn't have them.

This would be considered a monolithic project, or terralith.

You don't want terraliths, right.

So under prod you'd maybe have VPC, you'd have maybe EKS, and you'd have maybe some other project or service that you have.

And then under there, all the Terraform code.

The problem is that these things still end up diverging, because you have to remember to open pull requests to promote those changes to all the environments, or you have to create one heavy pull request that modifies all those environments.

This has been a big pain for us.

Even at Cloud Posse.

So we started off with an interesting approach at Cloud Posse, which is to treat accounts like applications.

And in that case, when I say accounts, I mean Amazon accounts.

So the root account, or your master account, is one configuration; then you have your staging configuration, your core configuration, your data configuration.

And what I love about this is you have a strict shared-nothing approach.

Even the Git history shares nothing, and share-nothing has kind of been this holy grail to reduce the blast radius.

The other thing is, when your webhooks fire, they only fire on the dev account.

And because we have these strict partitions there's no accidental mistakes of updating the wrong environment.

And every change is explicit.

Now there is a great quote in a podcast that just came out the other week, on The Changelog, where they interview Kelsey Hightower. I think the topic was something like the future is monoliths.

And this is the constant battle of bundling and unbundling: basically, you consolidate and then you expand.

And then you expand and you realize that that doesn't work well, so you consolidate, and then you expand again, and so on.

But my point here is more one of the things he said, which was that the problem with microservices is that it requires the developers to have a certain level of rigor.

I'm paraphrasing in my own words.

It's asking that of an organization that wasn't practicing it before.

So how are they going to get it right this time by moving to microservices?

I want to find the exact quote somewhere; maybe somebody can post it in office hours if they have it handy.

But that was it.

So that's the thing here.

What I describe here.

This is beautiful.

And when you have a well oiled machine that is excellent at keeping track and promoting changes to all your environments and no change is left behind, then it works well, but this is an unrealistic expectation.

So that's why we're considering the HashiCorp-recommended practice now of using multiple workspaces per repo. Under this model, when you open up a pull request, what you see is: OK, here's what's going to happen in production, staging, and anywhere else you're using that code. Because inadvertently you might have drift, either from human monkey patching by going into the console, or maybe applies failed in some environments.

And that was never addressed.

So now you have diverged that way.

That's a valid error. Or maybe you have other Terraform code that has imported the state or something, and it's manipulating those same resources.

There have been bugs in Terraform providers and all that stuff.

So I want to see, when I open up a pull request, what's going to happen to all those environments. That's what I really like about this workspace approach.

That's the opinion we keep getting like we'll see people that broke it out in a similar way that I can do Adam cat home experts where you'll have like the different end of US accounts and then it's free and it's interesting to me that you're like, well, now we're going to try this multiple workspaces so tight.

Where do I go.

Where do you go from here.

And you're right to feel frustrated and you know the ecosystem is in frustration towards you.

No no I know, just in general.

I would say that in software development, more or less, the best practices for how to do your pipelines and promote code are very well understood.

And we're trying to adapt some of those same things to infrastructure.

The problem is that we're different.

We're operating at different points in the software development lifecycle and the capabilities at our disposal are different.

So let's take, for example, infrastructure.

If you listen to this podcast or talk to us, I always talk about this.

It's like the four layers of infrastructure: you've got your foundational infrastructure, then you've got your platform, and then on top of your platform you've got your shared services.

And then the last layer is your applications. Most people working with this stuff assume layers one through three exist and don't have to worry about it.

It's a separation of concerns.

All they care about is deploying their app.

They don't have any sympathy or empathy for how you manage Layers 1 through 3.

But if you're in our industry, that's one of the biggest problems is that we have to maintain stability of the platform while also providing the ability to run multiple environments for developers and stuff like that.

So my reason for bringing up all of this is that Terraform as a tool doesn't work like deploying your standard microservice, your standard Go, Rails, or Node app or whatever.

If you deploy a Node app and it fails, you can roll back, or you didn't even need to expose that error at all because your health checks caught it and you're running parallel versions, et cetera. When you're dealing with infrastructure and using a tool like Terraform, it's a lot more like doing database migrations without transactions.

So there is no recourse.

So how do you operate when you're dealing with this really critical stuff in a place where you have poor recourse.

So you need good processes for that.

And let's see here.

So that's why we're still trying to figure this out, I think, as it relates to DevOps: what is the best course of action?

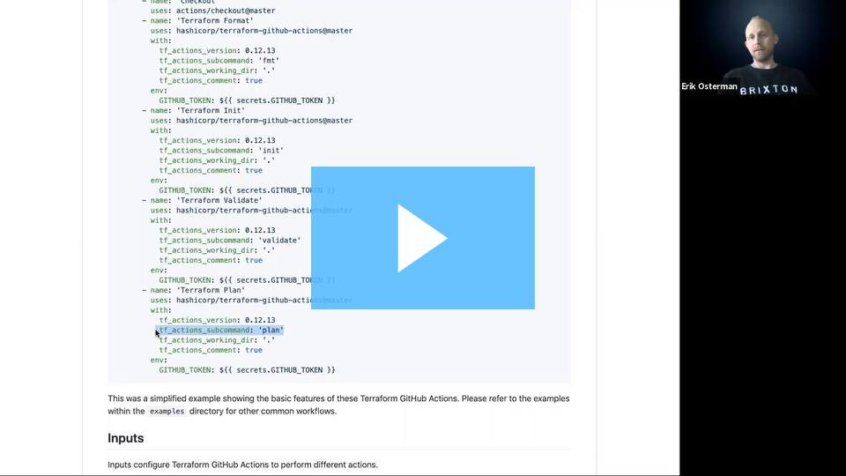

There's been Atlantis.

There's been Terraform Cloud. A lot of people are using Jenkins to roll this out.

Some people combine things like Spinnaker with it, so you can orchestrate the whole workflow, because the problem is your standard CI/CD systems aren't the answer for this.

I don't think anybody's nailed it yet.

We're all so that it's a fair question if you go back to your cat home experts and you can click on one of those accounts.

I'm just curious now: are you still referring to the Cloud Posse modules, or do you build modules within here?

No It's really all just config.

Both both.

So let me describe that a little bit more of what our strategy has been kind of like food for thought.

So here I have one of these AWS accounts, and under conf we have the projects.

So this is kind of like the microservices of Terraform.

So it's not a monolith; it's a microservice architecture, so to say.

If I look in any one of these, we've been using a pattern similar to what you'd get with Gruntwork, which is where you have a centralized module repository.

So here we have terraform-root-modules at Cloud Posse.

So where you pull those root modules from really doesn't matter. You could have a centralized service catalog at Cloud Posse, which works well for our customers, because our customers are implementing our way of doing things.

Now, depending on how much experience and how many opinions you have about this stuff, you could fork our modules, or you could start your own.

And what we have typically is the customer has their own modules.

We have our own modules, and you kind of pick and choose from those. Here, what we have is we're using environment variables with Terraform. This pattern worked really well in Terraform 0.11, but in Terraform 0.12 Terraform broke a fundamental way we were using the product.

So when you run terraform init -from-module and you point it at a module, you used to be able to initialize your current working directory with a remote module, even if your working directory had a file in it.

So that worked really well.

We didn't need any wrappers, we didn't need any tools.

But then Terraform 0.12 comes out and they say, no, the directory has to be empty.

Oh, and by the way, many Terraform commands, like terraform output, don't allow you to specify where your state directory is so that it could get the outputs.

So with most commands you can say terraform plan in this directory, terraform apply this plan file or in this directory, terraform init in this directory.

But other commands, like terraform show, I think, and terraform graph and terraform output, don't allow you to specify the directory.

So there's an inconsistency in the interface of Terraform and then they broke the ability to initialize the local directory.

So anyways, my point with this is saying perhaps there is some confusion on our side as well, because the interface was changed underneath us.

So going back to your question under here.

So then if you go to this folder here, and you go to terraform-root-modules, here are a bunch of opinionated implementations of modules for this company.

So here's the idea: as the company grows in sophistication, you're going to have more opinions on how you're going to do EMR, how you're going to do Postgres, how you do all those things.

And that's the purpose of this.

And then a developer all they need to know is that look, I can follow best practices.

If I point to that.

And then your DevOps team, or the stakeholders with that label, so to say, can be deciding what the best practices are for implementing those modules. And then you've seen the Cloud Posse ones.

Here we have our terraform-root-modules.

Now, I want to preface this: we have these as kind of boilerplate examples for how to implement common things, but they're not necessarily the canonical best practice for implementing them.

So our EKS root module here implements a basic EKS cluster with a single auto scaling group, basically a single node pool.

Now that gets complicated, right, because some companies are going to need a node pool with GPUs, they're going to need a high-memory node pool, they're going to need a high-CPU pool, and how you mix and match that...

That's too opinionated for us to generalize.

Yeah.

Well, that's all I can say is yes.

Now, I guess.

And then what.

Yeah, and that's kind of what we're going through now just figuring out what works for us deciding on the different structures of everything and definitely taking advantage or looking at what you guys have already done and looking at a lot of things.

And just reading all over.

So yeah.

Well, there are a lot of good Reddit posts.

You know, everyone happens to blog on Medium.

So take Jake out.

Exactly. And I think that's the other thing: just finding documentation on 0.12 compared to 0.11.

And you know, refining my Google searches and only searching the past like five month type of thing.

That's a good point.

Yeah, there's not.

You can end up on a lot of outdated stuff, especially with how fast this stuff moves.

So, you know, I was reading a blog from July of 2019 and blindly assumed that they were talking about 0.12, when in fact they were talking about 0.11.

So yeah, but I got to move on to the next question.

I see Brian Tyler joined us.

He's over at AuditBoard, and he's been working on setting up Prometheus with EFS on ephemeral, short-lived clusters.

Kind of an interesting problem.

For those of you who attended the last few calls, this Prometheus-on-EFS thing has come up quite a lot.

I want to just kind of tee this question up a little bit and frame it for everyone.

So at Cloud Posse we've been supporting a lot of different monitoring solutions, but we always come back to: yeah, Prometheus with Grafana is pretty awesome, the Kubernetes community provides a lot of nice dashboards, and it's proven to scale.

So one of the patterns we've been using, that others here have used as well and that works pretty well, is to actually host the Prometheus time series database on EFS.

And I guess your mileage will vary, and if you're running at Facebook scale, yeah, you're probably going to need to use Thanos or some bigger things.

But EFS is highly scalable.

It's POSIX compliant and it works ridiculously well with Prometheus for simple implementation.

The problem that Brian has is his clusters are totally ephemeral. When they do a rollout, they bring up a whole new cluster and deploy a whole new stack to that.

And then validate that that works.

And shut down the old one.

And with Prometheus and EFS, what we've been using is the EFS provisioner, and with the EFS provisioner it'll automatically allocate a PVC, a persistent volume claim, on your EFS file system.

The problem is those IDs are unique.

They're generated by the Kubernetes platform.

So if you have several clusters, how do you reattach to an EFS file system if you're using the EFS provisioner?

Well, the kicker is, if you are doing it this way, then maybe the EFS provisioner isn't the right tool.

You can still use EFS, but the provisioner isn't going to be the right approach.

And instead, what you're going to need to do is mount the EFS file system to the host using the traditional methods of mounting EFS for your operating system.

So if you're using kops, you're going to use the hooks for when the service starts up and add a systemd hook that's going to mount that EFS file system to the host OS.

And now with Kubernetes, what you can do is mount the actual host volume that's been mounted there into your container, and then you are the decider of the naming convention at that point, how you keep it secure and everything.

Brian, is that clear?

I can talk more on that.

Yeah, no, that makes sense.

Yeah, it's unfortunate that I have to go that route.

But sometimes you don't get the turnkey solution.

Yeah, I mean, before the EFS provisioner we were using this pattern on CoreOS with Kubernetes for some time.

And it worked OK.

So I just wanted to point out, for those who are not familiar with the kops manifest and its capabilities: under Cloud Posse's reference architectures is what we've been using in our consulting engagements to implement Kubernetes clusters with kops.

I'm mostly speaking here to you, Mike, who is looking for inspiration.

This here is doing that polyrepo architecture, which I'm undecided on at this point, having gone from being totally gung ho.

So we go here now to the kops private topology, which is our manifest for kops.

What I love about kops is that kops basically implemented a Kubernetes-like API for managing Kubernetes itself.

So if you look at any CRD, they're almost the same as these.

But then there's this escape hatch that works really well, which is that you can define systemd units that get installed at startup.

So here you go.

Brian, you'll create a systemd unit that just calls ExecStart to mount the file system.

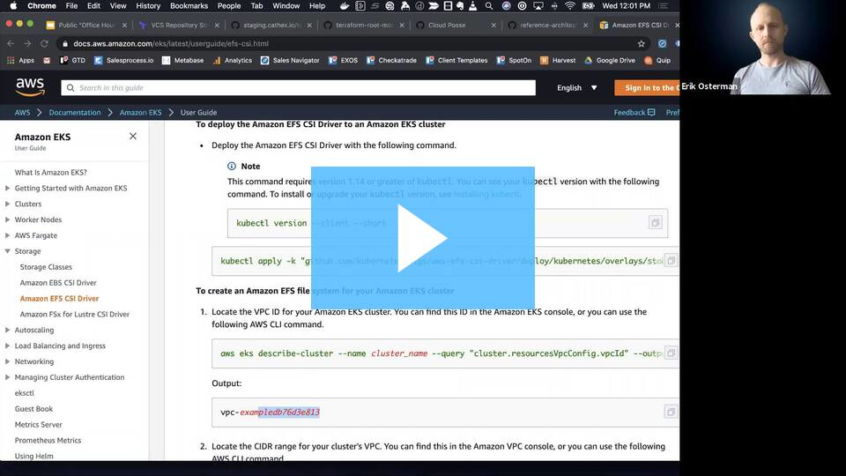

Yeah. Have you guys looked at the EFS CSI driver?

No. So maybe there are other, more elegant implementations.

I pasted it in the chat. It was what I was looking into next, which I believe could solve my problem for me, just because I create the PV itself.

Then I can define the path for that persistent volume.

Oh, OK.

Yeah, if you can define a predictable path, then you're around it.

Yeah, it's just that as long as you're in the territory of having random IDs, then yeah.

I believe if I'm creating that persistent volume myself, instead of doing the PVC route, then I can create the volume.

And then when I'm provisioning Prometheus, I can just tell it which volume to mount, instead of creating a PVC through the Prometheus Operator.

OK.
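As a rough illustration of the idea of creating the persistent volume yourself, here is a minimal Python sketch that renders a statically defined PersistentVolume for the EFS CSI driver as JSON, which kubectl can apply. The function name efs_persistent_volume, the volume name, the file system ID, and the efs-sc storage class are all placeholders chosen for this example, not values from the call; the capacity field is required by Kubernetes but is not enforced by EFS. How Prometheus is then pointed at the volume is not shown.

```python
import json
from typing import Any, Dict


def efs_persistent_volume(name: str, file_system_id: str,
                          capacity: str = "5Gi") -> Dict[str, Any]:
    """Build a static PersistentVolume manifest backed by an existing EFS file system.

    All argument values are supplied by the caller; nothing here is discovered
    from AWS. The storage class name "efs-sc" is an assumed placeholder.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteMany"],
            # Retain keeps the data when a short-lived cluster is torn down.
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "efs-sc",
            "csi": {
                "driver": "efs.csi.aws.com",
                "volumeHandle": file_system_id,
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name and file system ID, for illustration only.
    manifest = efs_persistent_volume("prometheus-efs", "fs-12345678")
    print(json.dumps(manifest, indent=2))
```

Because the volume name and file system ID are chosen up front rather than generated, a replacement cluster can declare the same volume and find the same data.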

I think that's the route I'm going to try first.

If not, then obviously the kops systemd one is the one that for sure will work.

Yeah, that's your plan B.

Andrew Roth, you might have some experience in this area.

Do you have anything to add to it?

I've never used a CSI driver. I've only used the EFS provisioner.

Our very own Certified Kubernetes Administrator: any insight here, Will?

Your initial thought was the tree my have to have decreased itself be attached.

And then have to continue from there.

The CSI driver before.

I've only just started to mess around with it.

But not enough experience to really say anything much.

Playing around with rook sort of when it's set.

All right.

Well, I guess that's the end of that question then unless you have any other thing you wanted to add to that, Brian.

No thank you.

Yeah Any other questions.

We got quite a lot of people on the call.

I just have a general question.

It's worn.

So I know in the company that I work for we use a lot of your repos.

I know a lot of it is revolving around AWS.

Now do you have any or do you plan on doing any repos for like maybe Digital Ocean.

That's a good question.

Fun fact is that we use a bit of Digital Ocean for our own POCs, to keep our costs low and manageable.

I don't know if we're going to really be investing directly in Digital Ocean, because of who we cater towards, which are more like well-heeled startups.

And they need serious infrastructure.

So I think Digital Ocean is awesome for POCs.

But I wouldn't want to base my $100 million startup on it.

Cool. I do have a comment also: we actually do use that process of using Terraform Cloud with the workspaces.

How's that working out for you?

Anything you want to add, like a use case or firsthand experience so far?

It's pretty good.

You know, anytime we have any issues with a certain, I guess, stage, we just have to do a pull request against that particular stage.

OK You just go from there.

And it's pretty simple.

You know, because I've only been involved with the company for a little over a year.

So which of the repo strategies are you guys using with Terraform Cloud?

The repo strategy, I guess, depends on the stage. We have stages like prod, dev, UAT, QA.

So like maybe UAT and support, they all branch off from the master branch.

OK, so you're using the branching strategy then, where UAT would be a separate branch?

No, in the Git repo it'll all be on the same branch.

OK, so anything that's merged into master... so you're using the official approach that they describe up here.

So it sounds like a single branch, like master; you branch from that and then merge back to it.

Yes, in the long run.

Yeah, but for the environments, is UAT just a workspace, or is UAT a project folder like this?

UAT will just be a workspace.

OK, so yeah, you're using that strategy.

The first strategy we were talking about here, multiple workspaces.

I wanted to expand on that, in that we have an older Terraform repo that has a few of them that are using workspaces, but we have a lot of stuff where we're using Terragrunt to manage it.

And I haven't sat down really to think about it.

But is there a best practice for managing workspaces via Terragrunt?

I was exploring that the other day, just as a POC, and out of the box Terragrunt does not support workspaces.

It's an anti-pattern for them.

And I would say, based on what I said earlier, that it used to be the official anti-pattern.

Like, you don't use workspaces for this. But even HashiCorp has done an about-face on this.

So with Terragrunt, I'm not sure if they're going to be adding support for it or even addressing this topic.

However, I was kind of able to get it to work using, and I don't know what the term was in Terragrunt, but I think the equivalent of hooks, right.

So I had a pre-hook for either the plan or the init or whatever.

I just used a hook to select the workspace, or create the workspace if the selecting failed.

And that seemed to more or less work for me.

So the challenge is you need to call this other command, which is terraform workspace select or terraform workspace new, and using the hooks was one way to achieve that.
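The select-or-create step described here could be sketched roughly as follows, in Python for illustration. The names ensure_workspace and run_terraform are hypothetical, and the wiring into a Terragrunt hook is not shown; only the terraform workspace select and terraform workspace new subcommands come from the discussion.

```python
import subprocess
from typing import Callable, List

# A runner takes terraform arguments and returns the process exit code.
Runner = Callable[[List[str]], int]


def run_terraform(args: List[str]) -> int:
    """Run a terraform subcommand in the current directory and return its exit code."""
    return subprocess.run(["terraform", *args]).returncode


def ensure_workspace(name: str, run: Runner = run_terraform) -> str:
    """Select the workspace, creating it only if selecting fails."""
    if run(["workspace", "select", name]) == 0:
        return "selected"
    if run(["workspace", "new", name]) == 0:
        return "created"
    raise RuntimeError(f"could not select or create workspace {name!r}")
```

A wrapper script or hook would call ensure_workspace("staging") before running plan or apply. The runner is injectable so the logic can be exercised without Terraform installed.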

You see here in a terminal window.

I have a question for those using Terraform workspaces.

I haven't been keeping too up to date with Terraform 0.12.

Have they made the change so that you can interpolate your state, like your state location, or not?

It's still not a thing. I can talk to that as well, actually.

Another vehicle that kind of.

So the OK.

So for everyone's clarification.

Let's see here.

That's a good example.

So the Terraform state backend with S3 requires a number of parameters, like the bucket name, the role you need to assume to use that bucket, key prefixes, all that kind of stuff.

And one thing that we've been practicing at Cloud Posse has been this thing of share nothing, right.

So here's an example of what Brian's talking about.

So you see this bucket name here.

You see this key prefix here.

You see the region.

Well, with the shared-nothing approach, you really don't want to share this bucket, especially if you have a multi-region architecture.

And if you want to be able to administer multiple regions when one region is down, you definitely have to have multiple state buckets, right?

However, because Terraform to this day does not support interpolation, basically using variables, for this, that's kind of limited how you can initialize the backend.

So one of the things that they introduced in a relatively late version of Terraform was the ability to set all the backend parameters with command line arguments.

So you could say terraform init, and I think the argument was like -backend-config equals bucket equals whatever, or something.

And then.

But then there was still no way to standardize that.

So that's why at Cloud Posse we were using this thing that you can use.

I'm going to type it out here.

So Terraform supports certain environment variables.

So you could say export TF_CLI_ARGS, it was kind of weird, TF_CLI_ARGS_init I think it was, equals, and then here you could say like -backend-config=bucket=my-bucket.

So because you had environment variables, you could kind of get around the fact that you can't use interpolation.
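To make the environment-variable workaround concrete, here is a small Python sketch that renders backend settings into the value for TF_CLI_ARGS_init, the documented per-command form of TF_CLI_ARGS for terraform init. The helper name backend_init_args and the example bucket and region are made up for illustration, and the sketch assumes keys and values contain no whitespace.

```python
import shlex
from typing import Dict


def backend_init_args(settings: Dict[str, str]) -> str:
    """Render backend settings as -backend-config=key=value flags for terraform init.

    Assumes keys and values contain no whitespace, so no inner quoting is needed.
    """
    return " ".join(
        f"-backend-config={key}={value}" for key, value in settings.items()
    )


if __name__ == "__main__":
    # Hypothetical bucket and region, for illustration only.
    value = backend_init_args({"bucket": "my-bucket", "region": "us-west-2"})
    # Print a line that could be placed in a Makefile include or wrapper script.
    print(f"export TF_CLI_ARGS_init={shlex.quote(value)}")
```

With that variable exported, a plain terraform init picks up the backend settings, so each account or stage can supply its own bucket without interpolation in the backend block.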

Are we clear up to this point, before I do my soul-searching moment here?

For me, this is clear.

Yeah OK.

So I'm just one voice.

Eric, are you trying to share your screen?

Yeah, you might need to.

It's definitely being shared because I see the green arrow around my window.

You might need if you're in Zoom you might need to tab around to see it.

Yeah, I can see it in here.

Yeah zoom is funky like that.

I see it now.

Yeah So you see here.

I might be off by some camel case, some capitalization here somewhere, maybe.

But it is more or less what you can do to get around it.

So you can stick that in your Makefile.

You could have your users set it, or you could have your wrapper script.

OK, and then the last option.

If you're using Terragrunt, Terragrunt will help set this up for you by passing these arguments for you in your terragrunt.hcl.

So there are options right.
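
To make the Terragrunt option concrete, here is a sketch that writes a minimal `terragrunt.hcl` with a `remote_state` block. The block and the `path_relative_to_include()` helper are Terragrunt features; the bucket, region, and table names are placeholders, and the file is written to a temporary directory so nothing real is touched.

```shell
workdir="$(mktemp -d)"

# Quoted 'EOF' keeps the shell from expanding ${...}; Terragrunt evaluates it.
cat > "${workdir}/terragrunt.hcl" <<'EOF'
remote_state {
  backend = "s3"
  config = {
    bucket         = "my-terraform-state"   # placeholder
    key            = "${path_relative_to_include()}/terraform.tfstate"
    region         = "us-west-2"            # placeholder
    encrypt        = true
    dynamodb_table = "my-lock-table"        # placeholder
  }
}
EOF

cat "${workdir}/terragrunt.hcl"
```

Terragrunt turns this block into the backend arguments for `terraform init`, so the settings live in one file instead of in every engineer's shell.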

But a lot of these are self-inflicted problems based on problems that we have created.

Like I said, self-inflicted problems we've created for ourselves, right?

The problem we created for ourselves at Cloud Posse was this strict adherence to: do not share the Terraform state bucket across stages and accounts, always provision a new one.

This made it very difficult when you wanted to spin up a new account, because you had to wire up the state.

In managing Terraform state with Terraform, we have a tfstate-backend module, and we use it all the time.

It works, but it's kind of messy when you've got to initialize Terraform state without Terraform state using the module.

So it creates the bucket, the DynamoDB table and all that stuff.

Then you run some commands via script to import that into Terraform, and now you can use Terraform the way it was meant to be used, with this bucket that was created by Terraform, but it's real.

And you see you see the catch-22 here.
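
The bootstrap sequence behind that catch-22 can be sketched as a dry run. The exact script used was not shown on the call, so the three steps below are an assumption about its general shape; `-force-copy` is a real `terraform init` flag that migrates existing state to a newly configured backend without prompting. The `run` helper is hypothetical and only prints each command.

```shell
# Dry-run helper (hypothetical): prints each command instead of executing it
run() { printf '+ %s\n' "$*"; }

bootstrap_state() {
  run terraform init              # 1. no backend block yet, so state is local
  run terraform apply             # 2. creates the bucket and the lock table
  run terraform init -force-copy  # 3. after adding the s3 backend block,
                                  #    copy the local state into the new bucket
}

steps="$(bootstrap_state)"
echo "$steps"
```

Repeating these steps for every account is the overhead being described.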

And if you're doing this at every account is that idea.

So Hashi...

So Gruntwork took a different approach with Terragrunt.

In Terragrunt, they have the tool, Terragrunt, provision the DynamoDB backend and the S3 bucket for you.

So it's kind of ironic that your whole purpose with using Terraform is to provision your infrastructure as code, and then they're using this escape hatch to create the bucket for you.

And so.

So let's just say that that was a necessary evil.

I'm not...

No, I get it.

It's a necessary evil.

Well, on the topic of necessary evils let's talk about the alternative to this to eliminate like all this grief that we've been having.

Screw it.

Single state bucket and just use IAM policies on path prefixes on workspaces to keep things secure.

The downside is yes, we've got to use IAM policies and make sure that there's never any problems with those policies, or we leak state.

But it makes everything 10x 100x easier.
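
A sketch of what "IAM policies on path prefixes" might look like for a single shared bucket. It assumes state objects are laid out as `<prefix>/<workspace>/...`; the bucket, prefix, and workspace names are placeholders. Permissions for the lock table are left out to keep it short.

```shell
# state_policy BUCKET PREFIX WORKSPACE -> prints an IAM policy document (JSON)
state_policy() {
  bucket="$1"; prefix="$2"; workspace="$3"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::${bucket}/${prefix}/${workspace}/*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::${bucket}",
      "Condition": {"StringLike": {"s3:prefix": "${prefix}/${workspace}/*"}}
    }
  ]
}
EOF
}

policy="$(state_policy my-terraform-state eks dev)"
echo "$policy"
```

A mistake in either `Resource` line is exactly the kind of policy problem that would leak another environment's state.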

It's like using.

So one of the things Terraform Cloud came out with was managed state.

And that's free forever, or whatever you believe.

But just having the managed state made a lot of things easier with Terraform Cloud.

I still like the idea of having control over it.

With Terraform state in S3 on Amazon, we manage all the policies and access to that.

So that's what.

All right.

So when you're using workspaces together with the state storage bucket, the other thing you've got to keep in mind is using this extra parameter here.

So workspace_key_prefix.

So if you're using the shared S3 bucket strategy, then you're going to want to always make sure you set the workspace_key_prefix so that you can properly control IAM permissions on that bucket based on workspaces.

So a workspace might be dev.

A workspace might be prod.

Thank you for explaining that, it cleared up some confusion when it comes to workspaces.

But you said, where do you keep the state?

But if you divide that all up with IAM roles and policies, it can be done by keeping it in a single state.

A single bucket, I should say.

One of the reasons why we haven't liked this approach is that...

OK Let's say, OK.

And I just want to correct one thing I was kind of saying it wrong or misleading.

So the workspace key prefix would be kind of your project.

So if your project is EKS, the workspace key prefix would be eks, and then Terraform automatically creates a folder within there for every workspace.

So there'll be a workspace folder for the prod workspace, and so forth.

So there's that.
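
The layout just described can be sketched as follows. `workspace_key_prefix` is a real setting of the S3 backend, and non-default workspaces are stored at `<workspace_key_prefix>/<workspace>/<key>`; the bucket and region values are placeholders, and `state_key` is a hypothetical helper that only mirrors that path rule.

```shell
workdir="$(mktemp -d)"

cat > "${workdir}/backend.tf" <<'EOF'
terraform {
  backend "s3" {
    bucket               = "my-terraform-state"   # placeholder
    key                  = "terraform.tfstate"
    region               = "us-west-2"            # placeholder
    workspace_key_prefix = "eks"                  # one prefix per project
  }
}
EOF

# state_key PREFIX WORKSPACE KEY -> object path the S3 backend uses
# (the default workspace is stored at KEY with no prefix)
state_key() {
  if [ "$2" = "default" ]; then
    echo "$3"
  else
    echo "$1/$2/$3"
  fi
}

state_key eks dev terraform.tfstate
state_key eks prod terraform.tfstate
```

Since each workspace lands under its own folder, an IAM policy can grant access to `eks/dev/*` without exposing `eks/prod/*`.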

Now, why we haven't liked this approach is this.

So IAM is production grade, we're using IAM in production, and let's say this bucket is in our master AWS account, and we're using IAM there, and we're using IAM to control access to dev, or control access to the staging workspaces, or control access to some arbitrary number of workspaces.

What we're actually doing is we are modifying production IAM policies without ever testing those IAM policies in another environment.

By procedure, like, we're not enforcing it.

You can still test these things.

But it's on your own accord that you're testing that somewhere else.

And that's what I don't like about it, is that you're kind of doing the equivalent of cowboy IAM in production, obviously with Terraform as code, but nonetheless.

You almost need a directory server for this sort of thing.

Yeah Yeah Yeah Yeah.

That's interesting.

Is there IAM directory integration?

I haven't looked at trying to do that.

But Yeah.

So sorry, I've got some comments here by Alex.

If you update the referenced version in dev, but then get pulled away, and it gets forgotten in later environments, coming back to it is, like, difficult.

So then coming back and being like, what did we drop the ball on, requires some fancy diff work and just tedious investigation.

I kind of want a dashboard that tells me what version of every module I'm referencing in each environment.

This doesn't cover everything, but doing cool stuff like this is just messy, so yeah.
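
A crude version of the dashboard Alex is asking for can be sketched with `grep`, assuming a directory-per-environment layout and module sources pinned with `?ref=`. The directory names, the module, and the `example.com` URL are all made up for illustration.

```shell
# Build a throwaway layout with one directory per environment
root="$(mktemp -d)"
mkdir -p "$root/dev" "$root/prod"
printf 'module "vpc" {\n  source = "git::https://example.com/vpc.git?ref=tags/0.8.1"\n}\n' > "$root/dev/main.tf"
printf 'module "vpc" {\n  source = "git::https://example.com/vpc.git?ref=tags/0.7.0"\n}\n' > "$root/prod/main.tf"

# report ROOT -> one "environment<TAB>pinned ref" line per module source
report() {
  for env_dir in "$1"/*/; do
    env_name="$(basename "$env_dir")"
    grep -ho 'ref=[^"]*' "$env_dir"*.tf | while read -r ref; do
      printf '%s\t%s\n' "$env_name" "${ref#ref=}"
    done
  done
}

report "$root"
```

Here dev is on `tags/0.8.1` while prod is still on `tags/0.7.0`, which is the forgotten promotion being described.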

So Alex.

So Alex Siegmund is actually one of our recent customers and one of our recent engagements, and I'm not...

So one of the problems that we have in our current approach, which we've done up to this point, has been that you might merge PRs for dev and apply those in dev, but those changes never get promoted through the pipeline.

And then they get lost and abandoned.

And that is the whole problem, I argue, with the polyrepo approach that we've been taking.

But it's also the problem with the directory structure approach that's been the canonical way of doing things in Terraform for some time.

The prod directory, the dev directory.

All of those things have the same problem, which is you forget what's been rolled out.

So that's why I like this approach where you have one PR and you never...

OK, there's variations of this implementation.

One variation is that the PR is never merged until there is a successful apply in every environment.

So then that PR is that dashboard that Alex is talking about.

He wants a dashboard that tells what version has been deployed everywhere.

Well, this is kind of like that dashboard when that PR is open.

Then you know that there's an inconsistency across environments.

And based on status checks on your pull request, you see success in dev, success in staging.

And no update from production.

OK, that's pretty clear now where it's been updated.

Versus this approach where you then merge it, and then you need to make sure that whatever happens after that has been orchestrated in the form of a pipeline where you systematically promote that change to every environment that it needs to go to after that.

But now the onus is back on you, that you have to implement something to make sure that happens.

In Terraform Cloud, they have this workflow where it will plan in every environment.

And then when you merge, you can set up rules, I believe, on what happens when you merge.

So when you merge, maybe it goes automatically out to staging, and then you have maybe a process of clicking the button to apply to the other environments.

What's nice about this is you'll still see that that has not been applied to those other environments.

And you need that somewhere.

So whether you use the pull request to indicate that that's been applied or whether you use a tool like Terraform cloud to see that it's been applied or whether you use a tool like Spinnaker to create some elaborate workflow for this.

That's open ended.
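
The "never merge until every environment has applied" variation described above can be sketched as a simple gate. The `env=status` argument format and the status names are assumptions for illustration; in practice the statuses would come from the pull request's status checks.

```shell
# may_merge ENV=STATUS... -> exit 0 only if every environment reports "success"
may_merge() {
  for entry in "$@"; do
    env_name="${entry%%=*}"
    status="${entry#*=}"
    if [ "$status" != "success" ]; then
      echo "hold: ${env_name} is ${status}"
      return 1
    fi
  done
  echo "ok to merge"
}

# An open PR with production still outstanding stays open
may_merge dev=success staging=success prod=pending || true
```

While the gate fails, the open pull request itself is the record that environments are out of sync.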

Let's see.

I think we've got some more messages here.

You've removed the need for such a dashboard by making it part of your process, ensuring that it's in all repositories or environments.

Yes So I'm still not 100% sure.

OK, awesome.

So Yeah, Alex says that this alternative strategy we're proposing eliminates some of these needs for doing that because in the other model the process is basically the onus is on you to have the rigor to make sure these things get rolled out everywhere.

And especially the larger your team gets, the less oversight there is ensuring that that stuff happens.

And so one thing I'm personally taking away from this is to try the workspace thing recommended in the Terraform docs first, and then share and report back.

All these pitfalls.

Yes, also, I am...

And to be candid, I have not...

I have not watched or listened to it yet.

And you're going to find detractors for this, right.

But that's good.

In any rich ecosystem there are conflicts, and Anton, Anton Babenko, was it, is it FOSDEM, or I forget what the conference is, he has just done a presentation unselling everything I said and saying why you have to have the directory approach.

So that might be a video to watch.

Is it good.

Anton is a great speaker.

I know, I'll look. I actually met this guy upstairs.

Yeah Yeah.

He was there last year.

Yeah super nice guy.

He's awesome.

Yeah, I really like...

I like him.

I met him up in San Francisco and at re:Invent, and I think this guy goes to like 25 conferences a year or something.

It's a.

So you were saying he kind of has the recommendation, even with 0.12, to stay with the directory approach?

I think so.

So he's a very big Terragrunt user.

And Terragrunt is a community in and of itself with their own ways of doing things.

So therefore, I suspect this will be very much promoting that, because in the abstract it was something like, why you have to do this this way.

I'll find it though while this is going.

Any other questions?

I have a bit of a question that is kind of a little bit higher level.

But my experience with Terraform is that when you use it, it's kind of very low level.

It's fairly abstracted from the API.

And you have, of course, the built-in kind of semantics that gives you rails, as it were, sort of like, you know, this is how we do state transitions.

So we do this.

So we do that.

And it's kind of like, you know, operate inside of that construct.

What's your experience with, or thoughts around, using higher-order constructs like what's available with the AWS CDK, for example, and some of the things you could do with that in a Turing-complete language?

Yeah Yeah.

It's good.

I like the question.

And let's see how I answer it.

So this has come up recently with one of our most recent engagements, and the developers on that team kind of challenged us, like, why are we not using CDK for some of this stuff?

Let's be, like, totally honest.

That, like, CDK is pretty new.

And we've been doing this for a long time.

So our natural bias is going to be that we want to use Terraform because it's just a richer experience.

But there's a lot of other reasons why I think one can argue for using something like Terraform and not something that's more Turing complete like CDK or Pulumi, and that's that requirements don't always translate to awesome outcomes or products.

And the problem is that when you can do anything possible, every way possible, you get into this scenario of why people hate Jenkins and why people hate, like, Groovy pipelines in Jenkins, because you develop these things that start off quite simple, quite elegant.

They do all these things you need, and then 25 people work on it and it becomes a mush of pipeline code, a mush of infrastructure code.

If we're talking about the CDK, right.

And things like that.

This is not saying you can't use it.

I'm thinking more like there's a time and place for it.

So if we talk about Amazon in the Amazon ecosystem.

The example I like to bring up is ECS.

ECS has been wildly popular as a quick way to get up and running with containers in a reliable way that's fully integrated with AWS services.

But it's been a colossal failure when you look at reusable code across organizations.

And this is where Kubernetes just kicks butt over ECS.

Yes.

So in Kubernetes, Helm quickly became the dominant package manager in this ecosystem.

Yeah, there's been a lot of hatred towards Helm for some security things or whatnot, but it's been incredibly successful, because now there are literally hundreds and hundreds of Helm charts, many written by the developers of these things, to release and deploy their software.

The reason why I bring up Helm and Kubernetes is that's proved to be a very effective ecosystem.

We talk about Docker same thing incredibly productive ecosystem.

And so with Docker Hub.

There's this registry.

And you know security implications aside there's a container for everything.

People put them out there, your mileage may vary, and some might be exploitable, but that's part of the secret of why Docker has been so successful.

It's an easy DSL, an easy distribution platform, and it works everywhere.

Same pattern like going back in the days to Ruby gems and then you know Python modules and all these things.

This is very effective.

Then we go to Amazon and we have ECS, and there's none of that.

So we get all these snowflake infrastructures built in every organization to spec.

And then every company, every time you come into a new company, at least for us as contractors, no two environments look the same.

They're using the same tool stack.

But there's too many degrees of variation, and I don't like that.

So this is where I think that part of the success of Terraform has been that the language isn't that powerful and that you are constrained in some of these things.

And then the concept of modules is that registry component, which allows for tremendous reusability across organizations.

And that's what I'm all for.

And that's like our whole mission statement at Cloud Posse, to build reusable infrastructure across organizations that's consistent and reliable.

So back to the CDK question, and the answer that I gave the customer was this.

Let's do this.

Let's continue to roll out Terraform for all the foundational infrastructure, the stuff that doesn't change that much, the stuff that's highly reusable across organizations.

And then let's consider letting your developers use CDK for the last layer of your infrastructure.

What I'm talking about there.

And I'm not sure at what point you joined.

But in the very beginning of the call, I talked about what I always talk about, which are the four layers of infrastructure.

Layer 1 is foundational infrastructure.

Layer 2 is your platform.

Layer 3 are your shared services.

Layer 4 are your applications.

Your applications, go for it.

Go nuts, you may use CloudFormation, you may use the Serverless Framework.

If somebody is using the Serverless Framework, which is purpose built for doing Lambdas and providing the structure, the rails, but for Lambdas, use it.

I'm not going to say because we use Terraform in this company, you're not going to be able to use Serverless.

That's not the right answer.

So the answer is that's going to depend on where you're operating.

Yeah, I really like that four-layer model.

I missed that from the beginning of the call, but that really makes a lot of sense, because you want to have your foundations a little bit more rigid, you don't want to have that mush that you described earlier.

And that's where I think at a lower level the tight constructs that Terraform gives you, the high opinionation, I should say, make sense, because you can only do so much.

And moreover, it used to be with Terraform, you know, you'd have terraform plan, and then terraform apply could be quite different.

But I think this equipment has become much more mature at this point.

Yeah And they and they really do a good job predicting when they're going to destroy your shit.

Yeah And yeah.

And they added just enough more functionality to HCL to make it less painful.

Which I think is going to quell some of the arguments around Turing completeness.

And then the other thing I wanted to say related to that is, like, the problem we had pre-HCL2 all the time was "count cannot be computed".

That was the bane of our existence.

And one of the top one of our top linked articles in our documentation was like, all the reasons why count cannot be computed.

Now that's almost we don't see it as much anymore.

So I'm a lot happier with that.

The only other thing I was going to add and I'm not sure I'm 100% on this anymore.

I was...

Well, I wasn't 100.

So I was maybe 50, 60% before.

Now maybe 30, 40, but I was wondering, like, maybe HCL is more of like a CSS language and you need something like Sass on top of it, to give a better developer experience.

But for all the reasons I mentioned about CDK, my concern is that we would get back into this problem of non-reusable, vendor-locked kind of solutions, and unless it comes from HashiCorp, you run the risk of running afoul of kind of the vision they see for the product.

Also, Alex Siegmund shared in the Zoom chat.

Alex, can you post it to the SweetOps office-hours channel as well?

Yeah, this is the...

This is the talk that Anton Babenko did at FOSDEM, and the recording has been posted, and he just posted it on LinkedIn.

I'll look it up after this call and share it.

I do think actually though boy, this has been a long conversation today.

I think we're already at the end here.

Are there any last parting questions.

No more just to thank you.

Thanks for calling me out earlier.

And then taking the whole hour to talk about Terraform.

I appreciate that.

Well, that's great.

I really enjoyed today's session.

As always.

So let's see, I'm going to just wrap this up here with the standard spiel.

All right, everyone looks like we reached the end of the hour.

That about wraps things up.

Remember to register for our weekly office hours if you haven't already, go to cloudposse.com/office-hours.

Again, that's cloudposse.com/office-hours.

Thanks again, everyone for sharing your questions.

I always get a lot out of this, and I hope you learned something from it.

A recording of this call will be posted to the office hours channel and syndicated to our podcast at podcast.cloudposse.com.

So see you guys next week.

Same place same time.

Thanks a lot.

Thank you, sir.