Here's the recording from our DevOps “Office Hours” session on 2019-12-18.

We hold public “Office Hours” every Wednesday at 11:30am PST to answer questions on all things DevOps/Terraform/Kubernetes/CICD related.

These “lunch & learn” style sessions are totally free and really just an opportunity to talk shop, ask questions and get answers.

Register here: cloudposse.com/office-hours

Basically, these sessions are an opportunity to get a free weekly consultation with Cloud Posse where you can literally “ask me anything” (AMA). Since we're all engineers, this also helps us better understand the challenges our users have so we can better focus on solving the real problems you have and address the problems/gaps in our tools.

Machine Generated Transcript

That's me.

That's right.

You know these days there was pride is very private.

Conservatives want to really make it clear you know what's going on.

All right, everyone.

Let's get this show started.

Welcome to Office hours today.

It's December 2019 and my name is Eric Osterman.

I'll be leading the conversation.

I'm the CEO and founder of Cloud Posse. We're a DevOps accelerator. We help companies own their infrastructure in record time by building it for you and showing you the ropes.

For those of you new to the call the format of this call is very informal.

My goal is to get your questions answered.

Feel free to unmute yourself at any time if you want to jump in and participate.

We host these calls every week. We'll automatically post a video recording of this session to the office hours channel as well as follow up with an email.

So you can share it with your team.

I've been slacking on those emails lately though.

So ping me if you don't get the message. If you want to share something private, just ask.

And we can temporarily suspend the recording.

With that said, let's kick this off.

I have prepared some talking points as usual.

These are things that have come up throughout the week.

We don't have to cover these things.

These are just if there are not enough questions to get answered today.

So first thing is that Martin on our team, also in Germany, shared a pretty cool little module he's been working on, which is how you can deploy a bastion on AWS that has zero exposed ports to the outside world.

And you can do this using the new features of the SSM Session Manager for shell.

Also in other news, the NGINX headquarters in Moscow were raided. Kind of interesting drama in our industry to unfold 15 years after the company was started.

Istio plans to deprecate Helm-based installations.

I'm really not liking that.

I hope this is not a trend.

This makes me think of the Java installer we've had to put up with for a couple of decades here.

And lastly, I want to cover something that we briefly touched on last week with the announcement that, you know, the official Helm chart repositories are going away.

What is an alternative pattern that you can use?

That doesn't mean you have to host your own chart registry.

And I want to talk about that too.

But before we cover this in more detail.

Anybody have any questions, we can get it beyond Terraform Kubernetes DevOps and general job search.

Anything interesting or an idea.

This is Project Adam cruise towards the end.

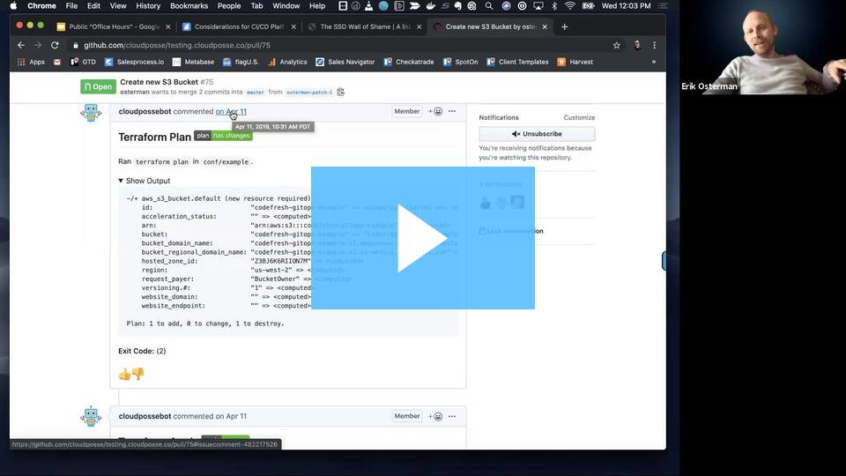

I'd like to talk a little bit about the pull request process and how to get bug fixes and changes contributed to some of the Cloud Posse projects.

OK Yeah, it sure will.

We can jump in and talk about that right away.

First thing.

Have you already opened something in your app.

Are you frustrated that we aren't moving fast enough?

That happens too sometimes because we had a lot of freedom pull requests.

Yeah, I have.

I have two open right now.

And I'm just kind of curious what the expectations are.

For how long it should take to get reviewed or you know how long.

I should be patient and don't feel bashful pinging us in Slack to prioritize.

It's kind of like if we follow.

Sometimes the path of least resistance.

So if you nag us on the slack team.

I'll have Andre or myself or you or one of the guys look over your request and we'll try and expedite it.

So for starters can you post your PR to the office hours channel and I'll call on call attention to it.

Absolutely You can review that one with a one page deal.

If anybody afterwards wants to help me troubleshoot something: I'm doing a GitLab install on a Kubernetes cluster, and cert-manager is giving me a pain in the ass.

So I can send you guys some cookies if you want to talk about it later anyway.

OK, I read an article yesterday regarding Alphabet's move towards trying to be number one or number two in the cloud space.

Yeah with their timeline.

I seem to actually have a response that they may end up just putting it all together.

I don't think it was good PR for Google.

Now on the iCloud product.

Yeah, it's great.

They want to be number one or number two.

But Google has a horrible track record of it.

And thank you for bringing this up.

This should totally be a talking point.

But yeah Google has a horrible track record as it comes to pulling out of products.

We were using Google Hire, for example, and less than a year after we signed up and got everything in there.

They pulled the plug on that like so many other things.

So I would not put it past them to just say, hey, we're exiting the cloud business.

Sorry, we didn't become number one or two.

We only want to be number one or two.

That's your fault. You should have made us number one or two.

Yeah like to just pull out of GCP with them.

I don't know.

But I mean so.

So very distant.

I said I read the article or something you said like their cloud business maybe generate somewhere in the $8 billion dollar per year range.

When Amazon does that per quarter something like that.

I mean, yeah, there's a big gap.

But it's still.

I mean, does that make it not even worth it for them.

That's amazing.

Yeah because I see what I see where Google does some things very well and AWS does some things very well as well.

But it's still a part of the market.

And we can actually grow it was they can actually do other things even better than Amazon.

I think what Amazon has been doing really is like the Facebook model that be looking at trends to what people are doing.

And then build a service around it.

And then they push that out as a repeat each time.

Yeah, I would love to see the Amazon formula.

I bet they have something pretty well-defined internally about how they identify assess scope ballot build requirements prototype.

I mean, it's a machine inside of Amazon how they're pumping out these services.

Yeah, that's your point, I mean, I think a lot of certain Google services.

I'm envious of the UX is a lot better.

The performance is a lot faster.

I mean GKE compared to cars.

I mean night and day difference in terms of usability and power and updates.

Yeah, I must say even like after filing a couple GTM b bucks that was super quick to fix them like I was using Google functions on the product.

I fixed the back line.

I don't know two weeks or something that wasn't really even important.

So it's like this relationship between it was not even a big company that was working on a school project like this that's I fix it in two weeks.

And I just feel like the relationship is a lot better between Google and its customers, even though a lot of people say Google sucks at customer engagement.

I think they've I think they've turned a corner on that.

I mean, I'm a G Suite user and a Google Fi customer.

Their mobile offering here in the US.

And it's the best experience of any product that I have.

Also their Google Wi-Fi.

I use that as well.

And that's been a phenomenal experience working with their customer support.

I talked to somebody within about five minutes of calling up their technical.

They know what they're talking about. Just not having to navigate 25 menu options and sit on hold for 45, 50 minutes.

That's what's great.

Yeah, I had that experience just recently with a guy at Codefresh.

And I talked to a good experience.

It sounds like.

Yeah, you know, the get set.

And you know you get you get shown a demo and you know sometimes it's somebody who's just been trained how to do the demo and you try to deviate at all.

And they're like, I don't know.

I don't know what I'm doing.

But this guy was like, you know, all kinds of stuff you know.

You know and showed me.

I was asking all kinds of questions.

And he was going around and showing me different things.

And we were totally off script and I really appreciated that.

You know this week, we had a technical guy in front of me that it's very cool.

Do you know who it was by any chance.

I do come back to that.

Oh, yeah.

I'm glad those are a positive.

This is place I can.

If anybody is interested.

I can stick to how things happen at Google internally.

Having worked there for four years.

They're extremely good at doing stuff in scale.

In other words, things that they do in scale.

They take very seriously.

So if what you have if you need them to do something that might be somehow personal or unique to you you're screwed.

Just never going to happen.

Yeah, but if you need.

If you need something that is, in a sense part of their existing workflow you know they stay up late at night trying to make that happen as quickly as possible because they want to reduce the friction.

Yeah Yeah.

So basically, if they see that this affects 10 million people, then they will go do it.

Yes And I'll talk a little bit about what they can get away with that.

The other thing is that they have two different.

There's basically like two different categories of services in terms of support in terms of internal IQ support or SRT support.

There's the stuff that's funded or I should say the stuff that has what they call a revenue stream.

And so there's a billing ID for it.

And those things, then get different tiers of support internally depending on how much they go for.

And then there's everything else.

So basically, if in their internal accounting, they don't have a revenue stream associated with a service.

It could disappear at any minute and they wouldn't give a rat's ass.

Nobody would try.

That's harsh.

It's very harsh.

Yeah, it's totally.

Yeah And the other thing is the way they can get away with it is they do a lot of blue green deployments.

And that's basically something that I would love to bring up in this group at some point, maybe not today, but how we can go about establishing quality gates and running canary mills.

So at any given time, there will be multiple versions of Gmail running at once.

For example, and the versions might be like unbelievably similar to each other, but they then start to collect telemetry.

You know they compare the metrics of the new whatever.

Well, this is what feature flagging is for, right? Like LaunchDarkly or Flagr or Flipt. Except, well, yeah, except it's more about having multiple.

In other words feature flagging.

Yes, except you're comparing the flagged instances, the flagged pods, with the non-flagged pods, and they're all running simultaneously.

Right. The beauty with things like Flagr is that the telemetry is built in.

So you can see how that's performing for you.

And then you can tie that in with Prometheus and all your other reporting dashboards.

So I guess what you're monitoring or measuring is going to depend on the product, but Yeah, that because the tooling for that is there.

I guess there's no good demos for how you should do it.
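
As a rough illustration of the comparison described above (flagged pods versus non-flagged pods running side by side), here is a minimal Python sketch. The class name, the thresholds and the idea of comparing error rates specifically are assumptions made for the example; nothing here reflects how Google, Flagr or Prometheus actually implement it.

```python
from dataclasses import dataclass


@dataclass
class PodGroupStats:
    """Request counters collected from one group of pods (hypothetical shape)."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        # Avoid dividing by zero when a group has served no traffic yet.
        return self.errors / self.requests if self.requests else 0.0


def canary_is_healthy(
    baseline: PodGroupStats,
    canary: PodGroupStats,
    max_extra_error_rate: float = 0.01,  # assumed tolerance, tune per product
    min_requests: int = 100,             # assumed minimum sample size
) -> bool:
    """Return True when the canary's error rate is close enough to the baseline's."""
    if canary.requests < min_requests:
        # Not enough telemetry yet to say anything about the new version.
        return False
    return canary.error_rate - baseline.error_rate <= max_extra_error_rate


baseline = PodGroupStats(requests=10_000, errors=50)
good_canary = PodGroupStats(requests=500, errors=3)
bad_canary = PodGroupStats(requests=500, errors=40)
```

With these numbers, `canary_is_healthy(baseline, good_canary)` passes and `canary_is_healthy(baseline, bad_canary)` fails. What you actually measure is going to depend on the product.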

Yeah And then I think the ultimate the ultimate secret.

I mean, it's not really a secret.

It's just that they have the discipline of making sure that whenever they design something they include the diagnostic criteria, as my guide.

So if you're writing a piece of software.

And it's going to do something, then something in the world should change as a result of that piece of software.

That's a good point.

Viner you have to say this is going to affect these metrics.

And these are the thresholds that are considered good.

And then so like zero might be bad.

For example, let's say you want to tell people in the spec 0 is bad because then that automatically gets plugged into all the things that could be bad.
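
One way to picture "the spec says which metrics change and what counts as good" is the small Python sketch below. The metric names and thresholds are hypothetical, and this is only an illustration of the discipline being described, not any team's actual tooling.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class MetricExpectation:
    """One line of a design spec: a metric and the range considered good."""
    name: str
    min_good: Optional[float] = None  # values below this are bad
    max_good: Optional[float] = None  # values above this are bad

    def is_good(self, value: float) -> bool:
        if self.min_good is not None and value < self.min_good:
            return False
        if self.max_good is not None and value > self.max_good:
            return False
        return True


def evaluate(spec: List[MetricExpectation], observed: Dict[str, float]) -> Dict[str, bool]:
    """Check observed values against the spec; a missing metric counts as bad."""
    return {
        m.name: m.name in observed and m.is_good(observed[m.name])
        for m in spec
    }


# Hypothetical spec: zero emails per hour is bad, and so is slow p95 latency.
spec = [
    MetricExpectation("emails_sent_per_hour", min_good=1),
    MetricExpectation("p95_latency_ms", max_good=300),
]
result = evaluate(spec, {"emails_sent_per_hour": 0, "p95_latency_ms": 120})
```

Here `result` flags `emails_sent_per_hour` as bad because zero was declared bad up front, which is the point of writing the thresholds down before deploying.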

Yeah, I totally agree in theory with that.

I think few teams have the rigor to be able to actually carry that out.

And the and the foundation to truly report on that, well ability you're saying I like the idea that before you make a decision, you decide up front kind of what are some of your expectations that this is going to do.

And then by deploying it and made seeing that change happen.

Did it meet or exceed or not at all or did it meet or exceed your expectations or was something in kind of a similar vein that my team has been going through is that we started saying because we get people that say, hey, can you create this little app for us you know as like internal research and development type thing.

But you know we don't want you to spend a lot of time and money on it.

So don't do a pipeline don't do any tests just do it.

And so what we started saying was no if you don't want a pipeline if you don't want any tests.

It's not important enough for you.

We're not going to do it.

You so we have this new philosophy that you know everything.

It's pipeline.

Yeah nothing doesn't get a pipeline.

Pipeline driven development.

Yeah And it's so the this video that I watched from Uncle Bob.

Everybody know Uncle Bob, Bob Martin?

He's one of the founders of agile agile veterans.

He did a video and he started talking about how you can't go to your business and ask, can I write tests.

Can I build a pipeline.

Because what you're doing there is you're trying to shift the risk to them.

Yeah, they don't know that stuff you know, that's you.

You know that stuff.

It's your professional you know risk to take.

So he said they're just always going to say no, you know.

So you have to shift that mindset.

And you take on the risk of you know saying, I need to write to I will write tests you know as part of my professional ethics you know or whatever for this is the way I operate.

Yeah, that was really powerful.

That is powerful.

Thank you for sharing that.

I like that you have a link to the video shot and please share that.

Yeah, I will share it.

And just to add some context in context.

But add some reaction to what you're saying because we all know and I've been there.

How if you didn't do that and you go along with doing it.

Next thing you know, you get a notification.

So we're going live with the service next week.

And now it's your responsibility to manage the uptime yet none of the legwork was done to make sure that it was a reliable service from the get go.

So you called.

So Anybody have any.

Has anybody, first of all, played around with the Systems Manager shell? We haven't done it.

We use mostly Teleport.

So I'm curious if anybody's using the Systems Manager shell, what their experience has been.

I have not.

But I do want to hear a little bit more about Teleport, how you use it and how you manage the authentication.

Gotcha Yeah.

We can certainly talk about that a little bit.

I have somewhere in my work a my if you to go to a climate policy startups.

So here, I think under screenshots here.

I have some screenshots of what the architecture looks like.

For Teleport, which is helpful because it is a legit, like, enterprise-grade application in terms of complexity and architecture.

Not because they're trying to be difficult. But because it's solving a difficult problem.

So we just gotta let this load here for a second.

It's going to be a little slower since I'm logged in.

And this is not cached session.

In the meantime, for everyone else, Teleport is a product by a company called Gravitational.

They actually just raised like 30 million.

So they're doing pretty well. Go to github.com/gravitational/teleport.

It's an open source, or open core, project.

Most of the functionality is in the open source version.

But things like single sign-on with Okta or anything other than GitHub require a license.

So let's see this loaded is screenshots.

So teleport.

So yeah.

So just like the cool thing with teleport is you can replay sessions like with full on YouTube style replay so that's what you see right here.

This is a replay of the session.

But the architecture.

Here we go.

See this is big enough can you guys see this.

Maybe we need to make the bigger.

I think you were good before.

All right.

So there are these three components.

Basically the auth service, the proxy service.

And then the node service.

So the node service is this service over here.

And what you do is you deploy on all of your servers.

You deploy this node service and that kind of replaces SSH.

So technically it can work together with OpenSSH or it can replace OpenSSH.

Then you have the proxy service.

So the proxy service here is deployed as you can see multiple times.

However, it works like a tiered architecture.

So basically, the proxy service is your modern-day bastion in Teleport. You expose one of your proxies like this, and you set that behind an ELB with TLS and your ACM certs, like tell that I can't 10x that I own in this case.

And that connects you.

So when you connect publicly, either using the command line tool, the CLI tool called tsh, or over the web like this, you're actually connecting to the proxy service here.

Then there's that.

So then you have say your clusters.

And you have multiple clusters.

And this would be an example of one cluster here.

But let's say you have five or 10 or whatever clusters. All of these deploy the same stack of services: they have an auth service, they have the proxy service.

And then they have the node service running on all the nodes. You typically run like one or two proxy services for HA, one or two auth services for HA in each cluster, and then a node service on each node.

So what's going on here is that when these services come online the proxy service comes online and tunnels out of your cluster to your centralized proxy service.

This ensures that all of your clusters don't need to be publicly accessible because they tunnel out through the firewall.

Then you have your auth service.

So it uses PKI infrastructure, where each auth service has its own certificate that's been signed by the central CA, and then that establishes the trust.

So that this auth service here can handle issuing the short-lived certificates for SSH clients.

So let's see here.

I know.

So I know enough to be dangerous.

I'm not deeply technical on Teleport because it is a sophisticated product.

Other things to point out here is that each service here writes to a Dynamo table.

It's events.

So you can have an event stream of what's going on there.

And then you can tie that in with Lambdas, like Lambdas that send Slack notifications, and then all the sessions.

These are your TTY sessions.

Those shipped out to an encrypted S3 bucket.

So a lot of companies have tried SSH logging, and they will send those logs to a service like Sumo Logic, not to single anyone out here.

And that's cool from, like, one perspective: discoverability, having it all in one place.

But it's not really effective if people are using TTY sessions, like going into vim or using top or running anything that does, like, ANSI codes to reset the cursor position and all that stuff, because you're not going to see what's going on when you look in those regular log files.

That's why you want YouTube-style playback, and that's why they send a binary log of the session.

What's happening to this encrypted S3 bucket.
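
To make the point about ANSI codes concrete, here is a small Python sketch. The captured bytes are made up for the example (a top-like program redrawing one line), and the regular expression only covers CSI-style escape sequences; it is an illustration, not how Teleport records sessions.

```python
import re

# Matches CSI escape sequences: ESC [ <parameter bytes> <intermediate bytes> <final byte>.
ANSI_CSI = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]")


def strip_ansi(raw: str) -> str:
    """Remove CSI escape sequences, as a plain-text log shipper might."""
    return ANSI_CSI.sub("", raw)


# Hypothetical capture: clear the screen, home the cursor, print a value,
# then home the cursor again and overwrite the same spot with a new value.
raw_log = (
    "\x1b[2J\x1b[H" + "load: 0.42"
    + "\x1b[H" + "load: 0.97"
)

flattened = strip_ansi(raw_log)
```

On a real terminal the user only ever saw one line being updated in place. In the flattened text log, `flattened` is `load: 0.42load: 0.97`: the screen updates run together and the cursor positioning is lost, which is why a replayable recording of the session is more useful than a text log.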

So deploying this.

So there's the way we've done it and the official way to deploy Teleport, and there are pros and cons with both strategies.

So let me talk about the official way of deploying teleport.

So the official way of deploying teleport is that you should not deploy it inside of Kubernetes.

It should be deployed at a layer below your container management platform.

So basically on the host.

And that means like deploying it.

So it runs under systemd.

And the reason for that is that this is how you triage issues that could be even affecting Kubernetes itself.

So deploying it at a layer underneath Kubernetes gives you the escape hatch you need to triage things going wrong with your cluster.

For example.

The downside is that it introduces more traditional config management strategies, or a dependency on that, or you need to roll your own images with Packer or something that have those binaries built in.

We've kind of taken a compromise on this, where at Cloud Posse we deploy this as a Helm chart, and that allows us to use the same pipelines and tooling that we have for everything else we do.

And we deploy the auth service that way, the proxy service that way.

Using Helm charts.

And that also means that Kubernetes can manage the uptime and monitoring of those services and means we can leverage Prometheus with all the built in monitoring we already have in Kubernetes without adding anything custom.

So there's a lot of other benefits to deploying this.

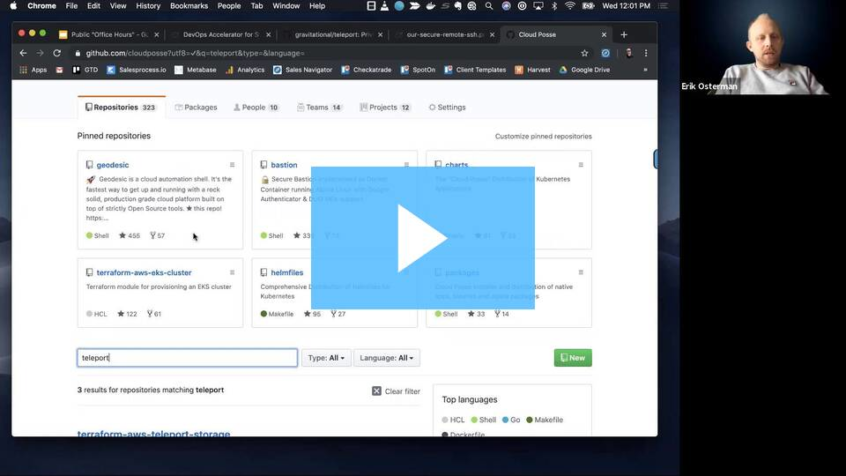

Also, if you go to Cloud Posse's GitHub.

So github.com/cloudposse/helmfiles, you will find our helmfile deploying Teleport under Kubernetes.

But keep in mind that Teleport, like so many other things, doesn't just run self-contained under Kubernetes. It has backing services that it needs.

And that's why if you go to GitHub, Cloud Posse, and search for the keyword teleport under our repos, you'll find the Terraform module that has the backing services for Teleport.

So Yeah.

This is the module that you might be interested in if you're going to deploy this.

So this deploys the S3 bucket following best practices in the DynamoDB table following best practices.

So that makes everything else capable of being deployed basically inside of Kubernetes.

Let's see what else. Our Docker image.

Don't use this Docker image.

It's not been updated.

As you can see share this to.

Were there any other specific things you wanted to see.

So that was just a high-level overview.

I can go deeper or more technical if there's something in particular, you were hoping to see on that.

So you would major one from the start.

Yes Yes.

Yes Yes.

OK Cool.

So yeah, we used that. We deployed with the enterprise offering. The enterprise offering enables G Suite integration, for example, or Okta integration, and then you map, then you use.

Let's see here.

How easy this is to show.

If we go to.

So we have our own charts for a teleport that we manage.

These are different from the official charts.

Jupiter there is more than that yet teleport enterprise off teleport enterprise proxy.

So in here, you can see that we provide some example.

So I'm making the assumption that one side provide access to a user.

I can also restrict this restrict them to specific resources.

Yeah. So you map, basically, the role that is passed through SAML or SSO.

So when they authenticate it passes the role.

And then you can map that role to a role inside a teleport.

You can configure all the roles you want.

So then teleport you can configure like that.

This role allows root access to this cluster, or this role allows root access to all clusters.

Now, you couldn't make it more fine-grained, like read-only, because read-only is a concept that only exists inside of the operating system itself.

And SSH doesn't govern that.
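
The mapping described here (a role arrives from the identity provider, gets mapped to a Teleport role, and that role grants a login on some clusters) can be pictured with the Python sketch below. All role names, cluster names and the data layout are hypothetical; this is not Teleport's configuration format, just the shape of the idea.

```python
from typing import Dict, Iterable, Set

# Hypothetical mapping from identity-provider roles to Teleport roles.
IDP_TO_TELEPORT_ROLE: Dict[str, str] = {
    "engineering-admins": "root-all-clusters",
    "staging-devs": "root-staging",
}

# Hypothetical Teleport roles: which logins they allow, on which clusters.
TELEPORT_ROLES: Dict[str, Dict[str, Set[str]]] = {
    "root-all-clusters": {"logins": {"root"}, "clusters": {"*"}},
    "root-staging": {"logins": {"root"}, "clusters": {"staging"}},
}


def allowed_logins(idp_roles: Iterable[str], cluster: str) -> Set[str]:
    """Return the OS logins a user may use on a cluster, given their IdP roles."""
    logins: Set[str] = set()
    for idp_role in idp_roles:
        teleport_role = IDP_TO_TELEPORT_ROLE.get(idp_role)
        if teleport_role is None:
            continue  # unmapped IdP roles grant nothing
        role = TELEPORT_ROLES[teleport_role]
        if "*" in role["clusters"] or cluster in role["clusters"]:
            logins |= role["logins"]
    return logins
```

For example, `allowed_logins(["staging-devs"], "prod")` is empty, while `allowed_logins(["engineering-admins"], "prod")` contains `root`.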

So you can control what it.

This is h account, they can.

I'm trying to remember C I have not been doing the deployment of teleport.

This is a Jeremy on our team.

He is the expert itself.

So I'm going to have to plead ignorance here on how that role mapping is setup.

I believe it's deployed through a config map somewhere.

Here the files would that be helpful.

All right.

Have you given more thought how you plan to deploy teleport.

Not yet.

I'm just tired.

OK, here we go.

So we're deploying it with helmfile.

And then we define these templates here, which are the configuration formats of the chart was similar to t descriptor.

Guess we don't have any examples of those in the public thing here.

Yeah Sorry.

No, we don't have a well documented explanation of how that works right now.

But if Yeah, I can that name Jeremy for some more information if it comes up.

Cool any other questions.

Sorry Dale I can answer that question precisely to get you.

Many other questions.

I mean, that was it regarding that.

Yeah or anyone else.

Any questions.

See you.

What were some other talking points.

Anyone have any commentary on the whole NGINX raid?

That happened in Moscow.

They sent out an email.

I heard it thing kind of worked out OK.

Yeah, good.

Yeah, there was an email that Nginx sent out to all their customers or something.

OK Yeah.

OK That's cool.

Yeah, I got to look that up, I'm sure they were like full disclosure.

This is what happened.

You know.

But nobody was arrested.

Bubble blah.

OK So nobody was arrested.

I'm just thinking that in the original reporting it said they were brought in, like, the founders of NGINX were brought in.

That's what it said in the original.

But you know maybe that was just hearsay or secondhand and not confirmed now.

I found it along the tweet creflo given how much of the internet is underpinned by this.

I was worried about it.

I'll post a tweet that I found that posts a screenshot of the email.

OK, "now we always strive to be transparent with you, our valued customers, and we're committed to keeping you updated."

OK enforcement.

All right.

Yes, they were just brought in for questioning the founders.

They were not technically arrested.

They were brought in for intimidation.

All right.

Could something like this happen.

Yes What was that code.

Something like this happened in the US like because of copyright infringement.

People get arrested.

I mean, if they want to they could do whatever the hell they want.

You know what.

What did happen in the US.

No probably not.

But the Patriot Act and stuff.

I mean, they could lock you away.

OK The good thing.

Again And yeah.

And if it's a Hollywood movie they might bust down your door with a SWAT team because you might be a violent offender or something.

I don't know.

I mean, this whole thing reminded me of The Pirate Bay raid in 2008.

I think it was when they raided the data center.

Was it later.

I think 2007 2008.

So yeah, I mean, it's a you it could happen.

I think.

Like people could get arrested for it.

Remember Kim Dotcom and the raid on his mansion in New Zealand.

That was pretty insane.

So if one paramilitary whatever planning Margaret Mead did the same thing with his mom as well.

They actually believed.

Oh, they did Kim Dotcom mom.

Yeah well made it personal.

Well, so, are any of you using Istio? We've started using it with a customer.

That project was delayed because we kept on waiting for the Helm chart to become more stable.

And we also saw that they started a new Istio installer for Helm in a project repo.

But then Ryan in our community called out this thing to my attention, which kind of bummed me out.

I don't know if it's true or not.

They said that they're going to be deprecating the Helm installation approach.

So I get it.

As a developer kind of why they're doing it you know they're frustrated with some of those limitations.

But I don't like it in terms of the trend at this.

This makes me feel like the modern day Java installer where you can't just apt get install the regular Java you have to go sign up.

Give your email, download a jar, curl it or pipe it into bash, agree to some terms of service.

All this other stuff.

Is this the future is you.

I hope not.

Well, that's not even the case anymore.

I mean, I could just go say, you know, brew install openjdk.

No Yeah well good.

OpenJDK.

Right, but that's not the Sun version.

Well, so I'm hoping that there'll be an about face on this decision because I do like installing everything in a consistent manner using it.

I don't have a huge problem with it.

If it's providing other like if to do it with helm required more than just helm install it still if it required more than that, then it makes sense to wrap it with something so that it can just be you know Estela seitel installer.

The CEO.

Right I mean, you want to make it as easy as possible for your users.

Yes, yes, and yes, you do want to make it as easy as possible for your users.

But sometimes these are at odds with each other.

We had this conversation last week about Helm, and I think you actually brought up that you are disappointed that a lot of the Helm charts are insecure by design or insecure out of the box.

And I think their goal there is to make them as easy to install as possible.

That's at odds with making them as operationalized and productionized as possible.

Oh, yeah.

I mean, the people behind Istio in particular tend to buck the norms anyway, so, like, another perfect example is you will not find an Istio channel in the Kubernetes Slack.

They have their own.

So that's just the way they operate.

At the same time, Fluentd and Linkerd and all the others, I mean, that's an Istio-specific issue.

Yeah, I want to hear from them.

For me, the only issues that I see is like how do you make this replicated all across multiple clusters.

Like for example, you have a Def Con so you want to cancel this show on it.

I mean, calling an istioctl command works.

Is it like some way to go for now.

Exactly. I mean, it's basically like using the AWS CLI command, right?

And we all know.

But we don't use the AWS CLI to manage or define our infrastructure, right?

That's why we have tools like Terraform.

That's why we have tools like Helm.

So how do we I guess I'll be OK with it.

But then they need to provide a declarative way for me to describe the state of my Istio, you know, if they can make it like istioctl apply and istioctl destroy. Yeah, then I guess I'll be OK with it.

That said, I just don't feel right.

It doesn't feel right.

Yeah something doesn't feel right.

And so you know, we've been a big proponent of helmfile and helmfile is a terrible one.

But that's why I'm so happy today that there's a helmfile provider.

Now that's helping us integrate helmfile.

Into our Terraform workflow.

So does that mean we're going to need to have an Istio provider for Terraform, maybe, or something like that.

I mean, I would like to know the reasoning was.

I did it.

I mean, it's something that really is like I cannot solve flip with home and home sites.

We don't want to solve this case.

I mean, the only thing that I could think about is the CRDs. That's kind of an issue.

Yes, CRDs are kind of poorly supported.

Under Helm, due to, and if somebody could explain this better than me, go for it.

It's basically like the race condition between the CRD getting deployed, the containers coming online, and waiting for those resources to be functional isn't supported by Helm.

So you get these errors that like this resource is doesn't exist.

Well, it's really just in the process being created.

I think that's the gist of it.

I definitely encountered this problem.

We the way we worked around it is.

We just, I think, use a hook, you know, helmfile hooks, with, I think, calling kubectl apply, right?

Yeah, there's just some issues when you try to have a resource of a specific type.

And that type doesn't exist yet or it's in the process of being registered.
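
A generic way to work around this kind of race is to wait until the custom type is registered before applying resources of that type. The Python sketch below shows only the waiting logic; the `ready` callable is a placeholder you would supply yourself (for instance, something that shells out to `kubectl get crd <name>` and checks the exit code), and the timeout values are assumptions.

```python
import time
from typing import Callable


def wait_until(
    ready: Callable[[], bool],
    timeout_s: float = 60.0,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll `ready` until it returns True or the timeout expires.

    Returns True if the condition was met, False on timeout.
    """
    deadline = clock() + timeout_s
    while True:
        if ready():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


# Stand-in for "is the CRD registered yet?": becomes true on the third check.
checks = {"count": 0}


def fake_crd_registered() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3
```

In a hook, you would call `wait_until(...)` with a real check first and only then apply the resources that depend on the new type.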

There's something going on.

And it's annoying, especially with cert-manager.

Yeah. And they still need to have their custom CRDs these days.

Yes. So I know this affects mumoshu as well.

And I know he's been contributing some PRs to fix some of these problems.

But I don't know if the root causes have been addressed.

I thought I saw something in a changelog a while ago, a few days ago.

But that.

Oh, really cool.

See if I can find it.

Yeah be sure to officers.

If you see, there's istioctl manifest apply, which is what you're going to want.

Oh, nice.

OK. So they are doing something along those lines.

Yeah, they'd be stupid not to.

Cool. Yeah.

Well, one of the last things I wanted to cover briefly: I know many of you are using Helm and could be impacted by the shutdown, or the eventual deprecation, of the official charts repo.

There are a lot of charts out there.

And I don't necessarily want to have to figure out where their chart registry is, or make sure that it works and is up to date.

Very often you'll find the chart registry.

You'll find the chart.

But they haven't pushed the latest package up and you want to use it.

So we've been there.

There are a few of these plugins out there that add git protocol support to Helm.

So you can just use, like, a git:// scheme.

This is the best one, though, that I found, and I forget his non-GitHub name, but he is...

He's in SweetOps as well.

And a helmfile user.

So this project together with helmfile works.

You can literally point to projects anywhere on GitHub.

So let me see if I find it console slash in this repository.

Find an example using it. Yeah.

So here's an example of the chart repository: we're pointing straight to a GitHub repository and pinning it to a commit.

So this combined with helmfile makes it pretty awesome, because you can really deploy from anywhere.
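
To make the idea of pinning a chart to a commit concrete, here is a minimal sketch that builds such a repository URL. The plugin is not named in the recording, so the URL layout below (the `git+https://...@path?ref=...` form used by the helm-git plugin) is an assumption, and the organization, repository, chart directory and commit SHA are all hypothetical.

```python
from urllib.parse import urlencode


def git_chart_repo_url(host, owner, repo, chart_dir, ref):
    """Build a chart repository URL that points into a Git repo at a fixed ref.

    Assumed format (helm-git style): git+https://HOST/OWNER/REPO@CHART_DIR?ref=REF
    """
    query = urlencode({"ref": ref})
    return f"git+https://{host}/{owner}/{repo}@{chart_dir}?{query}"


# Hypothetical organization, repository, chart directory and commit SHA.
url = git_chart_repo_url(
    host="github.com",
    owner="example-org",
    repo="example-charts",
    chart_dir="charts",
    ref="0123456789abcdef0123456789abcdef01234567",
)

# Shape of one entry under `repositories:` in a helmfile.
repository_entry = {"name": "example", "url": url}
print(repository_entry)
```

Pinning `ref` to a full commit SHA rather than a branch name keeps the deployment reproducible even if the upstream branch moves.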

You can also deploy from the local file system, if you want.

Does it work with Helm 3?

Good question.

I haven't tested it yet.

We'll be doing more Helm 3 stuff in our next engagement.

I've been playing with Helm 3 on helmfile.

How is it working for you?

I am in the initial development phase.

So I haven't deployed anything, but it looks like it's working just fine.

I like it.

I've got it working: templating, linting, everything's going fine.

Same here.

Looks great.

OK, perfect.

And you are using helmfile as well now, Andrew?

Yep.

Yeah, so we're working on, we're going to come up with a repeatable, like what we talked about last week.

We're taking the Terraform root modules approach to Helm deployments as well.

So we've been asked to come up with a highly repeatable way to deploy GitLab or Jenkins or SonarQube, you know, just spit them out all over the place.

And each time they get deployed, they need to be fully production ready, you know.

Yeah. And so that's like the root modules approach, where this is our root module, and the root module in this case is going to be a helmfile.

It's coming together.

Have any of you helmfile users kicked the tires on the helmfile provider?

Not yet.

We've been working with it a little bit.

It'll work just fine if you're not using Terraform Cloud.

But if you're using Terraform Cloud, there are some challenges.

The idea is to have everything, from the cluster to the apps running on the cluster, all in Terraform?

So yeah, at least for everything up through your shared services.

Not necessarily using it for application deploys, but using it for things like a GitLab deployment, for example, to use Andrew's example. We'd get to use that to deploy, you know, like maybe cert-manager to the cluster. We define cert-manager,

all that stuff,

in helmfile, because that's how we have it today.

And then use Terraform to call that I know it's just entirely.

It's because of the dependency ordering.

So you can tie it all together more easily.

But yes, it is layers on layers, isn't it.

And I think that could be a good next step; that would easily be something that would build upon those root module helmfiles that we're doing now.

It would just call the helmfile. And we did something...

So I work for a government systems integrator, and so the government has been, for the last year or so, putting out their RFPs with tech challenges, like hands-on style meetings where your team has to go into their office and they sit you all down.

And they hand you a piece of paper with 10 user stories or whatever on it.

And say, OK, you have six hours to go, and they hand you keys to an empty AWS account, and they expect a running app, with full infrastructure, at the end.

So you have to come in with your infrastructure as code and everything all ready to go.

So we built, like what you were talking about, with Terraform and Helmsman.

And it worked pretty well.

It was not very customizable; it would just do its one thing, and it did it fine.

You know.

But like if you decided, well, I don't need Jenkins.

I'm going to use something else instead. Too bad, you're getting Jenkins.

So the work that we're doing now is kind of expanding on that, coming up with ways to say, all right.

You want a Kubernetes cluster; where do you want it?

You want it in AWS, you want it in Azure, you know, and then, OK.

You've got your Kubernetes cluster; what do you want in your Kubernetes cluster? You want, you know, GitLab, you want Jenkins, do you want cert-manager, blah blah, you know. And that's kind of where...

I have to ask you a little bit more about that project later on.

And I have a question on that as well.

I think more in general, with using RBAC with AWS and IAM: have you guys done that successfully and with ease?

Yeah.

I mean, it's right now.

You know we haven't done anything super fancy with it.

It's, you know, when you stand up EKS,

you can map users or roles to roles inside Kubernetes.

So I can say anyone who has assumed this role in IAM, when they log in to Kubernetes, they are this role in Kubernetes.

So we have a role in IAM called Kubernetes master, and when they log into Kubernetes they get assigned cluster-admin.
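
As a rough sketch of what that mapping looks like, the snippet below builds one `mapRoles` entry of the kind EKS reads from its `aws-auth` ConfigMap. The account ID and role name are hypothetical, and mapping to the `system:masters` group (which Kubernetes binds to `cluster-admin` by default) is an assumption about how the cluster-admin assignment described here could be achieved.

```python
import json


def map_role_entry(account_id, iam_role_name, username, groups):
    """Build one mapRoles entry: IAM role ARN -> Kubernetes username and groups."""
    return {
        "rolearn": f"arn:aws:iam::{account_id}:role/{iam_role_name}",
        "username": username,
        "groups": list(groups),
    }


# Hypothetical account ID and role name.
entry = map_role_entry(
    account_id="111122223333",
    iam_role_name="kubernetes-master",
    username="kubernetes-master",
    groups=["system:masters"],
)

# Printed as JSON, which YAML parsers also accept for the mapRoles value.
print(json.dumps([entry], indent=2))
```

Only the role is mapped here; who may assume that role is controlled separately on the IAM side.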

Right Yeah.

So it works fine.

Are you just managing everything on the IAM side, not with the aws-auth ConfigMap, or do you also have items in that ConfigMap as well?

Yeah, in the aws-auth ConfigMap, you need to add what the role name is.

But not the users, just the role that you are assuming?

No, just the role.

You don't need.

I mean, you can map users.

But we think that's dumb, like, you know, because you want to map the role.

Yeah. And then later on,

in IAM, you map, you know, who is allowed to assume that role.

So Yeah.

So you can see them.

For example, in a group that actually has that role attached to it or something like that.

Yeah OK.

Yeah but all right.

So do you then have to provide them the kubeconfig with that role assumption?

No, we give them the ability to generate the kubeconfig, so aws eks update-kubeconfig will generate the config with the role associated to that user.

It's a flag.

It's --role-arn.
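
For reference, a minimal sketch of assembling that command so each user can generate their own kubeconfig. The cluster name, role ARN and region are hypothetical; `--name`, `--role-arn` and `--region` are standard options of `aws eks update-kubeconfig`.

```python
import shlex
from typing import List, Optional


def update_kubeconfig_command(
    cluster_name: str, role_arn: str, region: Optional[str] = None
) -> List[str]:
    """Build the AWS CLI call that writes a kubeconfig which assumes role_arn."""
    cmd = [
        "aws", "eks", "update-kubeconfig",
        "--name", cluster_name,
        "--role-arn", role_arn,
    ]
    if region:
        cmd += ["--region", region]
    return cmd


# Hypothetical cluster name, role ARN and region.
cmd = update_kubeconfig_command(
    "example-cluster",
    "arn:aws:iam::111122223333:role/kubernetes-master",
    region="us-west-2",
)
print(" ".join(shlex.quote(part) for part in cmd))
```

Because the kubeconfig is generated locally from the user's own AWS credentials, no shared kubeconfig file has to be passed around.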

Oh oh link it sounds good.

Yes, please.

Yeah, that's where I got a bit hung up with allowing them to just use the end of this while angel.

Yeah, we started out passing the kubeconfig around all over the place and quickly realized that that was a horrible way to do it.

Yeah. Has anyone been using the new Fargate on EKS?

I haven't.

But looks interesting.

I'm not sure what to expect.

But I think we have a Terraform module for it, which prototypes and tests it.

And you can look at that.

There's a lot of limitations to using it.

And I think it's a little bit early probably to use it.

That's more the implementation I like, what GCP has done with their own GKE.

It takes care of a lot of the management headaches, and I can just start my applications.

With AWS, it's like I'm actually building my own house, and there are things you forget about, or some dependencies that just get overlooked, you know.

Oh, yeah.

Because, like, sticking with RBAC,

for example, I can implement it very quickly with GKE without an issue; with AWS it's a little bit more work.

Yeah, everything's just a little harder. Like, with GKE,

there's just a checkbox to have it, right?

I mean, it's that kind of stuff kinship shouldn't link with me.

I appreciate it here in the office hours.

Cool right.

Yeah here's our module and here's the first pull request.

And Andre links to, you know, some of the limitations here.

But just to emphasize what those limitations are.

I just copy and pasted it in here.

So you can kind of see what some of those are.

So it doesn't support network load balancers right now apparently.

And what are some other ones.

Yeah, you can't run DaemonSets, since that doesn't really make sense anymore.

I guess since there's no traditional concept of a node in that sense.

So what have you decided you'd like get a dog.

A deployment.

All of those.

Well, Yeah in 30.

Yeah, if you need to use data to log for data.

It's not for you, right.

It's for the people who are running temporary workloads.

It looks like something you could just run jobs on.

Yeah Yeah.

No like if I were like.

So we run.

We run Jenkins in Kubernetes now.

And we run

ephemeral worker pods. Like, I could easily see us using something like Fargate to run our ephemeral worker pods without having to worry about the underlying cluster, because they don't need any of that stuff.

They don't need a load balancer, you know, they're just compute. It's CPU and RAM, that's all they need.

And it looks like all that

Fargate can provide.

Cool. Well, we're coming up to the end here.

Are there any other questions to get answered before we wrap things up for today?

Well, I'll take that as a no.

Hi, everyone.

Looks like we reached the end of the hour.

And that's going to wrap things up for today.

Thanks for sharing.

I learned a bunch of new things today as usual.

As always, we'll post a recording of this in the office hours channel.

Feel free to share that with your team.

See you guys.

Same time, same place next week.

All right.

See but.