Here's the recording from our DevOps “Office Hours” session on 2020-01-08.

We hold public “Office Hours” every Wednesday at 11:30am PST to answer questions on all things DevOps/Terraform/Kubernetes/CICD related.

These “lunch & learn” style sessions are totally free and really just an opportunity to talk shop, ask questions and get answers.

Register here: cloudposse.com/office-hours

Basically, these sessions are an opportunity to get a free weekly consultation with Cloud Posse where you can literally “ask me anything” (AMA). Since we're all engineers, this also helps us better understand the challenges our users have so we can better focus on solving the real problems you have and address the problems/gaps in our tools.

Machine Generated Transcript

All right, everyone.

Let's get the show started.

Welcome to Office Hours.

It's January 8th, 2020.

Can you believe that?

I just can't get over that it's 2020.

My name is Eric Osterman and I'll be leading the conversation.

I'm the CEO and founder of Cloud Posse. We're a DevOps accelerator: we help startups own their infrastructure in record time by building it for you and then showing you the ropes.

For those of you new to the call the format of this call is very informal.

My goal is to get your questions answered.

Feel free to unmute yourself at any time if you want to jump in and participate.

We host these calls every week. We'll automatically post a video of this recording to the office hours channel, as well as follow up with an email so you can share it with your team.

If you want to share something in private, just ask and we can temporarily suspend the recording.

With that said, let's kick this off.

Here are some talking points I came up with.

We don't have to get to all of them.

It's just things I want to bring up. The first thing is that we now have a syndicated podcast of these Office Hours, which is really cool.

It's so easy to do these days with things like Zapier, which relates to one of the other talking points.

The next thing is we also have the SweetOps job board.

There are a lot of companies hiring that are in our SweetOps community.

Our goal is to bring everyone together, and this falls in line with that.

So if your company is hiring for DevOps or something adjacent to that, let me know and we can post that to the SweetOps job site. It's totally free.

Then, searchable Slack archives.

So if you are in our Slack team, you'll know that we are a free team, so we're limited to the 10,000 messages. But we do an export of that data and post it to the archive, which is archive.sweetops.com, and we've just invested in adding Algolia to our search index for that.

So it's a lot easier to discover the content.

And we'll continue to invest in that and some other things.

The other thing is a public service announcement. I'll go into this in a little bit more detail later, but basically, AWS has announced that the root CA certs for RDS, Aurora, and DocumentDB will expire on the 5th of March.

I expect a little mini Y2K kind of bug there for companies doing cert validation on RDS in March. And then, is Andrew Roth on the call?

He is not. Well, Andrew had asked, so we might push this if he doesn't show up.

He was curious about how we are doing some of our Slack automation using Zapier.

So I can show that.

And, if we have time, how we use Cloudflare as kind of like the Swiss army knife to fuse sites together, inject content, and do all kinds of magic with Workers.

All right.

So with that said, let's open the floor up to anyone.

Anyone have any interesting comments or problems that they're working with?

Hey, everyone.

This is Brandon.

Long time no see it's been a few weeks since I've joined.

Just a quick question for people.

Does anyone have an ASV that they can recommend?

I'm looking at our annual PCI external scans coming up and looking for a recommended ASV.

Anybody have one? Or some people in the PCI community?

I can probably ask some friends if they have a recommendation.

Yeah, just kind of looking for somebody, you know, trusted and decently well-known.

Yeah, which area are you in? Here in Los Angeles?

Well, I'd be happy to make an intro to any ocean.

They've helped out some of our customers and have been very pleased with their services.

It, man.

Brian Neisseria.

I haven't seen you join for a while.

How's it going?

Yeah, I always come to the country in December to spend time with family.

Were you able to get to the bottom of your issues with Kubernetes and the random pod restarts?

I think it's actually the kops worker nodes restarting. Oh, actually, yes, it's the kops worker nodes restarting, correct.

Yeah, still, I can't quite pin it down yet.

Nothing in the logs.

Nothing in the kernel logs.

It's weird, like you'll just see the kubelet logs and the kernel logs, like they'll just go.

And then they'll have like one minute of nothing in the logs, and then they'll just say reboot and then have the new boot on the worker node. Nothing.

And the EC2 system status checks, everything's good.

It's interesting just because, like, we cannot find any logs that show why the reboot happens; we can just see when it happens.

Did you go about implementing those resource limits on the pods?

I did.

Yeah. So now everything on those boxes has resource limits.

Limits and requests.

OK And Yeah.

Especially on memory.

And now, are you tracking how much memory is being consumed on those boxes, and can you see it reaching the upper limit?

So it does reach the upper limit.

Sometimes, per pod.

Well, I haven't quite figured it out, or I haven't quite tuned it perfectly.

We have the historical data on how high a pod can go in memory, but now the question is, at what point do we say this pod should be killed, versus this pod does need to use this amount of memory for the time being and we should leave it up? Because I don't want the limit to be too low, to the point where our clients are running into issues in the app because the pod's getting killed.

Right. You don't want to artificially reduce the amount of memory available to the pods.

On average, it will consume 1 gig of memory.

But historically, the data shows that sometimes these pods can go up to 5 gigs in memory.

Do I cut it off at 5?

Or does them going to 5 mean that something has gone wrong on that pod?

That's a hard one to answer.

Does anyone have any insights on this.

What kind of workload is it?

Yeah, this is just simple IV application.

What kind of data is it handling? Does it spike because users are uploading 5 gigs of data or something?

Yeah, we seem to have like the dependent.

So the application does store things like Excel files, and some of the files are stored in S3, but we store like a modified version of the Excel file in the database.

So there are probably large amounts of data being sent up periodically, but not consistently.

So that will cause the spike in numbers.

I mean, if you haven't already found a correlation in the metrics, then keep tuning the metrics until you find one.

Yes, what I would say is that it's a good strategy.

So going back to what you were saying, though, you don't know where to set that limit.

I don't know what the impact to the business is by following the advice I'm about to give.

That's what you're going to have to decide.

But what I would say is, set it at what seems reasonable on average and not for the peaks.

Allow some pods to crash or get killed by the reaper every now and then, for the purpose of seeing if this addresses the stability of the cluster itself.

If your problems then go away and you aren't killing the servers, well, then at least you've identified the problem.

I think it's better to lose a pod every now and then to memory bursts than to cause a reboot of the entire server.

Yeah, exactly.

Do you have the Kubernetes autoscaler set up?

No, I don't.

But at the same time, these nodes are already massively under scaled, in my opinion.

CPU usage is at 40% and memory usage is at about 50.

So it's like these nodes do have enough resources. My only concern is that when you set the memory limits, now you might be constraining pods from being scheduled.

I guess if the limits are too high, that will constrain them from being scheduled.

Yeah, if the limits are too high and you don't have free resources, because it's actually the requested limit. It's actually allocating, so that is reserved and can't be used by other pods.

That's the request.

Yes, the request, not the limit.

Oh, yeah, I'm conflating the two.

Unless you have a LimitRanger that has a max limit, then the limit shouldn't... I have the requests lower, much lower, and then the limits higher, much higher than the requests. I have the requests set to the average memory usage of a pod.

And then the limits, I have set to the peak.
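As a rough illustration of the approach just described (requests near the average, limits near the observed peak), here is a minimal Python sketch. The function name `suggest_memory_settings`, the `headroom` parameter, and the sample readings are assumptions made up for this example; none of them come from the call.

```python
from statistics import mean


def suggest_memory_settings(samples_mib, headroom=1.0):
    """Return (request, limit) in MiB from historical per-pod memory samples.

    request: roughly the average usage, which is what the scheduler reserves.
    limit:   roughly the observed peak (times optional headroom), which is
             where the container becomes a candidate for an OOM kill.
    """
    if not samples_mib:
        raise ValueError("need at least one sample")
    request = round(mean(samples_mib))
    limit = round(max(samples_mib) * headroom)
    # A limit below the request is invalid, so never return one.
    return request, max(request, limit)


# Hypothetical readings: mostly ~1 GiB with one 5 GiB burst.
samples = [900, 1000, 1100, 1000, 5000]
request, limit = suggest_memory_settings(samples)
print(f"requests.memory={request}Mi limits.memory={limit}Mi")
```

Whether the limit should sit at the historical peak or lower is exactly the judgment call discussed on the call; the sketch only makes the two inputs explicit.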

OK, on the topic of pods, I have a question that I've asked in SweetOps.

I'm curious if anybody has run into this.

I have, you know, 1,800 pods of a specific type running on 60 nodes.

How do you get those pods to be distributed close to evenly on each node?

I feel I've seen something.

Basically you're looking for something that does rebalancing, and I'd have to look that up.

I don't remember off the top of my head.

My issue being, like, I have a pretty large difference in memory requests on some nodes versus others.

Some nodes are up in the 90s, and then some nodes have 40, 50.

So it's like I want to even it out.

I don't think I need to scale up more nodes. I just need those nodes with 90%, 95% memory to give those types of pods to the nodes with 40.

I mean, Kubernetes tries to do that automatically, but for new pods, right?

Not for existing pods, does it?

I don't know.

Also, I mean, by default, it won't kill a pod unless it's told to, you know, if a pod is merrily, happily running.

And, you know, unless you have some other functionality that is going through and saying, I want you to go around and start rescheduling pods.

By default, in Kubernetes, once you schedule a pod and it's there and it's running on a node, it's going to keep running.

Yeah, if that pod dies and gets restarted, there's no guarantee it's going to restart on the same node that it was on before.

So one thing that I'm really interested in, that I haven't tried yet myself but I'm incredibly interested in doing, for two reasons.

Number one is what we're talking about with the efficient bin packing of pods across the nodes.

But the other reason, being the enforcement of your immutable state, is automatically restarting all pods in the cluster, or, you know, whatever pods you have in a whitelist, every x number of hours.

I was in the Ask Me Anything session that Nicolas Chaillan did for the DoD, and they're doing it in their DevSecOps, you know, high-security clusters, where everything gets restarted on average every four hours.

That's very interesting.

I found not fire right now because I'm too lazy to write a prompter across the grease scopes helm shops on startup.

So I just kill it every couple of minutes, hours.

Well, what we're using for that is just timeout.

OK. Oh, yeah, you're right.

That's a smart trick, right?

So in your entrypoint script, if you just add the timeout command, then you can have your pod automatically cycle itself.

This is optimistic, right, that, of course, they won't all do it at the same time, which is unlikely, but good luck.
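One common way to make it less likely that every pod cycles at the same moment is to add random jitter to the lifetime passed to `timeout`. The sketch below is only an illustration of that idea: the function name, the 20% jitter, and the `./start-app` command are hypothetical and were not mentioned on the call.

```python
import random


def restart_after_seconds(base_seconds, jitter_fraction=0.2, rng=random):
    """Pick a pod lifetime around base_seconds, spread by +/- jitter_fraction."""
    if not 0 <= jitter_fraction < 1:
        raise ValueError("jitter_fraction must be in [0, 1)")
    spread = base_seconds * jitter_fraction
    return base_seconds + rng.uniform(-spread, spread)


four_hours = 4 * 60 * 60
# "./start-app" stands in for whatever the entrypoint normally runs.
print(f"timeout {restart_after_seconds(four_hours):.0f} ./start-app")
```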

And also, it's a hard kill of the process, I imagine.

So it kills all the existing connections.

Yeah, it's not great. The signal on timeout is actually SIGTERM.

Let's see.

Oh, OK.

So you can actually kind of let in the and past.

Yeah, if your app handles the SIGTERM gracefully.
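GNU `timeout` sends TERM by default, so a process that wants to drain cleanly needs a handler for it. Here is a minimal Python sketch of that pattern; the class and function names are invented for illustration, and a real service would also need to stop accepting new connections and finish in-flight ones.

```python
import signal


class GracefulShutdown:
    """Records that SIGTERM arrived so the main loop can exit cleanly."""

    def __init__(self):
        self.stop_requested = False
        # Must be called from the main thread.
        signal.signal(signal.SIGTERM, self._handle)

    def _handle(self, signum, frame):
        self.stop_requested = True


def serve(shutdown, handle_one_request):
    """Handle requests until a shutdown is requested; return how many ran."""
    handled = 0
    while not shutdown.stop_requested:
        handle_one_request()
        handled += 1
    return handled
```

The loop finishes the request it is working on before checking the flag, which is the difference between this and a hard kill that drops existing connections.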

Let's go.

Also, I just shared this in office hours.

It looks like there's a project out there.

It's called descheduler, and it will wake up when it thinks the cluster's unbalanced and start killing pods off so that they get rescheduled.

I would argue that that is not super necessary.
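To make the rebalancing idea concrete, here is a small Python sketch that only does the first step: deciding which nodes look over- or under-committed based on their memory-request percentage. It is not the descheduler's implementation; the function name and the 50/80 thresholds are assumptions for illustration.

```python
def classify_nodes(requested_pct, low=50, high=80):
    """Split nodes into (over_committed, under_committed) by memory-request %.

    requested_pct maps node name -> sum of pod memory requests as a
    percentage of the node's allocatable memory.
    """
    over = sorted(n for n, pct in requested_pct.items() if pct > high)
    under = sorted(n for n, pct in requested_pct.items() if pct < low)
    return over, under


# Hypothetical cluster resembling the one described: some nodes in the 90s,
# others at 30-40%.
nodes = {"node-a": 96, "node-b": 40, "node-c": 72, "node-d": 30}
over, under = classify_nodes(nodes)
print("evict from:", over, "room on:", under)
```

Evicting pods from the first group only helps if the scheduler then places them on the second group, which it is free not to do.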

Because, I mean, yeah, sure, a node is getting full, but if everything's running fine, so what?

Yeah, more or less.

So, right. If you have an overloaded node, overloaded being a subjective term, but let's say it's running at 80% capacity and everything's hunky-dory, and then, in Brian's cluster, this one pod gets that request that causes the memory to spike to 5 gigs.

That's what he wants to avoid.

So if on average it's more balanced, I think the probability of that causing problems is less. And we're assuming that that misbehaving pod doesn't have a limit on it.

Well, now they do.

But it could still be at, like, 99 or 96 percent memory requests, where you don't have much memory left. At least for me, I have one or two instances like that.

Yeah, I mean, it's not like all my nodes are at 99, 96, 97 percent.

But some of them are.

But then on the other side, there are other nodes that are at 30%, 40% request.

Like, I want to get to a point where all of them are around 80% memory requests, with a 20% pool of memory for when you do spike up to five gigs in memory.

I do see these spikes, but they're very short.

So is 80% too high?

Yeah, I think 80% is... my clusters are like 40% memory request, or maybe that's memory usage.

40% memory usage or memory request, I don't know.

I'm not sure which one is the request there.

So for me, I have nodes between 30% memory request and 99 percent memory request.

I'm struggling to get them all to like 70, 80% memory request. Actual memory usage on each node is about 40%, 50%.

Actually, no, I take that back: 50, 60%.

I do think this is good.

But, to make a general statement, I would say don't sweat it too much.

You know, unless things aren't going well.

Oh, so, I have these nodes that just reboot every week and a half, randomly.

My best theory right now is that every single time it happens, the memory requests are really high.

It's in the 90s every single time.

OK.

So, I mean, because I can't find anything in the kernel logs or the kubelet logs, this is my best bet right now.

That's kind of why I've gone down this rabbit hole.

I'm not 100% sure if this is the reason why it's happening.

But it's my best bet right now, just because I don't see anything in the logs.

The other interesting thing, just a theory, and I'm not sure if it would help, would be if you did have the autoscaler enabled.

What you will see is nodes more frequently getting replaced.

So that would automatically also impact the rebalancing of pods across the cluster.

Now that may or may not alleviate the problem.

For example, if you're using Spot, like the service, you would be replacing nodes all day long, every day, throughout the week.

And that would also keep the OS fresh on all those boxes and the pods continually getting rescheduled.

I have these ephemeral clusters.

So like some of these EC2 nodes are four or five days old, and then they'll reboot.

So it is.

Previously, we had clusters that were up for longer, maybe three, four months, and we'd get that maintenance reboot, which is on the hardware.

But you'll see that in CloudWatch.

You'll see the EC2 system health check, or whatever, the system status check, cause a reboot.

This is like a service-level reboot, not an EC2 host reboot.

Any other questions related to Terraform, DevOps, security, AWS?

I've been doing good, my friend.

I'm going to start digging into the AWS ECS web app example a little bit. I'm preparing an eight-hour training session.

And perhaps next time, once I dig into it a little bit more, I'll have questions. But I didn't see any EKS examples.

That's why I just went straight to the ECS one.

Oh, we definitely have examples of all of that stuff.

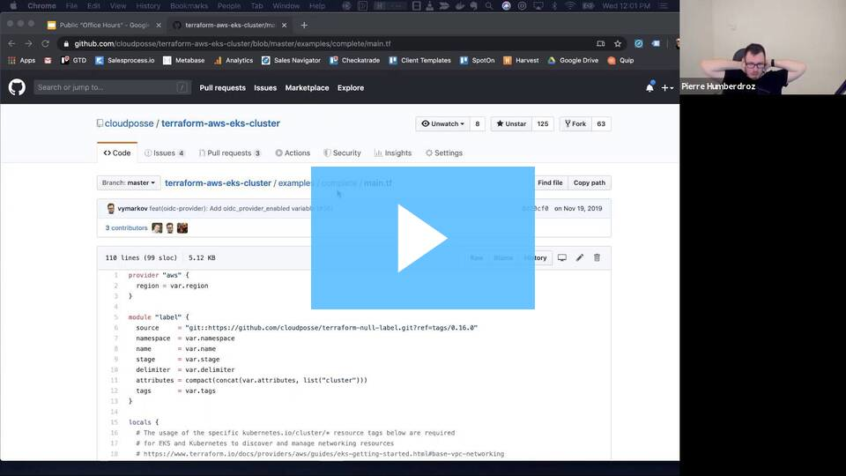

Let me show you that full screen. This goes for pretty much all of our modules, especially those that have been upgraded to Terraform 0.12 support.

Yeah, that's kind of why I'm not using my packages because I don't really want to bother with China.

And yeah.

What better way than to also start contributing to.

So like here.

Let's take an example, the EKS cluster.

So one of the things we've done is, under test/src, we've implemented some basic Terratest examples that bring up the cluster, ensure that the nodes connect, and then tear it down when everything is done or if it fails.

So here you can see, like, the logic for waiting until the worker joins the cluster.
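The "wait until the worker joins" logic is essentially a bounded retry loop. The module's actual tests are written with Terratest (Go); the Python sketch below only illustrates the general pattern, and the function name, retry count, and delay are assumptions rather than the module's values.

```python
import time


def wait_until(check, retries=30, delay_seconds=10.0, sleep=time.sleep):
    """Call check() until it returns True; return the attempt that succeeded.

    Raises TimeoutError if the condition is still false after `retries` tries.
    """
    for attempt in range(1, retries + 1):
        if check():
            return attempt
        if attempt < retries:
            sleep(delay_seconds)
    raise TimeoutError(f"condition not met after {retries} attempts")
```

In a cluster test, `check` would be something like "at least one node is registered and Ready"; failing after a bounded number of attempts is what lets the test tear everything down instead of hanging.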

This has enabled us to accept pull requests much faster for the modules.

Now, if you go into the examples/complete folder, here is where we have it.

I'm not saying this is a reference architecture; it's a minimally viable example of how to get the EKS cluster up.

That should be sufficient for your class.

So as you know, our modules are very composable.

So you first bring up the VPC, then provision the subnets, then we provision the workers and the EKS cluster, and then this worker node pool is connected to this cluster.

Sweet. I'll take a look into this.

I'm not sure I want to overwhelm my students with all of that at the same time.

But I definitely want to reference it: if you are interested in using Kubernetes, that is kind of the path to go down.

Yeah, Kubernetes.

A lot of complexity there.

And I mean, as it relates to ECS, I think our ECS implementation is more on the advanced side of how to do ECS, and isn't like the Hello World example of ECS.

So just keep that in mind as well.

I know I'm kind of underselling our module there.

But the point is that our modules are designed for advanced use cases and have a lot of degrees of variation in configuration.

Yeah, I agree.

It can be tough.

I learned yesterday a module.

But I ended up writing my own after that, especially with the task definition that you have.

It's kind of funky.

I hope you do you guys do it, you do a module.

So it might be better to just let them know that.

This is a complaint I have about using ECS in general, especially with CI/CD.

The fact that you need to use a heavy handed tool like Terraform to deploy a new task.

It's like the wrong tool for the job.

Yeah, I had quite an experience.

Yeah, I work with ecs-deploy, which is a Python script, and it just, like, deactivates your old task definitions.

So Terraform sees things that are inactive and won't try to modify them.

So yeah, the trick I did for our CD is not ideal.

But it works.

And this is why I fight Yeah.

So this is one of the complaints I have with ECS and Terraform: it's that deployment part. And this would be true if you were also choosing to use Terraform to do your deployments to Kubernetes, but many of us do not use Terraform for those deployments, and therefore we don't have that same challenge.

And the thing is, it could be quite easily solved by Terraform.

The only issue is basically that, if there is an inactive one, instead of taking the latest task definition, they just stick to the one they have.

And if it's inactive, they consider it dead, instead of looking up all of the task definitions and then just taking the latest one.
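The behavior being asked for, "look at all revisions and take the latest active one", can be sketched in a few lines of Python. This is only an illustration of the selection rule, not how Terraform or ecs-deploy work internally; the dictionaries mimic the `revision` and `status` fields of ECS task definitions, and the sample data is made up.

```python
def latest_active(task_definitions):
    """Return the highest-revision ACTIVE task definition, or None."""
    active = [td for td in task_definitions if td["status"] == "ACTIVE"]
    if not active:
        return None
    return max(active, key=lambda td: td["revision"])


# Hypothetical family: an external deploy tool registered revision 8 and
# deregistered revision 7, which is the one the IaC state still points at.
revisions = [
    {"family": "web", "revision": 6, "status": "INACTIVE"},
    {"family": "web", "revision": 7, "status": "INACTIVE"},
    {"family": "web", "revision": 8, "status": "ACTIVE"},
]
print(latest_active(revisions))
```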

So I think that's a strategy it could take that would be nice.

Yeah, I appreciate y'all's help on that.

And I'll definitely be checking back in once I start digging into it.

Yeah, feel free to ask away in the terraform or general channels if you're blocked.

I'm sure our Savior Andre will help out.

So over vacation time I got into an argument with some friends that needed some help with their Kubernetes cluster, and they wanted to scale their services, some TensorFlow stuff, based on latency. And I was like, scaling on latency is something that I've never done and would never do.

And then they asked why this is a bad thing.

And I just wanted to know, how do you scale?

Like, imagine provisioning an HPA.

How do you scale? On which kind of metrics?

I guess CPU or memory is the most common one.

But I think with latency, it's usually down to the database.

Exactly. I mean, that's why I was like, no, not good.

But they were pretty persistent on it.

I was like, that's something for an alert, but not for scaling your pods. Yeah, I just would like your input.

Well, I guess they are going to open and we decided to play something out.

But I mean, it's kind of like, oh, did you guys like that.

I would just like to know.

First of all, the arguments you have during the holidays are very different than the arguments I have during the holidays. No politics, none of the other kinds of family discussions.

Now, you brought up a great point, right?

In an academic setting, devoid of reality and all the other things that can go wrong, scaling on latency seems like a great idea. Except in reality, it's usually because of all these upstream problems that you have no control over.

And, like, if the database slows down, your latency goes up.

So you scale out more workers, which slam the database even more, so it slows down even more.

And then you have a catastrophic failure.

Everything falls down.
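The feedback loop described here can be shown with a deliberately crude toy model. Everything in this Python sketch is an assumption made for illustration: the linear latency formula, the fixed database capacity, the per-worker load, and the "double the workers" scaling rule do not describe any real autoscaler or database.

```python
def simulate(steps=5, workers=10, db_capacity=100.0, per_worker_load=12.0,
             base_latency_ms=50.0, target_ms=55.0):
    """Toy model: scale out whenever latency exceeds the target.

    Latency is flat until total load exceeds the database's capacity, then
    grows in proportion to the overload. Returns [(workers, latency_ms), ...].
    """
    history = []
    for _ in range(steps):
        load = workers * per_worker_load
        latency = base_latency_ms * max(1.0, load / db_capacity)
        history.append((workers, round(latency, 1)))
        if latency > target_ms:
            workers *= 2  # naive "latency is high, add workers" rule
    return history


for workers, latency in simulate():
    print(f"{workers:4d} workers -> {latency} ms")
```

In this model every scale-out step makes latency worse, because the bottleneck is downstream of the thing being scaled. That is the argument for alerting on latency rather than autoscaling on it.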

So I think that when you scale or how you scale, and this is a cop-out, I hate to say it, depends a lot on what you're building.

You said it's TensorFlow, right?

Let's see.

I thought that kicks of memory allocated to them.

I think it was for use.

So it must pretty hefty parts.

Something's wrong.

So let's see here if I remember this correctly.

It was a year and a half ago or so.

We did a project with Caltech using kops and HPA and TensorFlow, and they have a Redis queue with all the tasks in there, I believe, or the images or something that they needed to process, and they then wrote a custom HPA autoscaler.

There are a lot of examples on how to do a custom setup for that.

And so they wrote a custom one that just looks at the size of the Redis queue and then scales the pods based on that.
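The core of a queue-length autoscaler is a small calculation from backlog to replica count. The Python sketch below shows one plausible version; it is not the Caltech project's code, and the function name, the `items_per_pod` ratio, and the replica bounds are assumptions for illustration. Reading the queue length itself (for example with Redis `LLEN`) is left out so the example stays self-contained.

```python
import math


def desired_replicas(queue_length, items_per_pod, min_replicas=1, max_replicas=50):
    """Map a work-queue backlog to a replica count, clamped to [min, max]."""
    if items_per_pod <= 0:
        raise ValueError("items_per_pod must be positive")
    wanted = math.ceil(queue_length / items_per_pod)
    return max(min_replicas, min(max_replicas, wanted))


for backlog in (0, 95, 10_000):
    print(backlog, "->", desired_replicas(backlog, items_per_pod=10))
```

Unlike latency, queue depth measures work that is actually waiting for this tier, which is why it tends to be a safer scaling signal.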

Yeah OK.

This one's more like a traditional application thing, basically sending data via POST.

And then it was doing language processing, so a lot of processing, and then a lot of traffic; they had like 50,000 requests per second.

I don't know what it is.

Yeah, I don't know what aligning.

I didn't ask.

But it's like, yeah, that was language processing, and the cluster had 140 nodes or something.

All with 64 gigs of RAM, which was kind of crazy.

But yeah.

So yeah, just like that.

Yeah, it is.

I was like, I was really interested in it.

But it's like, yeah, my counterpart was a scientist.

And he was like area using two to are aside each and doesn't have time.

I want to have.

I was like, OK, give me a contract.

I will sign and give you money.

And I will look at it.

Wrote quite well.

But it's like, yeah.

Is the argument I had.

But it was kind of like that.

Yeah Yeah.

I think at the scale that they're doing, this example kind of isn't going to be helpful.

But for what it's worth, here is that autoscaler, and that stuff is all defined in this kiosk.

I'll share it in general office hours.

Jim OK.

Any other interesting questions, guys.

I have a question regarding Kubernetes, like if you have servers that require direct port mapping.

I have Ambassador in front of it.

So I was wondering how easy or hard it is to get the raw socket there for a couple of pods.

You should read these suckers.

Let's see if I can say it in my own words: you want a dedicated IP per service?

I don't really care about it.

Nothing I didn't understand lol.

Basically, I have a couple of servers that are only raw socket connections.

I'm trying to route them using Ambassador, well, the solution doesn't really matter, but like something in front of it.

So they can share a unique IP with a different public port, and just NAT to the internal port.

Gotcha.

I don't have any feedback on that one.

Somebody else want to chime in.

Maybe with Ambassador experience, or an alternative, NGINX?

Or Envoy, or what?

Sorry Yeah.

Hey Eric, got a question anyway.

Do you play with Kubernetes on Windows?

Does it work with Windows containers?

Mp one that I'm about to get I'm working on that.

You're a brave man coming here like that.

I'm kidding.

I know, I saw you created the windows channel in SweetOps.

Thank you for that.

I guess it's about time.

This is not the... Yeah, it's not the same OS it used to be.

But I can't help with that someone else you're beautiful.

Just about anybody stuck in it.

But what I'm asking.

Yeah, I'm dreading the day I will have to do that.

It's coming though.

It's in the future.

Yeah well I'm driving.

So what is it is what it is.

The last time I had to do Windows nodes, I used Azure Service Fabric, and it sucked.

Well, let's see here.

So Andrew, since you're on the call, and you'd asked a week or so ago, or two weeks ago over the holidays, kind of like, how do we do these chat notifications?

Actually, perfect segue, because Carlos was the one who recently created this channel here.

So how do we set up this automatic notification when new channels come up?

So it's a pretty quick and easy demo.

Basically, I have a link here somewhere.

So do many of you guys use Zapier today?

I never say I use it for our CNN integration with one of our products.

My mouse has.

So yeah.

This is just a deep link into our account, so I don't have to search around for it.

This is the zap that we have that handles that.

So basically, with Zapier you can have all these different integrations to the products you use: Gmail, Slack, MySQL, Postgres, GitHub, et cetera.

And in this case, the application is when a new channel is discovered via webhook integration.

Here's an example from when I set this up a year ago.

And then here's the metadata that came back, and who created that channel.

So then we can just jump over to the next step, which is to send a custom channel message.

And what I love about it is you can really customize everything about that message and how it gets sent.

And it makes it very easy to just add fields that are available from any of that data that was passed from previous step.
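For readers who want to see what that second step amounts to outside of Zapier, here is a minimal Python sketch that turns a Slack `channel_created` event into a message payload. The function name and the message wording are assumptions; the event fields (`channel.id`, `channel.name`, `channel.creator`) follow the shape of Slack's `channel_created` event, and the sample IDs are made up. Posting the payload to a webhook is left out to keep the example self-contained.

```python
def new_channel_message(event):
    """Build a Slack message payload announcing a newly created channel."""
    channel = event["channel"]
    text = (
        f"New channel <#{channel['id']}|{channel['name']}> "
        f"was created by <@{channel['creator']}>"
    )
    return {"text": text}


# Made-up example event.
event = {
    "type": "channel_created",
    "channel": {"id": "C0000000001", "name": "windows", "creator": "U0000000001"},
}
print(new_channel_message(event))
```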

And we've taken this to the extreme. In our account, we handle almost 20,000 zaps every month.

And we have over 111 of these across our company.

And what we do to automate various aspects from things that happen in Gmail things that happen in QuickBooks things that happen.

And build out com all that we automate send it into our Slack channel as simple as view for what's going on with Social Security's app and a task could just be overloading.

Oh, OK.

Yeah, exactly.

Exactly. I was looking at the pricing and it's like, OK, on the free plan you get five zaps and 100 task executions.

So a zap is like the code, the program.

OK, you get unlimited programs.

And then how many executions of that.

OK, so that is another way of thinking about it, especially now that they have code steps.

So you can run JavaScript.

You can run Python code.

So it's almost like just a GUI for doing Lambda, and then they provide all the integrations to all these different services.

And it becomes the glue to tie everything together.

Yeah, the downside, since this is an ops-oriented group: there's no API.

Ironically, there's no API to Zapier.

It's really strange. And because there's no API, there are also no zaps to automate Zapier in that way.

Which is unfortunate.

So you can't do Zapier as code, no Terraform module or anything.

Wow, so disappointed.

What happens when you run out of your 20,000 tasks or whatever?

It's a shelf.

It sucks.

They require you to upgrade to the next plan.

They don't have an overage model.

So if by the 16th I max out these 1,000 zaps here, I'm going to have to upgrade again.

Another $100 a month or whatever.

Yeah Yourself though.

So we create 1000 channels small and well I say reset.

So we create one, I want to go create an idea.

Don't do that.

Look look look.

How can we cost Eric money.

Let's do that.

OK Yeah.

That's funny.

OK How much.

It seems like it would be not that hard to create a Lambda or something that you hooked together.

Spoken like a true developer, my friend.

Could you really just use Lambda?

You can. With Slack, you can set up webhooks.

Yeah, no.

And the reality is there are open source Zapier alternatives.

It's, you know, the buy versus build mentality. One was just posted on Hacker News.

There's Huginn, or Huggins, with an H.

Well, I see it there.

Huginn, I think it is.

Thank you.

Agents that monitor and act on your behalf.

Like I'm so blind here.

Agents that.

Yeah Georgie I and I just skip it.

Just go back to just this is the funny thing.

Yeah, just go back up there.

You got a different hash.

Wow Yeah.

Linus wow.

It wasn't taken back two months ago.

A woman.

So yeah, I think it's one of those things; depending on what you want to accomplish, that could be great.

Also, a lot of the Zapier integrations are actually on their GitHub.

I just realized that the other day.

So they're open source, and you can PR them if you need to. It'd be interesting if something like Huginn actually worked with Zapier plugins, but I don't think it does.

Yeah, it's also not pretty.

Oh, it's been great.

I was like plugged in any moment that you added.

Yeah So let's hear it.

Here are the agents that it supports.

Where is the screenshot.

So I don't know how many agents.

It supports today.

I think that's the main thing.

The main consideration is, like, when you go to Zapier, literally, I think there are thousands of integrations that it has.

1,500. So it's quite a lot.

And anyone who's worked with third-party APIs knows they break all the time.

They upgrade they change.

So you might get it working on a weekend.

But it's going to break three weeks from now.

And then you're never going to get back to it, because now you're not on holiday break right.

OK, cool.

Thanks. Any other questions about this or something else?

Nobody using Podman yet?

No, I know they came up a few weeks or a month or so ago.

I know officers this is always a serious.

Has anyone kicked the tires on this project?

I think it's in the incubator: KubeSphere.

If any of you guys think it's here.

So when I got this dashboard.

I want.

Yeah Yeah.

But it combines a lot of things.

And it's kind of interesting.

If you look under the hood on the architecture of what it uses.

It's pretty similar to the components that also first of all.

Here's the UI.

So you know pictures are always pretty.

So it's a nice UI of everything operating in the system.

You could say it's something like OpenShift, but maybe not as opinionated or as complicated.

I believe it deploys entirely on Kubernetes, unlike OpenShift, where you have to have control over the host OS. They have a demo environment you can log in to and check out.

But here's the architecture.

I thought was interesting.

Let's see.

Why is it not loading? It looks like Rancher.

Yeah, I'm looking at the screenshots going, wait.

This isn't Rancher?

Yeah, looks like they're similar.

It looks like the GitHub Camo proxy has bungled this image here. Maybe it works when you open it up in incognito.

So glad you're in production yet. Rancher, though, uses mostly their own in-house components, right?

And isn't built on.

Aside from Kubernetes, of course, which they're built on, but it's a lot of in-house things versus this.

It looks like.

And I can't see it, but what you would see here is that it uses, like, Jenkins under the hood, and it uses Prometheus and Grafana.

And I think it has Fluentd, and it looked very much like the stack that we are using today.

I was going to deploy the NATS operator and I saw it also, because it's on Kubernetes, and it's kind of annoying that everyone and everything is deploying its own dependencies.

A little bit.

I kind of like I it all the time.

And knows me.

I don't know where you're deploying.

What was it, NATS?

It's a pub/sub alternative, and the NATS operator also deploys Prometheus and Grafana and all that stuff.

And I'm like, I have my Prometheus operator.

What was it like.

There was one that bundled Prometheus with it.

Yeah Fox is doing the same.

It's like, yeah.

OK, I get it.

You need it, but it's like it's always included right now.

It's like so.

So from experience.

I think I have the answer.

Now to that.

But I am equally frustrated.

So we support a product called Kubecost.

We have a channel for that in SweetOps, the kubecost channel, and Kubecost is a cost visualization tool for Kubernetes and your cloud provider.

So like all sports to get and we'll show you how much that's costing in real time estimations of course.

But it too ships its own version of Prometheus, and I shook my fist at them and said, why are you doing this to us? You know, we spent a lot... like, scaling Prometheus is non-trivial.

You can't just throw Prometheus in and expect it to actually work in production.

You can do it for a great example and make your charts very presentable, but it's not the way forward.

And then, worse yet, they didn't support a way to bring your own Prometheus, so that made it worse.

Well, we spent probably like a month on the integration effort trying to get their Kubecost working with our Prometheus, and there's just so many settings and so many assumptions and so many things that make everyone's Prometheus installation kind of different.

Especially, I guess, if you're maybe not using Prometheus operator.

So I guess the reason why all these vendors are shipping it is because most people lack Prometheus operational experience.

The vendors know how to set it up the right way, and they've got to have a successful product install in the first five minutes or people are just going to get bored and walk away.

So I guess that's why NATS is doing that too.

And I can only assume that it's just like, that's fucked up.

I mean, it's not even using that scanning or something just like monitoring it doesn't have any use.

It's not utilized at all.

Only scaling something into just like it's there.

So I guess I guess I guess what we got to get more comfortable or not the right word.

Maybe or so.

I mean, the built in Prometheus the architecture is pretty nice right.

Prometheus can scrape other Prometheuses.

So I guess what.

We have.

So I guess really what we should be adopting is a pattern of getting more comfortable with just scraping other Prometheuses and aggregating them, rather than expecting all these third-party services to use our Prometheus.

Yeah.
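
As a rough sketch of the "scrape the other Prometheus" pattern described here: Prometheus exposes a `/federate` endpoint that takes one or more `match[]` series selectors, and a central Prometheus can scrape that endpoint on a vendor-bundled instance. The helper below only builds such a URL; the function name, the in-cluster hostname, and the selectors are all hypothetical and were not mentioned on the call.

```python
from urllib.parse import urlencode


def federate_url(base_url: str, selectors: list[str]) -> str:
    """Build a /federate scrape URL for a downstream Prometheus.

    Each selector becomes one `match[]` query parameter, which is how the
    federation endpoint is told which series to expose.
    """
    query = urlencode([("match[]", selector) for selector in selectors])
    return f"{base_url.rstrip('/')}/federate?{query}"


# Hypothetical address of a Prometheus bundled with a third-party chart.
url = federate_url(
    "http://bundled-prometheus.vendor.svc:9090/",
    ['{job="vendor-metrics"}', '{__name__=~"vendor_.*"}'],
)
print(url)
```

The central Prometheus would then treat that URL as just another scrape target, so the bundled instance keeps its vendor-specific settings while the aggregated data still ends up in one place.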

Just looking at the memory using obscene.

Can we use it open positive post, for example.

It's not much.

It's a 150 megabyte and being about.

But it's like still it's just an awesome idea Federated and died scraping basically from my Prometheus operator and it still is like one more component that you have to worry about.

And it's the same component.

And if someone tells you.

Hey, my Prometheus isn't working.

Which one is it.

Yeah Yeah, right.

So do you guys use Thanos or another aggregator for Prometheus?

Like storage backends.

Yeah Yeah.

Yeah, it's more like a federation also.

No, I only belch up.

I Volta as I function to actually save everything to pop search explode in search of search to have it acceptable that this came up recently also when talking with a customer and also I know Andrew Roth.

He does what we are doing and that I was actually going to chime in and give an update.

So now it's been probably two, three months operating.

Yes, on EFS, for a production cluster.

And it's working well.

But there were some growing pains along the way.

The two biggest problems we had: one was, if you don't have the memory limits set correctly on Prometheus, over time it will just crash, and when it crashes, it leaves these WAL files around and you can't auto-recover.

After that, you have to exec into the container and delete the WAL.

But the other interesting thing we noticed from the Prometheus is.

So I forget what version it is.

But the latest version of Prometheus under Prometheus operator.

This is second hand.

One of my team members was relating this to me.

So forgive me if there's some loss of precision here.

Is that it will auto-discover what your memory limits should be for Prometheus when you deploy it, which is cool.

However, we noticed that when it was auto discovering those limits.

If we didn't have at least a minimum of 11 gigs available to Prometheus then it didn't matter what it set those limits to automatically it would always inch up against that hard limit and then get killed by the memory reaper on the Linux kernel.

So once we bumped that up to 15 gigs, it's now sitting under that limit and being respectful of everything, and it works.

We didn't dig deeper into why it didn't work when it was just under allocated.

All right.

Well, we have five more minutes here.

Any final thoughts or interesting news articles that you have on your agenda.

Well, we haven't talked about it yet.

There's one thing.

But I don't want to I don't want to waste five minutes and rush through it.

I think you guys will dig this: how to use CloudFlare as a Swiss army knife, kind of like we use Zapier for the same thing.

You can do a lot of nice dirty, nasty hacks with CloudFlare and workers.

And we're doing that now.

So I wanted to show how you can do content injection.

Page rewrites and proxy basically any site you want.

I did a little bit with CloudFlare not too long ago.

And I was thrilled with the quality of the Terraform provider for CloudFlare.

I was able to set everything up in Terraform.

Yeah.

Now I know they're investing a lot in that.

I think a couple of the people involved in that project are actually in SweetOps, and I know they've actually reached out to Cloud Posse on their Terraform provider for feedback.

Oh, cool.

Well, yeah, we're on the radar.

One small project I have seen, this was a couple months ago, I just posted the link in the channel.

It's native policy management for Kubernetes.

It seems nice.

It's from a company.

I don't remember which company, but they did give a presentation and it seemed interesting. For example, you can set a policy that every pod needs to have limits set in your cluster, or they have more complex examples.

And then it evaluates which pods are in noncompliance with your policies, and it also blocks new deployments that violate the policies.
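
The kind of rule described here, where every workload must declare resource limits, can be sketched as a plain check over pod manifests. This is not the API of the tool being discussed, which was not named on the call. It is only an illustration of the evaluation step, with hypothetical function and pod names, operating on manifests loaded as Python dictionaries.

```python
def containers_missing_limits(pods: list[dict]) -> list[tuple[str, str]]:
    """Return (pod name, container name) pairs that declare no resource limits."""
    violations = []
    for pod in pods:
        pod_name = pod["metadata"]["name"]
        for container in pod["spec"]["containers"]:
            # `resources` and `limits` are both optional in a pod spec.
            limits = (container.get("resources") or {}).get("limits") or {}
            if not limits:
                violations.append((pod_name, container["name"]))
    return violations


# Two hypothetical pods: one compliant, one not.
pods = [
    {
        "metadata": {"name": "api"},
        "spec": {"containers": [
            {"name": "app", "resources": {"limits": {"memory": "256Mi"}}},
        ]},
    },
    {
        "metadata": {"name": "worker"},
        "spec": {"containers": [{"name": "job"}]},
    },
]

for pod_name, container_name in containers_missing_limits(pods):
    print(f"{pod_name}/{container_name} has no resource limits")
```

A policy engine running inside the cluster would apply the same kind of check at admission time, which is what lets it block new non-compliant deployments rather than only report on existing ones.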

So I thought it was cool.

Yeah, pretty simple way to express it.

Exactly Yes.

Well, CRDs are native to Kubernetes.

Yeah star someone already tried out.

OK whatever complaints of policy agent because I wanted to do policy agent that basically trails off.

Basically, you need an approval that's only lead happening.

It's just like something you edit the just older proofs and runs through the eye stuff like that.

I wanted to try out.

Let's go down.

Yeah So some plans.

Now this is probably a topic for another office hours, but one of the things I really want to try and do with this Office Hours now in 2020 is more demos and more demos by our members.

So I know a lot of you are doing some cool things cool things that we don't have a chance to do ourselves or see.

I would like to see that.

So if you guys are working, for example, with OK or somebody kicks the tires on you know I would really like to get a demo of that.

Also maybe we can get some nice other speakers onto our person can ask them questions.

I really want to get you on here and wish you as prolific GitHub contributor to the community's ecosystem.

I thought about something cool: my team is starting to do a lot of work with helmfile, and something that I don't think helmfile has yet that we miss from Terraform is that terraform-docs command.

Yes, if there was like a helmfile docs command, you know, where it would generate a table of all the environment variable inputs.

Yeah, that would be cool.
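
A minimal sketch of what such a docs generator could do, assuming the inputs are read in the helmfile template with the `env` and `requiredEnv` template functions. No such helmfile command was said to exist on the call; the function names, the regular expression, and the sample variables below are all hypothetical.

```python
import re

# Matches template calls such as {{ env "NAME" }} or {{ requiredEnv "NAME" }}.
ENV_CALL = re.compile(r'\b(requiredEnv|env)\s+"([A-Za-z_][A-Za-z0-9_]*)"')


def env_inputs(template_text: str) -> dict[str, bool]:
    """Map each referenced environment variable to whether it is required."""
    inputs: dict[str, bool] = {}
    for func, name in ENV_CALL.findall(template_text):
        # A variable counts as required if any reference uses requiredEnv.
        inputs[name] = inputs.get(name, False) or func == "requiredEnv"
    return inputs


def to_markdown(inputs: dict[str, bool]) -> str:
    """Render the inputs as a Markdown table, sorted by variable name."""
    lines = ["| Name | Required |", "|------|----------|"]
    for name in sorted(inputs):
        lines.append(f"| `{name}` | {'yes' if inputs[name] else 'no'} |")
    return "\n".join(lines)


# Hypothetical helmfile fragment used only for illustration.
SAMPLE = '''
releases:
  - name: gitlab
    namespace: {{ env "GITLAB_NAMESPACE" | default "gitlab" }}
    values:
      - hostname: {{ requiredEnv "GITLAB_HOSTNAME" }}
'''

print(to_markdown(env_inputs(SAMPLE)))
```

With 60 or so input variables, a scan like this at least keeps the table in sync with the templates, although it cannot recover descriptions the way terraform-docs reads them from variable blocks.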

The cool thought about doing something like that.

Yeah, well, you know, at least float the idea in the helmfile channel.

And let's see if there's some more interest in something like this auto documentation for helmfile.

Yeah, I'm having to write out the table right now because we're doing a big one for GitLab, and that's a sticking point.

Yeah And we've got like 60 input variables for everything it can get unwieldy.

I agree with any.

And then the more you parameterize anything, the harder it is to actually deploy.

We discovered that the environments don't really work the way we expected them to, and we weren't able to really use them the way we wanted to.

If you're going to have a helmfile and its purpose is to be a sub-helmfile, meaning, like, I've got a helmfile for GitLab, and the end user is going to create their own helmfile, and then it's going to have helmfiles, path, you know, git:: and whatever for GitLab, git:: and whatever else: if the sub-helmfile has environments in it, your root helmfile needs to have those environments declared, and it can't have any other environments.

Yeah. You have to do that.

You have to.

Exactly, it's kind of an artifact of how helmfile is implemented and the path of least resistance.

But yeah, those environments need to be declared, if they exist, in all helmfiles where they get included.

Yeah, so we ended up scrapping that idea and just doing it because what we wanted to.

It was you know we talked earlier about how I don't I don't like how helm charts default to you know insecure by default.

I want secure by default.

So our helmfile is going to be the opinionated, secure way that we want you to deploy by default.

And then there is an environment variable called local mode or whatever that you can turn on that sets all the other variables for you into the insecure mode.

So instead of having to set all kinds of stuff by reading documentation and going, what do I have to do here?

Yeah, to run on my fucking laptop, just do, like, local mode equals true.

And then run it on your laptop.
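
The "secure by default, one switch for local" idea can be sketched as follows. The variable names (`LOCAL_MODE`, `TLS_ENABLED`, `NETWORK_POLICY_ENABLED`) are hypothetical stand-ins, and letting an explicitly set variable win over the local-mode preset is an assumption of this sketch rather than something stated on the call.

```python
# Hypothetical settings; the real helmfile's variables were not listed on the call.
SECURE_DEFAULTS = {"TLS_ENABLED": "true", "NETWORK_POLICY_ENABLED": "true"}
LOCAL_OVERRIDES = {"TLS_ENABLED": "false", "NETWORK_POLICY_ENABLED": "false"}


def resolve_settings(env: dict[str, str]) -> dict[str, str]:
    """Start from secure defaults, relax them in local mode, then apply overrides."""
    settings = dict(SECURE_DEFAULTS)
    if env.get("LOCAL_MODE", "false").lower() == "true":
        settings.update(LOCAL_OVERRIDES)
    # Assumption: an explicitly set variable always wins over either preset.
    for key in settings:
        if key in env:
            settings[key] = env[key]
    return settings


print(resolve_settings({}))                      # secure defaults
print(resolve_settings({"LOCAL_MODE": "true"}))  # relaxed for a laptop
```

In practice `env` would be `os.environ`. Passing a plain dictionary keeps the sketch easy to try without touching the real environment.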

Yep So we were originally going to do like the default environment was production.

And then have an environment called local, but that didn't end up working out.

I'd be happy.

If you want to share a little bit of that next call or in a future one I'd be happy to look over them and see if there's another way of doing it.

Or some feedback based on our experience.

Sure All right, everyone looks like we've reached the end of the hour.

That about wraps things up.

Thanks again for sharing.

I always learn so much from you guys on these calls.

A recording of this call will be posted in the office hours channel.

See you guys next week.

Same place same time.

Have a nice one.

But if you.