
Managing cloud infrastructure has become an increasingly complex task, especially at scale. To keep up with the demands of modern software development, companies need the organizational and operational leverage that infrastructure as code (IaC) provides. Terraform is a popular choice for implementing IaC due to its broad support and great ecosystem. However, despite its popularity, Terraform is not a silver-bullet solution, and there are many ways to get it wrong. In fact, even the most experienced practitioners can fall victim to nuanced and subtle failures in design and implementation that can lead to technical debt and insecure, sprawling cloud infrastructure.

This article explores some of the common mistakes and pitfalls that companies face when attempting to implement Terraform for infrastructure as code. We won't focus on fixing a failed plan or when a “terraform apply” goes sideways but rather on the underlying design and implementation issues that can cause small problems to snowball into major challenges.

Design Failures

The initial choices made when designing a Terraform-based cloud infrastructure implementation will be some of the most consequential; their impact will ripple throughout the project's lifecycle. It is beyond critical that engineering organizations make the right choices during this phase. Unfortunately, poor design decisions are common. There is a persistent tendency to focus most of the design effort on the application itself, and the infrastructure config is neglected. The resulting technical debt and cultural missteps can grind technical objectives to a halt.

“Not Built Here” Syndrome

Otherwise known as “re-inventing the wheel,” this is the tendency of engineering teams to design their own configuration from scratch, regardless of complexity or time commitment, because they “don't trust” third-party code or because it doesn't precisely match their specifications. When engineers insist on building their own solutions, they may duplicate work already done elsewhere, wasting time, effort, and resources. Re-inventing the wheel may also indicate underlying cultural issues: isolation from external innovations or ideas, as well as resistance to change, will significantly hamper the effectiveness of DevOps initiatives.

Not Designing for Scalability or Modularity

Too often, infrastructure implementations aren't designed to take full advantage of one of Terraform's most powerful features: modularity. Designing application infrastructure for scalability is a must-have in the modern tech ecosystem, and Terraform provides the tools to get it done.

What often ends up happening in these scenarios is the following:

- Design phase finishes; time to build!

- There is a significant push and effort to get a working application stack shipped.

- Some Terraform configuration is hastily written in the root of the application repository.

- Version 1 is complete! The compute nodes and databases are deployed with Terraform. Success!

Unfortunately, the next step, going back to refactor that hastily written configuration into reusable modules, never comes, and the entire process is repeated when it comes time to deploy a new application. If the existing application stack needs an updated infrastructure configuration, such as a new region, it's just added to the same root module. Over time, this grows into an unmanageable mess. What about deploying to different environments like QA or Staging? What about disaster recovery? Without the use of modules, engineers are forced to duplicate effort and code, violating the principle of “Don't Repeat Yourself” and creating a huge pile of tech debt.

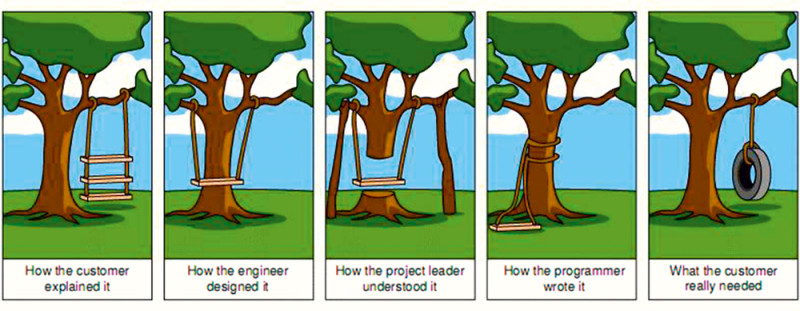
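As a rough sketch of the alternative, the stack can live in one module that is instantiated once per region or environment. The module path `./modules/app_stack` and its inputs (`name_prefix`, `instance_count`) are hypothetical names chosen for illustration; the regions and sizes are placeholders.

```hcl
# Two provider configurations, one per region.
provider "aws" {
  alias  = "primary"
  region = "us-east-1"
}

provider "aws" {
  alias  = "dr"
  region = "us-west-2"
}

# Primary deployment of the (hypothetical) application stack module.
module "app_primary" {
  source = "./modules/app_stack" # assumed local module path

  providers = {
    aws = aws.primary
  }

  name_prefix    = "shop-prod-use1" # assumed module input
  instance_count = 3                # assumed module input
}

# Disaster-recovery copy: same module, different region and sizing.
module "app_dr" {
  source = "./modules/app_stack"

  providers = {
    aws = aws.dr
  }

  name_prefix    = "shop-prod-usw2"
  instance_count = 1
}
```

Adding a region or a QA environment then becomes another short `module` block rather than another copy of the resource definitions.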

No Design Collaboration with Developers

One of the original goals of DevOps was to foster closer collaboration between development and operations staff. Sadly, modern development environments still maintain unnecessary silos between these two groups. Nearly fully formed application designs are kicked to DevOps and cloud engineers to implement without collaboration on the design and its potential issues, which is the antithesis of DevOps culture.

A key indicator of success in modern software development is the number of successful deployments over a given period of time; deployment velocity is crucial to achieving business goals. If design choices and feedback yo-yo between disparate groups, and engineering teams are at odds over technical ownership and agency, then deployment velocity will suffer.

Unrealistic Deadlines or Goals

Design failures and breakdowns also place a direct burden on engineers, who are often handed unrealistic goals and expected to meet unreasonable deadlines for project implementation.

Because the design was done in a vacuum, feature delivery estimates don't account for the effort required to implement the infrastructure. Looming deadlines inevitably lead to shortcuts, which lead to tech debt, which leads to security issues. Engineers classically underestimate the time and effort involved and overestimate the likelihood of getting it right the first time. The planning was done without considering infrastructure goals or security outcomes, assuming a “perfect world” without interruptions. Then the “real world” sets in, and the inevitable firefighting kills a lot of projects before they ever get off the ground.

Implementation Failures

A well-thought-out design is crucial, but ensuring that the implementation phase is executed effectively is equally essential. If good design is not followed by proper implementation, engineering teams may find themselves dealing with a new set of challenges. Design failures, if caught early, are easier to rectify. Implementation failures tend to be far more costly and time-consuming.

Not Enforcing Standards and Conventions

Terraform codebases that have never had naming standards or usage conventions enforced are a mess, and it can be tough to walk back from this one once it has set in at scale.

Common breakdowns with standards and conventions include:

- No consistent way of naming deployed resources (`prod-db`, `db-PROD`).

- Inability to deploy resources multiple times because they are not adequately disambiguated with input parameters.

- Hardcoded settings that should be parameterized.

- One application stack separates folders by the environment; another might use one root module with multiple workspaces. Others stick all environments in one root module with a single workspace with three dozen parameters.

- Sometimes a data source is used to create an IAM policy, or a `HEREDOC`; other times it's `aws_iam_role_policy`.

- One configuration organizes resource types by files; another organizes application components by files.
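One way to address several of these breakdowns at once is to derive every resource name from validated inputs and to pick a single way of writing IAM policies. The sketch below is illustrative only: the variable names, the allowed environment list, and the `-assets` bucket suffix are assumptions, and choosing the `aws_iam_policy_document` data source over a heredoc is just one possible convention.

```hcl
variable "application" {
  type        = string
  description = "Short application name used in every resource name."
}

variable "environment" {
  type        = string
  description = "Deployment environment."

  # Reject values outside the agreed list (the list itself is an assumption).
  validation {
    condition     = contains(["dev", "qa", "staging", "prod"], var.environment)
    error_message = "The environment must be one of: dev, qa, staging, prod."
  }
}

locals {
  # Single source of truth for naming: <application>-<environment>, lowercase.
  name_prefix = lower("${var.application}-${var.environment}")
}

resource "aws_s3_bucket" "assets" {
  bucket = "${local.name_prefix}-assets"

  tags = {
    Application = var.application
    Environment = var.environment
  }
}

# One consistent style for IAM policies: the policy document data source.
data "aws_iam_policy_document" "assets_read" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.assets.arn}/*"]
  }
}
```

Because nothing is hardcoded, the same configuration can be applied more than once with different `application` and `environment` values without name collisions.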

Here’s an example of an inconsistency that’s very hard to walk back from: the choice of hyphens vs. underscores in resource names, or Terraform resource names that use a random mishmash of snake_case, camelCase, and PascalCase:

    resource "aws_instance" "frontend_web_server" {
      ...
    }

vs.

    resource "aws_instance" "frontend-webServer" {
      ...
    }

(And by the way, why are these web servers static EC2 instances anyway?)

While both resource declarations will correctly create an EC2 instance, even if their configuration is identical, they'll have different resource addresses (`aws_instance.frontend_web_server` vs. `aws_instance.frontend-webServer`) in the configuration, the state file, and the Terraform backend, and every reference to them must match exactly. This may seem innocuous, but it becomes a much bigger problem in larger codebases with lots of interpolated values and outputs.
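If a team does decide to standardize names after the fact, Terraform 1.1 and later provide `moved` blocks, which record a rename so the existing instance is not destroyed and recreated. The following is a minimal sketch using the two names from the example above; the `ami_id` variable, the instance type, and the output are hypothetical, and every other reference to the old address still has to be updated by hand.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI for the web server (hypothetical input)."
}

# The resource under its new, snake_case name.
resource "aws_instance" "frontend_web_server" {
  ami           = var.ami_id
  instance_type = "t3.micro" # placeholder size
}

# Tell Terraform that the object tracked at the old address now lives
# at the new one, so the next plan shows a move instead of a replacement.
moved {
  from = aws_instance.frontend-webServer
  to   = aws_instance.frontend_web_server
}

# Any reference elsewhere in the codebase must use the new address.
output "frontend_private_ip" {
  value = aws_instance.frontend_web_server.private_ip
}
```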

These little inconsistencies can add up to untenable tech debt over time that makes understanding the infrastructure complex for newer engineers and can engender a sense of fear or reluctance towards change.

Allowing Code and Resources to Sprawl

As mentioned in a previous section, Terraform provides features that enable modularity and the DRY principle, notably modules and workspaces. Terraform deployments with repeated code are hard to test and work with.

When greeted with a sprawling, unmanaged tangle of Terraform resources, engineers often follow their first impulse: start from scratch with a greenfield configuration. Unfortunately, this approach often exacerbates the existing problem. Resource sprawl represents a two-fold problem for most software organizations: it leads to increased costs and a weakened security posture. You can't secure something if you don't know it exists in the first place. Using hardened Terraform modules enables the creation of opinionated infrastructure configurations that can be reused for different applications, reducing duplicated effort and improving security. Workspaces can be used to extend this pattern further, isolating environment-specific resources into their own contexts. Terraform also has a robust ecosystem of third-party modules; it's possible your team can have a nearly complete implementation just by grabbing something off the shelf.
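A small sketch of how these pieces can fit together: a community module from the public Terraform Registry supplies the network, and the selected workspace picks the environment-specific values. The `shop` name, CIDR ranges, and availability zones are placeholders, and the version constraint is an assumption to be checked against the module's current releases.

```hcl
locals {
  # The selected workspace doubles as the environment name.
  environment = terraform.workspace

  # Per-environment settings; the values are illustrative. A workspace
  # missing from this map (including "default") fails the lookup below.
  vpc_cidr_by_env = {
    staging = "10.10.0.0/16"
    prod    = "10.20.0.0/16"
  }

  vpc_cidr = local.vpc_cidr_by_env[local.environment]
}

# Off-the-shelf VPC module from the public registry.
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # assumed constraint

  name = "shop-${local.environment}"
  cidr = local.vpc_cidr

  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = [cidrsubnet(local.vpc_cidr, 8, 1), cidrsubnet(local.vpc_cidr, 8, 2)]
  public_subnets  = [cidrsubnet(local.vpc_cidr, 8, 101), cidrsubnet(local.vpc_cidr, 8, 102)]

  tags = {
    Environment = local.environment
  }
}
```

Running `terraform workspace select staging` or `terraform workspace select prod` before planning then targets a separate state for each environment from the same code.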

Not Leveraging CI/CD Automation

Continuous Integration/Continuous Delivery (CI/CD) pipelines are the foundational pillar upon which modern software delivery automation is built. They enable software to be checked in, tested, integrated, and deployed faster and with fewer errors. Terraform code should be treated like application code: checked into version control, linted, tested, and deployed automatically.

Deploying Terraform infrastructure from a single workstation is possible, and it's usually the first usage pattern developers and engineers employ when learning Terraform. However, single-workstation deploys are not suitable for large-scale application environments with production deployments. Terraform provides several features that are meant for multi-user and automated environments, including non-interactive command-line flags (such as `-input=false`), remote state management, and state locking. Laptop deploys don’t scale in team environments: they represent a single point of failure (SPOF) in infrastructure engineering and offer no audit trail.
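For illustration, a remote backend with locking might look like the sketch below, followed (in comments) by the kind of non-interactive commands a pipeline could run. The bucket, key, and table names are hypothetical, and the S3 backend's locking options differ between Terraform releases, so check the backend documentation for the version in use.

```hcl
terraform {
  # State is stored remotely so no single laptop owns it.
  backend "s3" {
    bucket         = "example-terraform-state"     # hypothetical bucket
    key            = "app-stack/terraform.tfstate" # hypothetical state path
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "example-terraform-locks" # hypothetical lock table
  }
}

# A pipeline would typically run Terraform non-interactively, for example:
#
#   terraform fmt -check
#   terraform init -input=false
#   terraform validate
#   terraform plan -input=false -out=tfplan
#   terraform apply -input=false tfplan
#
# Applying the saved plan file means the reviewed plan is exactly what runs.
```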

Not Using Policy Engines and Testing to Enforce Security

Policy engines like OPA and Sentinel can enforce security standards, such as preventing public S3 bucket configurations or roles with overly broad permissions. However, they depend on automation to be applied properly at scale. IAM solves the problem of how systems talk to each other while limiting access to only what's needed, but IAM alone isn’t sufficient for automation. Organizations that don't implement policy engines to check infrastructure configuration are often left blind to insecure or non-standard infrastructure being deployed. They depend on time-consuming and error-prone manual review. Policies provide the necessary guardrails to enable teams to become autonomous.
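OPA and Sentinel policies are written in their own languages and evaluated outside the configuration. As a complementary guardrail, and not a substitute for a policy engine, some of the same intent can be expressed in Terraform itself. This sketch uses hypothetical bucket and variable names to show an input validation that rejects public ACLs and an explicit public access block.

```hcl
variable "bucket_acl" {
  type    = string
  default = "private"

  # Fail at plan time if a caller asks for a public canned ACL.
  validation {
    condition     = !contains(["public-read", "public-read-write"], var.bucket_acl)
    error_message = "Public bucket ACLs are not allowed."
  }
}

resource "aws_s3_bucket" "logs" {
  bucket = "example-app-logs" # hypothetical bucket name
}

# Block every form of public access on the bucket.
resource "aws_s3_bucket_public_access_block" "logs" {
  bucket = aws_s3_bucket.logs.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

In-configuration checks like this only cover code that opts into them, which is why an external policy engine run in the pipeline is still needed to catch everything else.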

Operational Failures

Operational failures can significantly hinder the success of Terraform-based cloud infrastructure projects. These types of failures are common not just in infrastructure but in all types of software engineering and application development: organizations tend to neglect soft, non-development initiatives like consistent documentation and maintenance of existing systems.

Not Building a Knowledge Foundation

Engineering organizations often don't devote the time or resources to building a solid knowledge foundation. Documentation, architecture drawings, architectural decision records (ADRs), code pairing, and training are all needed to help ensure continuity and project health.

The consequences of not making this critical investment won't necessarily manifest right away: the first-generation engineers to work on the project will have the most relevant context and freshest memory when confronting issues. However, what happens when staff inevitably turns over? Without the context behind important implementation decisions and a written record of implicit, accumulated knowledge, project continuity and the overall sustainability of the infrastructure will suffer. Documentation also provides valuable information for stakeholders and non-team members, allowing them to onboard to a shared understanding without taking up the valuable time of project engineers.

Re-inventing the Wheel: Part 2

Without a solid knowledge foundation, new engineers and project maintainers will be tempted to start from scratch rather than take the time to understand and ultimately fix existing implementations. Starting a new implementation over an existing, unmaintained one leads to a “Now you have two problems” situation.

Facilitating knowledge transfer should be one of the primary goals of a knowledge base. Documentation helps transfer knowledge between team members, departments, or organizations. It provides a reference for future developers who may need to work on the system, reducing the risk of knowledge loss and ensuring the project remains maintainable and scalable.

Focusing on the New Without Paying Down Technical Debt

Once a project is shipped, it is quickly forgotten, and all design and development effort is focused on the next new project. No engineer wants to get stuck with the maintenance of old projects. Over time, this leads to the accumulation of tech debt. In the case of Terraform, where new releases of the core tool and its providers arrive several times per year and sometimes include breaking changes, this means older infrastructure configurations become brittle and locked to out-of-date versions.
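Explicit version constraints don't remove this maintenance work, but they make it visible and deliberate. In the minimal sketch below, the specific version numbers are assumptions; the point is that each upgrade becomes a small, reviewable change instead of a surprise.

```hcl
terraform {
  # Accept new 1.x minor releases from 1.5 onward, but not a future 2.0.
  required_version = "~> 1.5"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed constraint; raise it as part of routine upkeep
    }
  }
}
```

Committing the generated `.terraform.lock.hcl` file alongside this records the exact provider versions selected, so every engineer and pipeline run uses the same ones until someone intentionally upgrades.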

Organizations that want to fix this need to approach the problem in a cultural context; only rewarding new feature delivery is a sure way to end up with a lot of needless greenfield development work. Creating a technical culture that rewards craftsmanship and maintenance as much as building new projects will lead to better, more secure applications and a better overall engineering culture.

Getting Terraform Right Means Leveraging the Right Resources

Avoiding the failures described in this article isn't strictly a technical exercise; fostering a good engineering culture is essential. This involves making pragmatic and forward-looking design choices that can adapt to future requirements. Additionally, embracing Terraform's robust ecosystem of existing resources while avoiding the push to build everything internally can significantly streamline processes and prevent re-inventing the wheel. Organizations that commit to these objectives will reap the long-term rewards of high-performing infrastructure and empowered engineering teams.