Here's the recording from our DevOps “Office Hours” session on 2020-02-21.

We hold public “Office Hours” every Wednesday at 11:30am PST to answer questions on all things DevOps/Terraform/Kubernetes/CICD related.

These “lunch & learn” style sessions are totally free and really just an opportunity to talk shop, ask questions and get answers.

Register here: cloudposse.com/office-hours

Basically, these sessions are an opportunity to get a free weekly consultation with Cloud Posse where you can literally “ask me anything” (AMA). Since we're all engineers, this also helps us better understand the challenges our users have so we can better focus on solving the real problems you have and address the problems/gaps in our tools.

Machine Generated Transcript

Let's get the show started.

Welcome to Office hours.

It's February 19, 2020.

My name is Eric Osterman and I'll be leading the conversation.

I'm the CEO and founder of Cloud Posse.

We are a DevOps accelerator.

We help startups own their infrastructure in record time by building it for you and then showing you the ropes.

For those of you new to the call the format is very informal.

My goal is to get your questions answered.

So feel free to unmute yourself at any time if you want to jump in and participate.

If you're tuning in from our podcast or YouTube channel, you can register for these live and interactive sessions by going to cloudposse.com/office-hours.

We host these calls every week. We automatically post a recording of this session to the office-hours channel, as well as follow up with an email, so you can share it with your team.

If you want to share something in private, just ask, and we can temporarily suspend the recording.

With that said, let's kick it off.

So I've got a couple of talking points lined up for today, if there aren't any other questions.

First, some practical recommendations for which apps to move to Kubernetes first. It's a checklist I put together because it comes up time and time again when we're working with customers.

So I want to share that with you guys and get your feedback. Did I miss anything? Is anything unclear? That would be helpful.

Also, John on the call has a demo: a nice little productivity hack for multi-line editing in VS Code.

I've already seen it, and it's pretty sweet, so I want to make sure you all have that in your back pocket.

Also, Zach shared something interesting today that I hadn't seen before, though it's been around for a while.

It's called Goldilocks, and it's a clever way of figuring out how to right-size your Kubernetes pods.

So we'll talk about that a little bit.

One item that's always on the list, but that we never seem to get to, is our DevOps interview questions.

There was a valuable conversation about that as well.

All right, so before we get into any of these talking points, I just want to cover any questions you might have about cloud technology or DevOps in general.

I've got a question.

All right.

Fire away.

In a scenario where you have multiple clusters, you may not be using the same versions across them. Let's say you're managing them for clients.

How do you deal with the versions of kubectl, being able to switch between cluster versions, and so on?

Are there strategies out there that you can actually use?

Yeah, I'll be happy to help answer that. Before I do, does anybody have any tips on how they do it?

All right.

So here's what we've been doing, because this came up recently for exactly the use case you have.

Dale, I know you're already very familiar with Geodesic and what we do with that.

That solves one kind of problem, but it doesn't solve all the problems.

For example, like you said, you need different versions of kubectl.

Well, OK. About kubectl, I want to mention one specific nuance: it's designed to be backwards compatible with, I believe, the last three minor releases or something.

So there's generally no harm in keeping it upgraded, but that still might not be the answer you're looking for.

We had to address this situation separately, so here's what we do under cloudposse/packages, which is on GitHub.

In cloudposse/packages we distribute a few versions of kubectl, pinned at a few minor releases.

I think we only have the latest three versions, because we only started doing this relatively recently.

But the nuance of what we're doing with our packages is that we use the alternatives system that Debian originally came up with.

It's also supported on Alpine.

So basically, you can install all three versions of the package and then point the alternative at the version you want to use to run those commands.

That's one option, and it requires a little bit of familiarity with the alternatives system and how to switch between versions.

I think there's actually a menu now where you can choose which one you want.
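The alternatives approach is easier to picture with a toy model. As I understand it, Debian's update-alternatives makes the command name a symlink, and selecting a version just re-points that link. The Python sketch below mimics only that core idea inside a temporary directory; the kubectl-1.13/1.14/1.15 file names are hypothetical, and the real tool also tracks priorities and an extra level of indirection through /etc/alternatives.

```python
import os
import tempfile
from pathlib import Path


def select_alternative(link: Path, target: Path) -> None:
    """Re-point the command symlink `link` at the installed binary `target`.

    Sketch of the idea behind an alternatives system: the command you run
    is a symlink, and switching versions swaps what it points to.
    """
    if not target.exists():
        raise FileNotFoundError(f"version not installed: {target}")
    tmp = link.with_name(link.name + ".new")
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()
    tmp.symlink_to(target)
    # os.replace renames over the old link atomically on POSIX systems,
    # so there is never a moment where `link` is missing.
    os.replace(tmp, link)


def current_alternative(link: Path) -> str:
    """Return the file name the command symlink currently points at."""
    return Path(os.readlink(link)).name


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        # Hypothetical pinned installs, one per minor version.
        for version in ("1.13", "1.14", "1.15"):
            (root / f"kubectl-{version}").write_text(f"kubectl {version}\n")
        link = root / "kubectl"
        select_alternative(link, root / "kubectl-1.15")
        print(current_alternative(link))  # kubectl-1.15
        select_alternative(link, root / "kubectl-1.13")
        print(current_alternative(link))  # kubectl-1.13
```

Swapping a symlink keeps every caller (Makefiles, scripts) running plain kubectl, which is the property the speaker likes about this approach.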

But the other option, which we use for Terraform, is for when you have a bunch of 0.11-compatible projects and some 0.12-compatible projects, and you don't want to be switching between alternatives to run them.

What we did there was install them into two different paths on the system.

So, like, /usr/local/terraform/0.11/bin is where we stick the Terraform binary for 0.11.

And then /usr/local/terraform/0.12/bin/terraform would be the binary for 0.12.

So just by changing the search path based on your working directory, you can use whichever version of Terraform you want without changing any Makefiles or any tooling that expects it to just be terraform in your search path.
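The search-path approach can be sketched the same way. This Python example assumes the /usr/local/terraform/<version>/bin layout described above; the pins mapping from project directory to Terraform version is a hypothetical stand-in for however you decide which version a working directory needs.

```python
import os
from pathlib import Path
from typing import Mapping

# Assumed layout, following the paths described above:
#   /usr/local/terraform/0.11/bin/terraform
#   /usr/local/terraform/0.12/bin/terraform
TERRAFORM_ROOT = Path("/usr/local/terraform")


def version_for_dir(cwd: Path, pins: Mapping[str, str], default: str) -> str:
    """Pick a Terraform version from the nearest pinned ancestor directory.

    `pins` is a hypothetical mapping such as {"/work/legacy": "0.11"}.
    """
    for d in (cwd, *cwd.parents):
        if str(d) in pins:
            return pins[str(d)]
    return default


def path_for_terraform(version: str, current_path: str,
                       root: Path = TERRAFORM_ROOT) -> str:
    """Return PATH with the chosen version's bin dir first.

    Other entries under `root` are dropped so a different Terraform
    version can never shadow the one selected.
    """
    wanted = str(root / version / "bin")
    prefix = str(root) + os.sep
    rest = [p for p in current_path.split(os.pathsep)
            if p and not p.startswith(prefix)]
    return os.pathsep.join([wanted] + rest)


if __name__ == "__main__":
    pins = {"/work/legacy": "0.11"}
    version = version_for_dir(Path("/work/legacy/vpc"), pins, default="0.12")
    print(path_for_terraform(version, "/usr/local/terraform/0.12/bin:/usr/bin"))
    # /usr/local/terraform/0.11/bin:/usr/bin
```

A shell hook would export the returned PATH before running make; the Makefile keeps calling plain terraform, which is the point of the design.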

I can dive into either one of those explanations more if you want more details.

Is that along the lines of what you're looking for, or are you looking for something else?

Yeah. Well, before I really got into Geodesic, I came across a tool for Terraform where you can switch between versions pretty easily.

I was hoping there was something similar I hadn't found yet that would do a switch like that.

Gotcha. Yeah, there could be.

I've just seen the Terraform one, like you're describing.

Yeah, I know your question, Dale, was related to kubectl.

But speaking just for me personally, I don't like the env switchers, like the rbenvs and tfenvs of the world, and version managers like that.

But obviously it's a very popular pattern, so somebody else probably has a better answer on that one.

Yeah, OK.

There's one other idea on that. Or let me just clarify why I haven't been fond of purpose-built version managers like that: you need rbenv for Ruby, and then you need a tfenv for Terraform, and then you need one for kubectl, and so on.

What I don't like about it is: where does the madness stop?

Technically we'd need to be versioning all of this stuff, and that's why I don't like those solutions.

That's why I like the alternatives system, because it's built into how the OS package management system works.

Do you foresee taking a similar approach for Terraform version switching in the future?

Well, yes. Sorry, I kind of glossed over it with some hand-waving.

So I go to github.com/cloudposse/packages.

Now, I don't know if you're using Alpine; Alpine has become a little bit less popular lately with all the bad press about performance.

So let's see. If we go into the vendor folder here, we literally have a package for kubectl 1.13, 1.14, and 1.15.

We will continue doing that for other versions, and the way it works with alternatives is basically this.

You don't have to use Alpine to do this; it's supported on other OS distributions too.

But you have to install the alternatives system, and once you have that, you can switch.

OK, so this here shows how to set up the alternatives that you have.

Then there's another command you use to select between the available versions, and once you've installed all the versions, it's very easy to select between them.

I just don't remember what it is offhand.

It feels like a fuzzy-finder thing.

Yeah, I think it uses fzf or whatever that is.

You mean on your screen? You're not sharing it.

Oh, yeah. Thank you, guys.

Public service announcement: I always forget to share my screen, and I talk and wave and point at things like I am.

Yeah, so here in cloudposse/packages under vendor we have all our packages.

Here is where we distribute the minor-pinned versions of kubectl, and we also do the same thing for Terraform.

All right, good question. Anybody else have any? Dale, do you have one more?

If no one else is going to ask.

Also, that just reminded me. It doesn't look like Andrew Roth is on the call.

I bring up this conversation because Andrew has a tool similar to Geodesic that he's calling Dad's Garage, and there was a conversation about it.

I forget what channel it was; release engineering, I think. If you look in the #release-engineering channel, you'll find those conversations.

Yeah, next question, Dale.

So has anyone actually successfully done any multi-arch builds for Docker?

Say that one more time?

Multi-architecture builds.

Oh, interesting. So you're saying, like, Docker for ARM and Docker for AMD64, is that correct?

Yeah, yeah.

I'd be curious about that. No personal experience.

I've been trying to do that kind of build and have been getting frustrated.

Is this for your Raspberry Pi cluster?

Yeah, that's part of it.

I just came across it when I ran into issues with my Raspberry Pi 3 B's, where it's using ARMv7, and a lot of the packages are built to support ARMv8, which is 64-bit; ARMv7 is 32-bit.

I ran into the issue where containers just would not launch at all to begin with.

I figured out the Docker image architecture was the issue: some sources will support it, some will not.
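The ARMv7 versus ARMv8 mismatch comes down to which platform string an image was published for. This Python sketch maps common uname -m values to Docker platform names and builds the comma-separated --platform value that docker buildx build accepts; the machine list is an assumption covering the cases discussed here, and it does not check whether a given upstream image actually publishes that platform.

```python
import platform
from typing import Iterable, Optional

# Common `uname -m` values and the Docker platform strings they map to.
# 32-bit Raspberry Pi OS reports armv7l, which needs linux/arm/v7 images,
# while 64-bit ARM (ARMv8/aarch64) needs linux/arm64 images.
MACHINE_TO_PLATFORM = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",
    "aarch64": "linux/arm64",
    "arm64": "linux/arm64",
    "armv7l": "linux/arm/v7",
    "armv6l": "linux/arm/v6",
}


def docker_platform(machine: Optional[str] = None) -> str:
    """Map a machine type (default: this host's) to a Docker platform."""
    m = (machine or platform.machine()).lower()
    if m not in MACHINE_TO_PLATFORM:
        raise ValueError(f"unrecognized machine type: {m!r}")
    return MACHINE_TO_PLATFORM[m]


def buildx_platform_arg(machines: Iterable[str]) -> str:
    """Build a --platform value for `docker buildx build` covering every
    machine type you need to run on (duplicates removed, order kept)."""
    seen = []
    for m in machines:
        p = docker_platform(m)
        if p not in seen:
            seen.append(p)
    return "--platform=" + ",".join(seen)


if __name__ == "__main__":
    print(docker_platform("armv7l"))  # linux/arm/v7
    print(buildx_platform_arg(["x86_64", "aarch64", "armv7l"]))
    # --platform=linux/amd64,linux/arm64,linux/arm/v7
```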

Unless you're planning to do your own builds and all that.

So I started to dig into it a little bit more.

Sounds like a rabbit hole.

Yes, it was. It certainly was.

Yeah, all this stuff is pretty easy so long as you stay mainstream on architecture, doing what everyone else is doing.

But as soon as you want to make a slight variation, the scope explodes.

Yeah, I'm at a point where I might just upgrade to the Raspberry Pi 4s.

Yeah, might be better to just forget about it.

No, that's right. But I did learn something new, so that helps.

Yeah, yeah. Cool.

Any other questions before we get to a demo?

John, do you want to get your demo set up? Let me know when you're ready.

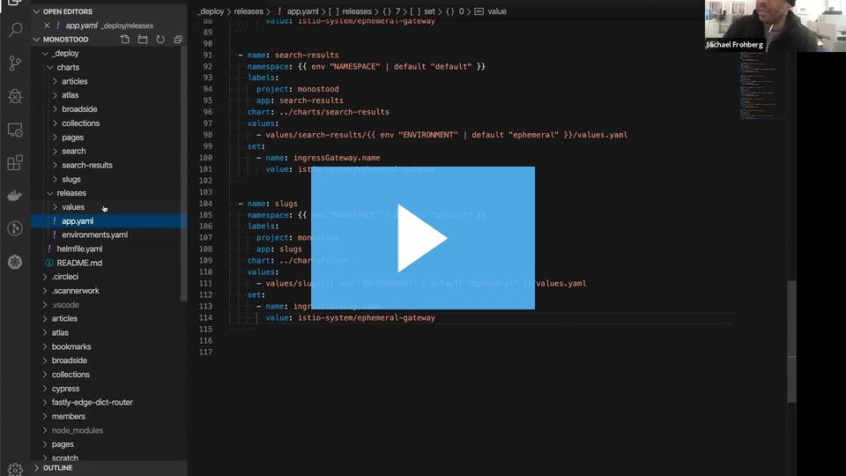

VS Code demo.

I'm ready to roll.

You're ready to roll? OK, let's do it. I'm going to hand over controls. Go ahead.

Oh, OK.

Just a quick introduction for everyone here: John's been a longtime member of SweetOps.

He's hardcore on Terraform, developer hacks, and productivity.

He gave a demo last week that was pretty interesting, so check it out. That was on using Terraform Cloud and the new run triggers available in Terraform Cloud.

So we're having him back on today, and he's going to show a cool little hack for multi-line editing.

I've seen it before and kind of forgot about it.

It's one of those things where you almost think you don't need it, but when you see it, you'll see that you need it and want it.

As a quick follow-up to last week's demo on run triggers: I talked with HashiCorp today, and they are looking at doing a few UI enhancements. As noted, it's a beta right now, so it's not meant for production use.

But we did talk about some use cases and some potential UI improvements and things like that, and they are definitely excited about the feature.

So we'll see some stuff going on there.

But as far as multi-line editing: I'm pretty big on efficiency, and I hate wasting time.

When I was managing a group of developers, I would always be frustrated watching them edit something. I'd tell them, hey guys, if we're doing Terraform, let's say, to keep it relevant, go ahead and add outputs here; let's do an output for all of the available options.

And it would basically go something like this: output something, output... if I could type. Typing is hard. Something else.

So you're just going through and slowly typing all of these outputs, which all basically come from the Terraform documentation.

So I started showing them these little performance hacks to improve on that speed.

What I'll do here is take all of these arguments for EC2, let's say if we were wrapping an EC2 instance and wanted to provide all of these outputs. Right now this is Terraform, but it could be anything.

We have a couple of "Note" sections here that we don't really care about.

So I'm just going to highlight "Note", the colon, and the space, and use these commands to get rid of those.

So now we're basically dealing with just outputs.

Now, the key to multi-line editing is to find something that's common.

In Visual Studio Code, the default shortcut (your shortcuts may be different) to add a single selection is Command+D.

So if I use Command+D on "a", it's going to go through and select the next "a" each time; if I use Command+Shift+L, it's going to select every "a" in the document that I'm currently editing.

So it's very important to find something unique. If I just use "a", you can see here it matches inside things like ebs_optimized, single tenant, and EBS-backed.

That's not really going to give me what I'm looking for. What I'm really looking for is to take the left side of each line as the label and the right side as the description.

I can get a little better by selecting the space around the dashes, but I still end up catching extras: normally the docs just say "Optional", but in this case they wrote "Optional -".

So this one gets caught a little bit off.

So when you can't find something that's unique across all of them, remember that one thing every document has is line breaks.

If you have basically everything on a single line, you can find all the line breaks and just use those.

In this case, I'm going to get rid of these empty lines first so we have a clean structure.

So if I go to the end of the line, press Shift+Right Arrow, then Command+Shift+L, and then go back with the left arrow, I'm basically editing every single line.

Now I'm at the end of all the lines, so I can move around and operate as if I'm on a single line, but I'm editing every line.

If I'm writing an input here, I can just type as I normally would.

At this point I'm writing all of the variables here (I don't remember how many there were, 30 or 40), and now I have all of my descriptions filled out.

Another thing we can do: we know that for all of these we don't want "Optional" in the description, because we're going to output that automatically, so we can just highlight all of the "Optional"s with Command+Shift+L and delete them.

Then we can give each one a default value as well.

The key is moving around with Command to go to the front or back of the line, and Option to move by word instead of by individual characters.

Because if you look at line six here, I'm on "start". But I hit Option+Right Arrow the same number of times, and here I'm in a different position, because the length of the description changes.

So if I need to get to the beginning or to the end, I need to use the Command key to get there quickly.

As you can see, even with all the talking, I've been able to add all of these values pretty quickly, edit them, and get them into shape.

Now I can go in and tweak anything I need to tweak.

There are times, like this one, where you're not going to have as clean a set of data, so you may have to make some manual adjustments to get it cleaned up.
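The same transformation John does by hand in the editor can also be scripted. This Python sketch assumes documentation lines shaped like "name - (Optional) description", skips blank and "Note:" lines, and emits one Terraform variable block per argument, giving optional ones a null default; the sample lines are abbreviated, not copied from the provider docs.

```python
import re
from typing import Iterable, List, Tuple

# "name - description"; only the first " - " after the name splits the line,
# so descriptions that contain dashes (e.g. "EBS-optimized") survive.
ARG_LINE = re.compile(r"^\s*([A-Za-z0-9_]+)\s+-\s+(.*\S)\s*$")
# A leading "(Optional)" or "(Required)" flag, with or without a trailing dash.
FLAG = re.compile(r"^\((Optional|Required)\)\s*-?\s*")


def hcl_escape(text: str) -> str:
    """Escape text for a double-quoted HCL string."""
    text = text.replace("\\", "\\\\").replace('"', '\\"')
    # "${" and "%{" start template sequences in HCL; doubling escapes them.
    return text.replace("${", "$${").replace("%{", "%%{")


def parse_arguments(lines: Iterable[str]) -> List[Tuple[str, str, bool]]:
    """Return (name, description, optional) for each argument line,
    skipping blank lines and "Note:" lines."""
    args = []
    for line in lines:
        if not line.strip() or line.lstrip().startswith("Note:"):
            continue
        m = ARG_LINE.match(line)
        if not m:
            continue
        name, desc = m.groups()
        flag = FLAG.match(desc)
        optional = not (flag and flag.group(1) == "Required")
        if flag:
            desc = desc[flag.end():]  # drop the "(Optional) -" prefix
        args.append((name, desc, optional))
    return args


def to_variable_blocks(args: Iterable[Tuple[str, str, bool]]) -> str:
    """Render one Terraform variable block per argument; optional
    arguments get `default = null` so callers may omit them."""
    blocks = []
    for name, desc, optional in args:
        body = [f'variable "{name}" {{',
                f'  description = "{hcl_escape(desc)}"']
        if optional:
            body.append("  default     = null")
        body.append("}")
        blocks.append("\n".join(body))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    docs = [
        "ami - (Required) The AMI to use for the instance.",
        "",
        "ebs_optimized - (Optional) - If true, the instance will be EBS-optimized.",
        "Note: this line is skipped.",
    ]
    print(to_variable_blocks(parse_arguments(docs)))
```

The editor trick is still faster for a one-off; a script like this pays off when the same kind of docs page is converted repeatedly.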

But that's it. Any questions around that? I'm happy to answer.

I found this to be very important when I started creating modules, especially when I needed to write a whole bunch of outputs or a whole bunch of inputs.

Yeah, that was really helpful.

I've had that problem with outputs, especially when you're writing modules and you want to basically proxy all the outputs from some upstream resource back out of your module.

I think this would save a lot of time.
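For the output-proxying case, a similar Python sketch turns "name - description" lines from an attributes list into output blocks. The resource address aws_instance.default is a hypothetical example, and the regex assumes one attribute per line.

```python
import re
from typing import Iterable, List, Tuple

# "attribute - description", one attribute per line.
ATTR_LINE = re.compile(r"^\s*([A-Za-z0-9_]+)\s+-\s+(.*\S)\s*$")


def parse_attributes(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (name, description) pairs, ignoring lines that don't match."""
    pairs = []
    for line in lines:
        m = ATTR_LINE.match(line)
        if m:
            pairs.append((m.group(1), m.group(2)))
    return pairs


def to_output_blocks(resource: str, attrs: Iterable[Tuple[str, str]]) -> str:
    """Render one output per attribute that proxies `<resource>.<attr>`."""
    blocks = []
    for name, desc in attrs:
        # Escape for a double-quoted HCL string, including template markers.
        safe = desc.replace("\\", "\\\\").replace('"', '\\"')
        safe = safe.replace("${", "$${").replace("%{", "%%{")
        blocks.append(
            f'output "{name}" {{\n'
            f'  description = "{safe}"\n'
            f"  value       = {resource}.{name}\n"
            "}"
        )
    return "\n\n".join(blocks)


if __name__ == "__main__":
    docs = ["id - The instance ID.", "public_ip - The public IP address."]
    print(to_output_blocks("aws_instance.default", parse_attributes(docs)))
```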

Any questions, guys?

That was good. Something I've seen is a pretty common trend: if you want to output multiple attributes of a single resource, people define them individually.

Something I started doing was just outputting the entire resource itself, and letting the end user choose from that map whatever they want to interact with.

So is that a bad practice? I haven't really seen it in public modules.

But for a VPC, I pretty much just output the entire VPC resource, and then you can access it with dot notation, just like you would the actual resource.

Yeah.

So you're on Terraform 0.12, I assume, correct? This was not something we could do in 0.11, so you had to output every single one of these.

I've also switched to doing a little bit more of just returning the resource. It's a lot better; it's a lot easier to manage and maintain on that front.

But I guess there are some cases where you may want to adjust those things. Let's say in a module you have an AWS instance, and you have multiple of those, something like that.

You may not want to output that as a list, and you may be using count just to toggle it. That's where you get into doing these sorts of things to bring them into one output.

So I think there are some cases where you may want some custom outputs. But yes, in general, I do like that you can return the whole resource.

I have another question.

Does anyone know how we can get something like count.index when we create resources with a for_each loop?

There are actually a few ways with a for loop; it kind of depends on your scenario, but there are a few different approaches.

I think the documentation is generally pretty good on that, if I can find it real quick. I always go to a few different spots here. It might be under Expressions.

Finding it is always difficult for me. Does anybody remember where it is?

Search for for_each and count; I think that's how you'd find it.

Yeah, so my question here is: the reason I want to use for_each is that when you're using count and then you modify things, maybe move an index in a list or remove some items, count actually messes up the whole sequence.

If you're using for_each, it creates results for each item separately, so it's keyed by a set of things rather than a list.

But I still want to utilize the index. Say, for example, there's a list with five items in it, and I want to iterate over each index: 0, 1, 2, 3, 4.

I know we were able to do that with count, but I haven't found a way to do something similar with for_each yet.

So I mean, for_each gives you a map of instances.

What you could do, I think, is use the values() function. That will return a list of the values, basically stripping out the keys, and from there you can access elements by index.

Oh, OK.
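Two details matter when following that suggestion. Terraform's values() returns values ordered by their keys lexicographically, so index 0 is the alphabetically first key rather than the first item of the original list. If you need the original position, a common pattern is to build the for_each map from the list with a for expression, { for i, name in var.names : name => i }, so each.value carries the index. The Python sketch below models both behaviors; it illustrates the semantics rather than running Terraform, and the instance IDs are made up.

```python
from typing import Dict, List, Mapping, Sequence, TypeVar

V = TypeVar("V")


def tf_values(m: Mapping[str, V]) -> List[V]:
    """Mimic Terraform's values(): the map's values in lexicographic key
    order. So values(...)[0] is the alphabetically first key's instance,
    not the first item of the list you started from."""
    return [m[k] for k in sorted(m)]


def index_by_key(items: Sequence[str]) -> Dict[str, int]:
    """Mimic `{ for i, name in var.names : name => i }`.

    Used as a for_each map, each.key is the stable name and each.value
    is the original list position, which stands in for count.index.
    """
    return {name: i for i, name in enumerate(items)}


if __name__ == "__main__":
    names = ["web", "api", "db"]  # hypothetical input list
    for key, idx in index_by_key(names).items():
        print(f"each.key={key} each.value={idx}")
    instances = {"web": "i-0003", "api": "i-0001", "db": "i-0002"}
    print(tf_values(instances))  # ['i-0001', 'i-0002', 'i-0003']
```

One difference: Terraform rejects duplicate keys in that for expression, whereas the dict comprehension here would silently keep the last one.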

I think this page here actually goes through it; there was a place where they gave a pretty solid breakdown.

Yeah, right here. I linked to this in the chat, but it's a pretty solid breakdown of when and why you would want to use one over the other, and it shows you when and why you'd want to use count.

It's kind of case by case: you can use count for certain things, but there are certain things you may want to use a for_each loop for. So I would say it really depends.

But I do agree with you that, in essence, I think everybody's used to count from 0.11.

So a lot of the time, when you're transitioning and want to use for_each, but other resources reference the original ones, you get this mismatch.

So where possible, I've been trying to convert from count to for_each and use the keys instead of the indices.

I think the problem occurs when you have previous code from 0.11 where you already have all the logic built around count, and then switching it over is just an extra pain, right?
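Why count causes churn, and what converting to for_each involves, can be modeled in a few lines. With count, instance addresses are list positions, so removing one element shifts every later address; with for_each, addresses are keys. Moving existing instances to their new keys without recreating them is done with terraform state mv. The Python sketch below computes the affected addresses and prints the move commands; aws_instance.web and the item names are hypothetical, and it assumes the current list order still matches the order count used.

```python
import shlex
from typing import Dict, List, Sequence


def count_addresses(resource: str, items: Sequence[str]) -> Dict[str, str]:
    """Map each count-style address, e.g. aws_instance.web[0], to its item."""
    return {f"{resource}[{i}]": item for i, item in enumerate(items)}


def churn_with_count(resource: str, before: Sequence[str],
                     after: Sequence[str]) -> List[str]:
    """Addresses whose item changes or disappears when the list is edited.

    With count, removing an element shifts every later index, so each of
    these addresses ends up bound to a different item (or to nothing).
    """
    old = count_addresses(resource, before)
    new = count_addresses(resource, after)
    return [addr for addr, item in old.items() if new.get(addr) != item]


def state_mv_commands(resource: str, items: Sequence[str]) -> List[str]:
    """Shell commands moving resource[i] to resource["item"] in state.

    Assumes items are in the same order count used when the instances
    were created; compare with `terraform state list` before running.
    """
    return [
        " ".join([
            "terraform", "state", "mv",
            shlex.quote(f"{resource}[{i}]"),
            shlex.quote(f'{resource}["{item}"]'),
        ])
        for i, item in enumerate(items)
    ]


if __name__ == "__main__":
    res = "aws_instance.web"  # hypothetical resource address
    print(churn_with_count(res, ["a", "b", "c"], ["a", "c"]))
    # ['aws_instance.web[1]', 'aws_instance.web[2]']
    for cmd in state_mv_commands(res, ["a", "b", "c"]):
        print(cmd)
```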

Yeah, so for that you need to do a lot of terraform state mv to move those resources and keep the state file graceful.

Another question, and maybe you can answer it since you've done a lot of modules.

I was working on a project setting up the priorities of ALB listener rules, and those priorities are set dynamically.

If, for example, we have a rule that gets deleted, or destroyed and recreated, the next rule takes its priority, and that messes up the whole thing.

How would you go about finding a workaround for that kind of scenario?

I would have to defer that question to Andriy, who's been doing more of the low-level Terraform work on the modules and things like that.

Also, 99 percent of what we do as a company is on Kubernetes, so ALB rules aren't something we deal with; we just use ingress.

I do know that some of this stuff got a lot easier with 0.12, passing maps around.

But I can't give you a helpful answer right now. If you ping Andriy in the #terraform channel (he's @aknysh), he'll be able to answer that.

All right, thanks.

I missed the question. What was the question about?

Yeah, John probably knows the answer.

Yep. So the question is about setting up the priorities of the rules in an application load balancer.

You define priorities on the ALB listener rule Terraform resource, and then if there's an instance where that rule gets force-destroyed because you're changing something, the next rule takes its priority, and the whole sequence gets messed up.

So I was looking for a way where things stay as they are if a listener rule has to be recreated, so the next rule doesn't take its priority.

Yes, you actually can control that priority in the configuration. There's a priority argument.

Yeah, so I am using that. However, if by chance that listener rule needs to get recreated because of a settings change that forces a destroy, then with the default behavior, if the rule is deleted, it actually changes the priority of all the rules that come after it.

So say, for example, the listener rule with priority 5 gets recreated: all the rules after number 5 move up one step, so 6 would become 5, 7 would become 6, and 8 would become 7.
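The renumbering described here matches what happens when a rule's priority is derived from its position in a list (an assumption about the configuration, since the code wasn't shown). This Python sketch models priority = start + index and reports which rules shift when the rule at priority 5 is removed; the rule paths are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def priorities_from_position(paths: Sequence[str],
                             start: int = 1) -> Dict[str, int]:
    """Priority derived from list position: priority = start + index."""
    return {path: start + i for i, path in enumerate(paths)}


def renumbered(before: Dict[str, int],
               after: Dict[str, int]) -> Dict[str, Tuple[int, int]]:
    """Rules that exist in both configs but whose priority changed."""
    return {p: (before[p], after[p])
            for p in before if p in after and before[p] != after[p]}


if __name__ == "__main__":
    paths = [f"/svc{n}/*" for n in range(1, 9)]  # hypothetical rule paths
    before = priorities_from_position(paths)
    after = priorities_from_position([p for p in paths if p != "/svc5/*"])
    for path, (old, new) in renumbered(before, after).items():
        print(f"{path}: {old} -> {new}")
    # /svc6/*: 6 -> 5
    # /svc7/*: 7 -> 6
    # /svc8/*: 8 -> 7
```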

I actually haven't seen that, but that may be because I always use multiples of 10.

I don't number them one by one; I'll do like 1, 10, 20, up to whatever.

Yeah, that may be why I haven't seen it, actually.

But with all the priorities we set, I've never seen them reset once Terraform applied again. They were good to go.

Yeah, the apply is good. But if there's an instance where the resource gets recreated, that's where it starts freaking out.

There is a limit on the number of rules you can have on an ALB, and we're still running a legacy application, the initial application, which required us to have a lot of rules.

That's where I was actually able to replicate this issue.

Interesting. First of all, you wouldn't happen to be using the Cloud Posse terraform-aws-alb-ingress module, are you?

No, this is just a simple setup; it runs on ECS, not Kubernetes.

Well, yeah, that's a little bit of the confusing thing.

When we were designing our AWS ECS modules and our modules for ALB, we took the opinion of making them feel like the Kubernetes interface for ingress.

So we created a module called terraform-aws-alb-ingress, which defines a rule, so that it works similarly to defining an Ingress resource in Kubernetes, even though you're defining a rule for an ALB using, say, ECS.

When you do it this way, you're defining a module explicitly for each rule, and it's been working for us.

But, just like John said, I can't say we've encountered this thing where the priorities get renumbered; that hasn't been reported to us yet.

The other part I wanted to make sure of was that you weren't using something like count together with defining your rules.

So we do have some dynamic parts, which also ties back to the question I asked earlier about using for_each.

Yeah, that makes sense. So you're iterating over a list, and then it starts freaking out.

I think the problem you have is probably more related to the module, or the way you're writing the Terraform, than some fundamental limitation of the ALB resources themselves.

Yeah, because we actually hit an issue where we had to recreate target groups: when switching from one of the Cloud Posse modules, there was a fundamental name change of the target groups.

Sometimes they don't delete properly; they kind of hold on, and you can't delete them because they're still in use.

So we did a lot of manual deleting, just this last week actually, and I never saw priorities change. All the priorities stayed in line.

Yeah, so the target group is key. But priorities only change if you change the path or a condition, something where the listener rule gets recreated.

Yeah, but once you give it a priority number, it should stay there.

Yeah. But I still want to fix the priorities, because those rules are dynamic.

So if you have a path coming from a variable that's a list, and then you add another one, it bumps the new listener rule to the next priority, and that becomes harder to maintain.

Yeah, so maybe look at adjusting your list to use a map or something where you can define a set priority for each entry. That way, when you loop over it, it's the same priority every single time, no matter whether you move it up or down in your list. Make it explicit.
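The explicit-map suggestion, combined with John's habit of spacing priorities by 10, can be sketched as follows. In Terraform terms this would be for_each over a map with priority = each.value; the Python below just models the bookkeeping. The paths are hypothetical, and the range check reflects ALB listener rule limits as I understand them: priorities unique per listener and between 1 and 50000.

```python
from typing import Dict, Mapping

# ALB listener rule priorities: unique per listener, between 1 and 50000.
MIN_PRIORITY, MAX_PRIORITY = 1, 50000


def validate_priorities(rules: Mapping[str, int]) -> None:
    """Raise ValueError for out-of-range or duplicate priorities."""
    seen: Dict[int, str] = {}
    for path, prio in rules.items():
        if not MIN_PRIORITY <= prio <= MAX_PRIORITY:
            raise ValueError(f"{path}: priority {prio} is out of range")
        if prio in seen:
            raise ValueError(f"{path}: priority {prio} already used by {seen[prio]}")
        seen[prio] = path


def priority_between(low: int, high: int) -> int:
    """Pick a free priority strictly between two neighbours.

    Spacing priorities by 10 is what leaves room for this; when the gap is
    gone, renumber explicitly instead of letting list positions decide.
    """
    if high - low < 2:
        raise ValueError(f"no room between {low} and {high}")
    return (low + high) // 2


if __name__ == "__main__":
    # Hypothetical path -> priority map; each rule's priority is pinned.
    rules = {"/api/*": 10, "/admin/*": 20, "/static/*": 30}
    validate_priorities(rules)
    del rules["/admin/*"]  # removing one rule leaves the others untouched
    print(rules)  # {'/api/*': 10, '/static/*': 30}
    rules["/health"] = priority_between(10, 30)
    validate_priorities(rules)
    print(rules["/health"])  # 20
```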

Right, OK.

All right. Are there any other questions?

Hi. I have a question about my use case: I want to generate a report of underutilized and overutilized EC2 instances and automate sending the reports to an email.

If instances are running at less than 10%, I'd like to see that list of instances, and if it's more than 80%, I'd like to see that list too.

So I'm just looking for ideas to achieve this.

Hopefully somebody here has a suggestion for that. We mostly work with infrastructure managed under Kubernetes, so from a reporting perspective we're not really concerned with any one instance in and of itself.

Is anybody familiar with tools to generate the reports he's looking for?

AWS has the Cost and Usage Report; that's kind of built in. I don't know about the emailing requirement, but I know that's in there.

Mm-hmm. You could probably tie it into SES for the email; you could do that for sure. I'll put this in the chat.

It's probably utilization over some period of time, right?

Yeah, some period of time, like a week or a few days.
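One way to build the report asked about here, sketched in Python with boto3: list running instances, pull the AWS/EC2 CPUUtilization metric from CloudWatch over a window, and bucket instances below 10% and above 80%. This assumes AWS credentials and a default region are configured, averages hourly data points, and leaves out the email step, which could hand the report text to SES or SNS. The sample numbers in the demo are made up.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple

LOW_PCT, HIGH_PCT = 10.0, 80.0  # thresholds from the question


def classify(avg_cpu: Dict[str, float], low: float = LOW_PCT,
             high: float = HIGH_PCT) -> Tuple[List[str], List[str]]:
    """Split instance IDs into (under-utilized, over-utilized)."""
    under = sorted(i for i, v in avg_cpu.items() if v < low)
    over = sorted(i for i, v in avg_cpu.items() if v > high)
    return under, over


def format_report(avg_cpu: Dict[str, float], low: float = LOW_PCT,
                  high: float = HIGH_PCT) -> str:
    """Plain-text report body suitable for an email."""
    under, over = classify(avg_cpu, low, high)
    lines = [f"Under {low:g}% average CPU:"]
    lines += [f"  {i}  {avg_cpu[i]:.1f}%" for i in under] or ["  (none)"]
    lines.append(f"Over {high:g}% average CPU:")
    lines += [f"  {i}  {avg_cpu[i]:.1f}%" for i in over] or ["  (none)"]
    return "\n".join(lines)


def fetch_avg_cpu(days: int = 7, region: Optional[str] = None) -> Dict[str, float]:
    """Mean of hourly CPUUtilization averages per running instance."""
    import boto3  # imported here so the pure functions work without boto3

    ec2 = boto3.client("ec2", region_name=region)
    cloudwatch = boto3.client("cloudwatch", region_name=region)
    end = datetime.now(timezone.utc)
    start = end - timedelta(days=days)
    averages: Dict[str, float] = {}
    pages = ec2.get_paginator("describe_instances").paginate(
        Filters=[{"Name": "instance-state-name", "Values": ["running"]}])
    for page in pages:
        for reservation in page["Reservations"]:
            for instance in reservation["Instances"]:
                instance_id = instance["InstanceId"]
                # Hourly points: 7 days is 168, well under the per-call cap.
                stats = cloudwatch.get_metric_statistics(
                    Namespace="AWS/EC2",
                    MetricName="CPUUtilization",
                    Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
                    StartTime=start,
                    EndTime=end,
                    Period=3600,
                    Statistics=["Average"],
                )
                points = stats["Datapoints"]
                if points:
                    averages[instance_id] = (
                        sum(p["Average"] for p in points) / len(points))
    return averages


if __name__ == "__main__":
    # Offline demo with made-up numbers; use fetch_avg_cpu() for real data.
    sample = {"i-aaa": 4.2, "i-bbb": 55.0, "i-ccc": 91.7}
    print(format_report(sample))
```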

Yeah, if something else comes to mind, feel free to share it either in this chat here or in the office-hours channel.

There's also, I mean, on top of that, the Trusted Advisor stuff, which will make similar kinds of recommendations.

And then I think there are SaaS services as well, but I don't think that's necessarily what you're looking for.

There's a SaaS for everything, right? Totally outsourced.

Yeah. Any other questions?

All right.

Well, let's cover a couple of the talking points that came up.

I definitely recognize a few people on the call here using Kubernetes a lot, so I think Goldilocks will be a welcome utility. I only learned of it today, and what I thought was interesting was how they went about implementing it.

Behind the scenes, it's using the Vertical Pod Autoscaler in recommendation-only mode, kind of like a debug mode, so it's not actually autoscaling your pods, but it's making recommendations for what your pods' resources should be.

Then they slap a UI on that, and here's what you get.

And here's what you get.

So here's why I think this is really valuable. If you saw the viral video that went around this past week, they dubbed that famous scene of Hitler reprimanding his troops into a parody about running Kubernetes in production without setting your limits, and how asinine that is.

Unfortunately, it's a pretty common thing. So helping your developers and your teams know what the appropriate limits for your pods should be is important, and this is a tool to make that easier.

Just building on that:

When I joined this team, that was an issue as well. I guess at the time we were getting into Kubernetes at an early stage, and the experience wasn't there yet.

Over time we found a lot of evictions going on within the cluster, because we didn't have any resource limits set for these things.

So we actually had to go through the process of evaluating them, which is why this kind of tool would really help; I didn't know it existed until this question came up.

We actually implemented Datadog to give us some color on what was going on, and then started sending more traffic to see where the breaking points were to determine that.

So I figure everyone has their own methodology for determining what those resource limits are going to be.

I saw a question in Slack where someone posted an equation for how to determine the ratio of limits, and it was this whole thing, so I'm looking forward to using this tool to make that determination as well.

Yeah, I think that's basically what everyone's been doing: looking at either Prometheus, Grafana, or Datadog and determining those limits, which is fine.

What I like about this is that it dumbs it down to the point where you can literally copy and paste this and stick it in your Helm values, or if you're using raw resources, stick it in there, and you're good to go.
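For comparison, here is roughly what the manual approach looks like: a minimal Python sketch, assuming you've already exported CPU (millicores) and memory (MiB) samples for one container from Prometheus, Grafana, or Datadog. The "90th percentile plus 20% headroom for requests, peak plus headroom for limits" rule is a hypothetical heuristic chosen for illustration, not the algorithm Goldilocks or the Vertical Pod Autoscaler uses. The returned dict mirrors the shape of a `resources:` block in Helm values.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[int], pct: float) -> int:
    """Nearest-rank percentile of a non-empty sequence of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def with_headroom(value: int, headroom_pct: int) -> int:
    # Integer ceiling division so results stay whole millicores / MiB.
    return -(-value * (100 + headroom_pct) // 100)


def suggest_resources(
    cpu_millicores: Sequence[int],
    memory_mib: Sequence[int],
    headroom_pct: int = 20,
) -> Dict[str, Dict[str, str]]:
    """Hypothetical heuristic: requests from p90 usage, limits from peak usage."""
    cpu_req = with_headroom(percentile(cpu_millicores, 90), headroom_pct)
    mem_req = with_headroom(percentile(memory_mib, 90), headroom_pct)
    cpu_lim = with_headroom(max(cpu_millicores), headroom_pct)
    mem_lim = with_headroom(max(memory_mib), headroom_pct)
    return {
        "requests": {"cpu": f"{cpu_req}m", "memory": f"{mem_req}Mi"},
        "limits": {"cpu": f"{cpu_lim}m", "memory": f"{mem_lim}Mi"},
    }
```

The point of a tool like Goldilocks is that you don't have to pick and maintain a heuristic like this yourself.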

There are a couple of other tools I've seen specifically for this.

The concept here is right-sizing your pods.

If you're using Ocean by Spotinst, Ocean is a controller for Kubernetes that right-sizes your nodes.

Ocean also provides a dashboard to help you right-size the workloads, the pods in there.

So that's one option.

The other option is Kubecost.

Kubecost is an open-core kind of product that will make recommendations similar to AWS Trusted Advisor,

but for Kubernetes, and it also gives suggestions on how to right-size your pods.

All right, let's see what else I added here.

I asked for some feedback and got a lot of great conversation going in the general channel yesterday related to this, if you want to check it out.

We're working on an engagement right now, and the customer asked what kind of apps they should tackle first when migrating to Kubernetes.

It's a common question that we cover in all our engagements.

So I thought I'd whip up a list distilling the characteristics of the ideal app.

I like to say that pretty much anything can run on Kubernetes; the constructs and primitives are there to, so to speak, lift and shift traditional workloads.

But traditional workloads aren't going to get the maximum benefit inside of Kubernetes, like the ability to work with the Horizontal Pod Autoscaler and use Deployments and stuff like that.

So here are some considerations that I jotted down using the twelve-factor app as the pattern.

So, the twelve-factor app.

I'm sure if you've been working with this stuff for some time,

you've seen these recommendations before.

It looks something like this.

In my mind, they're slightly dated in their terminology as it relates to Kubernetes, and it's a little bit academic.

So let's look at the explanations for how these things work.

So OK.

First of all, this is very opinionated, talking specifically about Ruby and using Gemfiles.

But let's generalize that, right?

The concept here, if we're talking about Kubernetes, is that we still want to pin our software releases.

But we can also generalize that and say: ship a Dockerfile that has all your dependencies therein, and pin your releases.

So that's what I've done in this link here.

It's a checklist.

If you can go through and check off all of these for an app,

it's a perfect candidate to tackle first in terms of migration.

I like to say move your easiest apps first.

Don't move your hardest apps until you've built up all that operational competence.

So, working down the list here.

Let's see here.

This one is a little bit opinionated,

but we really feel like polyrepos are so much easier to work with and to deploy with pipelines to Kubernetes.

So that's why we recommend working that way, obviously using Git-based workflows.

That means you have a pull request review and approval process,

so that nobody is editing master directly.

Believe it or not, some companies do that.

We don't want that.

And then automated tests.

If we want to have any type of continuous delivery,

we need to make sure we have foundational tests.

Moving on to dependencies: the things your services depend on should be explicit.

One thing that we see far too often is hard-coded host names in source code.

That's really bad.

We've got to get those extracted, either into a configuration file or, ideally, into environment variables. I like environment variables because they're a first-class citizen in Kubernetes.
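A minimal sketch of pulling a dependency's location out of source code and into environment variables. `DATABASE_HOST` and `DATABASE_PORT` are hypothetical names, and the 5432 default is just an example; in Kubernetes these would typically be set in the Deployment spec or from a ConfigMap.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    database_host: str
    database_port: int


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read dependency locations from the environment instead of hard-coding them."""
    env = os.environ if env is None else env
    host = env.get("DATABASE_HOST")
    if not host:
        # Fail with a clear message rather than silently falling back
        # to a hard-coded host name.
        raise RuntimeError("DATABASE_HOST must be set")
    port = int(env.get("DATABASE_PORT", "5432"))
    return Settings(database_host=host, database_port=port)
```

Passing `env` explicitly keeps the function easy to test; in the running container you'd call `load_settings()` with no arguments.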

And they're very easy to set. Next: services being loosely coupled.

This means your services can start in any order.

Your API service must be able to start even if your database isn't yet online.

A frequent older pattern is that if the API can't connect to the database,

the API just exits and crashes.

This can create a startup storm where your processes are constantly in a crash loop, and things only start to settle once the backoffs kick in and your applications stop thrashing so much.
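One way to keep an API process up while its database is still coming online is to retry the connection in-process instead of exiting. This is a minimal sketch, assuming a `connect` callable that raises `ConnectionError` while the database isn't reachable yet; the delay values are arbitrary, and random jitter is added so many pods don't retry in lockstep. In Kubernetes you'd usually pair this with a readiness probe so traffic isn't routed to the pod until the connection succeeds.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_backoff(
    connect: Callable[[], T],
    max_attempts: int = 10,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `connect` until it succeeds, backing off exponentially between tries."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up; let the orchestrator decide what happens next
            # Cap the exponential delay, then pick a random point below it
            # ("full jitter") so many pods don't hammer the database together.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the backoff can be observed in tests without actually waiting.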

So would that be more on the development team to ensure that kind of behavior?

Yeah. The idea here is that this is not a hard requirements list.

But if one of these jogs a memory,

like, "yeah, that's how our application works," then what we ask is that they change the way their application works

so that it doesn't have these limitations.

I'm actually working with a client who, it seems, got wind of your list but took it the opposite way.

Hopefully not on all the points, but most of them.

Oh no.

In fact, they're still using confusion.

Oh stop right there.

Take me back there.

Yeah Yeah.

Yeah Yeah.

So it sounds like you have a big project ahead of you, which might involve changing some of the engineering norms they've adopted over the years. We've been pretty lucky that for most of our customers, a lot of this stuff is intuitive, or it's stuff they've already been practicing.

But then there would be one or two things here that trip them up. The one thing that we get bitten by all the time is that their application might be talking to S3, and it has some custom configuration for getting the access key and the secret access key.

That precludes us from using all the awesomeness of the AWS SDK for automatically discovering these credentials if you set up the environment correctly.

So having them undo those kinds of things would be another example.

My main point in going over these things is to get reactions: whether anybody is totally against some of these recommendations, whether any of them are controversial, or whether there's anything I've missed.

So please chime in if that's the case.

Backing services: ideal services are totally stateless, meaning you can offload durability to some other service, hopefully one that's not running inside of Kubernetes.

Of course, that's not saying Kubernetes is not good for running stateful services.

It is; it's just a much larger scope, right?

Managing a database inside of Kubernetes versus using RDS. Let's see here.

Anything else to call out?

This is a common thing:

applications don't properly handle SIGTERM.

Your apps should exit gracefully when they receive it.

Some apps just ignore it.

And then what happens is Kubernetes waits until the grace period expires and then SIGKILLs your process the hard way, which we want to avoid.
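A minimal Python sketch of handling SIGTERM gracefully: the handler only sets a flag, and the main loop finishes its current unit of work, cleans up, and returns. `process_one` is a hypothetical stand-in for whatever your app does per iteration. Kubernetes' default grace period (`terminationGracePeriodSeconds`) is 30 seconds, so clean-up needs to fit inside that window or the process gets SIGKILLed anyway.

```python
import signal
import threading
from typing import Callable

# Set by the signal handler, checked by the main loop.
shutdown_requested = threading.Event()


def _handle_sigterm(signum, frame) -> None:
    # Don't exit here: just ask the main loop to stop after the current
    # unit of work so in-flight requests can complete.
    shutdown_requested.set()


def serve_forever(process_one: Callable[[], None]) -> int:
    """Run `process_one` until SIGTERM arrives; return how many units ran."""
    # Must be called from the main thread (a Python signal module rule).
    signal.signal(signal.SIGTERM, _handle_sigterm)
    handled = 0
    while not shutdown_requested.is_set():
        process_one()
        handled += 1
    # Clean-up goes here: flush buffers, close DB pools, and so on.
    return handled
```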

Sticky sessions.

Let's get rid of those.

Oh, yes.

And this one here.

This is it.

This is surprisingly common, actually.

So we're all for feature flags.

We recommend feature flags all the time.

They can be implemented in different ways using environment variables is an awesome way of doing it.

But basing your feature flags on the environment or stage you're operating in is a shortcut, and not really an effective use of feature flags, because you can't test that functionality.

You shouldn't be changing the environment in staging to test the feature.

You should be enabling or disabling the feature itself.
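A minimal sketch of the difference, assuming flags are read from environment variables named `FEATURE_<NAME>` (a hypothetical convention) and that `STAGE` holds the environment name. The first function ties the feature to the stage; the second lets staging and production run the same code path with the feature switched on or off independently.

```python
import os
from typing import Mapping, Optional

TRUTHY = {"1", "true", "yes", "on"}


def flag_enabled(name: str, env: Optional[Mapping[str, str]] = None) -> bool:
    """True if FEATURE_<NAME> is set to a truthy value (hypothetical convention)."""
    env = os.environ if env is None else env
    return env.get(f"FEATURE_{name.upper()}", "false").strip().lower() in TRUTHY


def checkout_version_by_stage(env: Mapping[str, str]) -> str:
    # Anti-pattern: behavior keyed off the stage name, so testing the new
    # path in staging means pretending staging is production.
    return "new" if env.get("STAGE") == "prod" else "old"


def checkout_version(env: Mapping[str, str]) -> str:
    # Better: the stage is irrelevant; only the flag decides.
    return "new" if flag_enabled("new_checkout", env) else "old"
```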

So that's a pet peeve of mine. And related to that,

it's also about not using hard-coded host names, or even configurable host names, in your applications.

If you're running Kubernetes, that's really the job of your ingress, and your application should not be aware of it or even care.

Exactly. Yeah.

Alex Siegmund posts in chat here:

I see you mentioned in your findings that you should listen on non-privileged ports.

But what's the harm in having your application bind to port 80, for example, if it's a website?

The harm is really this:

by default, the only way your app can listen on port 80 inside the container is if it starts as root.

And that contradicts the recommendation that you should run non-privileged processes.

You should not be running your processes as root.

Yes, your container can run as root.

Your application can start up as root.

It can bind to the port as root and then drop permissions.

But so often things do not drop permissions, and then you'll have all these services unnecessarily running as root,

just for the vanity of the service running on a classic port like 80. Is it really required?

It's not.

Exactly. So Alex says, "Aha."

So that's why you should run as non-root inside of the container.

Exactly. So that's our point there.
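A minimal sketch of the non-root approach: the app listens on an unprivileged port (8080 by default here, read from a hypothetical `PORT` variable) and refuses privileged ports outright. In Kubernetes, a Service can still expose port 80 to clients by setting `port: 80` and `targetPort: 8080`, so nothing in the container needs root.

```python
import os
import socket
from typing import Mapping, Optional


def listening_socket(env: Optional[Mapping[str, str]] = None) -> socket.socket:
    """Open a TCP listener on an unprivileged port taken from PORT (default 8080)."""
    env = os.environ if env is None else env
    port = int(env.get("PORT", "8080"))  # 0 lets the OS pick a free port
    if 0 < port < 1024:
        # Ports below 1024 normally require root (or CAP_NET_BIND_SERVICE);
        # let the Service or ingress map port 80 to this port instead.
        raise ValueError(f"refusing privileged port {port}; use 1024 or above")
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("0.0.0.0", port))
    sock.listen()
    return sock
```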

I think a lot of times what I see is that when the Dockerfile gets created, it works as root.

No one goes back and says, "All right, let me have it run as an unprivileged user"

so that it actually works that way.

Or they hit a few roadblocks and don't get it to work,

probably just because of a lack of knowledge as to how to do that.

And then they say, "Hey, you know, it works."

Let's just leave it.

And then it just goes out there.

And then something happens, and that pattern gets replicated, right? Because somebody says, "Oh, how do I deploy a new service?"

"Oh, just go look at this other project."

And then they copy paste that stuff over and then it replicates and quickly becomes the norm in the organization.

Yeah Yeah.

So logs.

This is a nice-to-have.

I mean, it's not a hard requirement.

But it really does make a difference.

Once you have all your logs centralized in a system like Kibana, or any of the modern log systems like Sumo Logic or Splunk, if your logs are structured you're going to be able to develop much more powerful reports.

And if you're using almost any language or framework these days, a lot of them support changing the way your events are emitted.

So there's no reason not to.

The most important thing is just don't write logs to disk, because we don't want to have to start doing log rotation, and if quotas aren't set up correctly, which they usually aren't,

you can fill up the disk on the host OS.

So let's not do that.
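A minimal sketch of structured logging with Python's standard `logging` module: every record is written to stdout as one JSON object per line, so the container runtime collects it and nothing is written to local disk. Passing extra fields through `extra={"fields": {...}}` is a convention chosen for this example, not a standard.

```python
import json
import logging
import sys
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}}).
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            payload.update(fields)
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def make_logger(name: str = "app", stream: TextIO = sys.stdout) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)  # stdout, never a file on disk
    handler.setFormatter(JsonFormatter())
    logger.handlers[:] = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Usage: `make_logger().info("order placed", extra={"fields": {"order_id": 42}})` emits a line your log system can index on `order_id`.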

Lastly, this is another common problem

I see: migrations run as part of the startup process when the app starts up.

If you have a distributed environment running 25, 30, 100 pods or whatever, you don't want all of them trying to run a migration. You want that to be an explicit, separate step, possibly run as an upgrade step in your Helm release or as a step in your continuous delivery pipeline.

So making sure you can run your database migrations as a separate container, or as a separate entry point when you start that container, is important.
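A minimal sketch of a single image with separate entry points, so migrations are never a side effect of starting the web server. The `serve` and `run_migrations` functions are hypothetical placeholders. With this layout, a Deployment would run the `serve` command while a Kubernetes Job (or a Helm `pre-upgrade` hook) runs `migrate` once per release.

```python
import argparse
from typing import Callable, Dict, Optional, Sequence


def run_migrations() -> int:
    # Hypothetical placeholder: invoke your real migration tool here.
    print("applying migrations")
    return 0


def serve() -> int:
    # Hypothetical placeholder: start the web server. No migrations here.
    print("starting server")
    return 0


COMMANDS: Dict[str, Callable[[], int]] = {"serve": serve, "migrate": run_migrations}


def main(argv: Optional[Sequence[str]] = None) -> int:
    """Dispatch to the requested entry point, e.g. `migrate` or `serve`."""
    parser = argparse.ArgumentParser(prog="app")
    parser.add_argument("command", choices=sorted(COMMANDS))
    args = parser.parse_args(argv)
    return COMMANDS[args.command]()
```

Your container's entrypoint script would call `main()` and exit with its return code; the command (`serve` or `migrate`) is then just an argument in the pod spec.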

Same thing with cron jobs.

We know those can be run in a specific way under Kubernetes.

So it would be nice if those are separate containers, and any sort of batch processing as well.

So the basic idea is that these can run as Jobs, while everything else should be Deployments, StatefulSets, or other primitives.

A recommendation: register a domain.

Put this under it.

Oh, register the domain.

Exactly, I'd do that.

Yeah, I should do that.

It's a good list.

So if you have any other recommendations.

Feel free to slack those to me.

I'll update this list.

What are your thoughts on a read-only file system?

You know, that's good.

It's probably a good default, but it's an optimization.

You know, I make a point here that file system access is OK.

It just should be used for buffering, or for caching, or things like that.

But it shouldn't be used to persist data.

So perhaps,

to your point, your root file system should probably be configured at deployment to be read-only,

but then provide a scratch space that is writable.
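A minimal sketch of keeping writes confined to scratch space when the root file system is read-only (`readOnlyRootFilesystem: true` in the container's `securityContext`). It assumes a writable volume, such as an `emptyDir`, is mounted at the path in a hypothetical `SCRATCH_DIR` variable, falling back to the system temp directory. The data is treated as a disposable cache, never as the source of truth.

```python
import os
import tempfile
from pathlib import Path
from typing import Mapping, Optional


def scratch_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the writable scratch directory (SCRATCH_DIR, else the temp dir)."""
    env = os.environ if env is None else env
    path = Path(env.get("SCRATCH_DIR") or tempfile.gettempdir())
    path.mkdir(parents=True, exist_ok=True)
    return path


def cache_put(key: str, data: bytes, env: Optional[Mapping[str, str]] = None) -> Path:
    """Write a cache entry atomically; `key` must be a plain file name."""
    target = scratch_dir(env) / key
    # Write to a temp file in the same directory, then rename over the target
    # so readers never see a half-written file.
    fd, tmp_name = tempfile.mkstemp(dir=target.parent)
    with os.fdopen(fd, "wb") as fh:
        fh.write(data)
    os.replace(tmp_name, target)
    return target


def cache_get(key: str, env: Optional[Mapping[str, str]] = None) -> Optional[bytes]:
    """Return cached bytes, or None on a miss (the cache may vanish at any time)."""
    target = scratch_dir(env) / key
    return target.read_bytes() if target.exists() else None
```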

I think I'll take your list and send it to them so they can follow this.

There you go.

Follow up and let me know how that conversation goes.

So we've got five minutes left.

That's not enough time to really cover too much else.

Did this jog any memories, or are there any other questions you guys have?

Yes, I have one for Kubernetes.

What's your experience with Istio?

I see a lot of hype around it.

So I was hypothetically thinking of a solution where we can look up the geographic location

and then do canary releases,

like releasing a particular piece to India first,

and then to the rest of the world.

Yeah. So, by all means.

How is Istio for that, then?

Yeah, and I'm sure others here will have some insights on that as well.

I'll share my two cents on it.

My biggest regret with Istio is that service mesh functionality is not a first-class thing inside of Kubernetes, so we have to deploy these seemingly high-overhead sidecar containers automatically alongside all our containers when they go out.

That said, the pattern is really required to do some of the more advanced things when you're running microservices, for example, the releases you're talking about here.

The thing is that Kubernetes by itself,

with its Ingress and Service primitives, is perfect for deploying one app.

But then how do you

create an abstraction for routing traffic between two apps that provide the same functionality,

and then do traffic shaping between them? Or when you run a really complex microservices architecture,

how do you get all that tracing between your apps?

These are the stories that are lacking in Kubernetes out of the box.

So I think service meshes are more and more an eventual requirement as a company reaches sophistication in its use of Kubernetes.

But I would not recommend using a service mesh out of the box until you can appreciate the primitives that Kubernetes has.

It's kind of like when people start on ECS. I think that's great.

I mean, I personally don't like ECS.

We spent a lot of time with ECS, and I think ECS kind of shows the possibilities of what a container management platform can do.

And then once you've been using

ECS with Terraform or CloudFormation for six months or a year, you start to realize some things that you want to do are really hard.

And then you look over at Kubernetes, and you get those things out of the box.

So this is how I kind of look at service meshes.

I think you should start with the primitives that you get in Kubernetes.

And then once you realize the things that you're trying to do are really hard to do,

and that a service mesh will solve them,

that's the right time to start.

It's better to grow into that need than to, as Eric said, just have it out of the box and then try to use it for a problem that you don't currently have.

Yeah, I agree on that.

So GKE provides support for Istio out of the box; it's becoming a big supporter of Istio. And looking at some stats that I've seen, out of all of the traffic that goes to Kubernetes, 19% is being served by service meshes like Istio.

I don't know what the sample size is,

but that's what I've seen from random numbers here and there.

So I agree on that.

I have done Istio for a deployment in Kubernetes.

And I used Traefik for the ingress controller, and it looked like Istio wasn't a good fit for that particular deployment. But if you have a big requirement where you want to deploy a really complex solution for canary releases, that's mostly where I see Istio bringing value.

So it's

one way of solving that, right?

The other one is to use rich feature flags via something like LaunchDarkly or Flagr.

There are a couple of open source alternatives, and that model requires a bit of a change in your development model, and it requires knowledge of how to use feature flags effectively and safely.

But I think what I like about it is that it puts the control back in your court versus offloading it to the service mesh.

It's kind of like the service mesh is fixing a software problem with infrastructure, while feature flags fix it with software.

Yeah, we had quite a similar scenario, and what we did was fix it with feature flags.

That meant shaping our development to suit that scenario, where we could roll out features to selected users within our testing group.

And then they would do what they needed to do from there.

And then we could expand it over the next couple of weeks.

And so on.

Yep, plus you get the benefit of immediate rollbacks: you can disable it immediately just by flipping the flag off.
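A minimal sketch of the rollout model described above: a flag that is on for an allowlisted testing group, can be widened to a percentage of users, and can be switched off for everyone at once. This is a hypothetical in-process class, not the API of LaunchDarkly, Flagr, or any other product; hashing the user ID keeps each user in the same bucket across requests.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet


@dataclass
class RolloutFlag:
    """Hypothetical percentage-rollout flag with an allowlist and a kill switch."""

    name: str
    percent: int = 0                         # share of users (0-100) who get it
    allowlist: FrozenSet[str] = frozenset()  # e.g. the internal testing group
    enabled: bool = True                     # False turns it off for everyone

    def bucket(self, user_id: str) -> int:
        # Stable hash: the same user always lands in the same 0-99 bucket.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).digest()
        return int.from_bytes(digest[:8], "big") % 100

    def is_on(self, user_id: str) -> bool:
        if not self.enabled:  # the kill switch wins over everything else
            return False
        if user_id in self.allowlist:
            return True
        return self.bucket(user_id) < self.percent
```

Rolling back is a matter of setting `enabled = False` (or `percent = 0`); no redeploy and no traffic re-routing is involved.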

Exactly.

So I don't

think there's a black-and-white answer on it.

I think some change to the organization,

some change to the release process, making smaller, more frequent changes: all these things should be adopted, including trunk-based development.

Yeah, I agree.

Because I think introducing Istio for the idea behind feature flags puts a bit more overhead on the operations team and takes it away from the developers themselves.

So unless you're going to train your developers to use Istio to integrate those feature flags into the deployment,

I think you have a better chance using a tool like LaunchDarkly.

I'm starting to do that.

Yeah Yeah.

Good luck setting up the app with all this stuff in Minikube and doing appropriate tests and all that stuff for your development.

I want to know how it goes.

I still want to find some time to get my hands dirty beyond that.

But I may be doing it in a month or something.

It does seem like the long-term upkeep for that would be a headache.

Yeah. All right, guys.

We've reached the end of the hour here, so we've got to wrap things up.

So thank you so much for attending.

Remember, these office hours are hosted weekly.

You can register for the next one.

by going to cloudposse.com/office-hours.

Thanks again for sharing everyone.

Thank you, job for the live demo there.

The VS Code demo was really awesome.

A recording of this call is going to be posted to the office hours channel and syndicated to our podcast at podcast.cloudposse.com.

See you guys next week same time, same place.

Hey Eric, can I ask you a quick question?

Yeah, sure.

So I just ran into an issue like 10 minutes ago and thought, hey, office hours is happening.

All right, let's see.

I do have to run to a pediatrician appointment right away.

So let's see if I can help. Fargate Spot: have you managed to deploy a Fargate Spot task with Terraform?

Yes. So we use that; we have it.

We have an example.

I'm not going to say that it's a good example for you to start out with.

But we have a Terraform ECS Atlantis module in there.

We're deploying Atlantis as an ECS Fargate task using our library of Terraform modules. On the plus side, it's a great example of how to compose a lot of modules.

It's a great example of showing you how modules are composed of other modules.

And it's an advanced example.

This example is exactly why I don't like ECS and Terraform together; they don't go together well.

I'm with you there, and we think we have a pretty advanced infrastructure.

It's already in Fargate.

We just want to move to Fargate Spot.

So what did you say that module is called?

Oh stop.

Sorry Yeah.

No, no, no, no, no, no.

I haven't done Fargate Spot.

No worries, no worries.

We're going to try the capacity provider strategy and see if we can make it do something.

But no worries.

Figured I'd just check.

Yeah, thanks for bringing it up, though.

Sorry, I misunderstood.

Absolutely. All right.

Take care.

Bye.